
DNN-BASED DISTRIBUTED MULTICHANNEL MASK ESTIMATION FOR SPEECH ENHANCEMENT IN MICROPHONE ARRAYS

- Submitted by:

- Nicolas Furnon

- Last updated:

- 14 May 2020 - 4:09am

- Document Type:

- Presentation Slides

- Document Year:

- 2020

- Presenters:

- Nicolas Furnon

- Paper Code:

- 3406

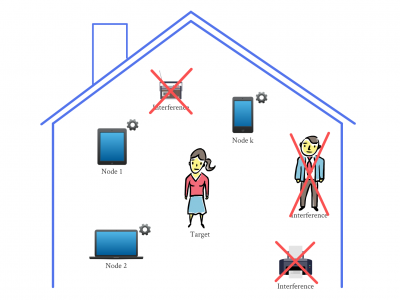

Multichannel processing is widely used for speech enhancement, but several limitations appear when these solutions are deployed in the real world. Distributed microphone arrays, which combine several devices that each carry a few microphones, are a viable alternative: they make it possible to exploit the many microphone-equipped devices we use in everyday life. In this context, we propose to extend the distributed adaptive node-specific signal estimation (DANSE) approach to a neural network framework. At each node, a local filter compresses the node's microphone signals into a single signal that is sent to the other nodes. Each node then uses a neural network to estimate a mask, which is used to compute a global multichannel Wiener filter. In an array of two nodes, we show that the signal received from the other node can be leveraged to predict the masks, and that it leads to better speech enhancement performance than when the mask estimation relies only on the local signals.
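The mask-based filtering described above can be sketched in Python with NumPy. This is a minimal, single-pass illustration resting on assumptions that are not taken from the abstract: the signals are already in the STFT domain; the speech covariance is estimated by mask-weighted averaging over frames and the mixture covariance by averaging over all frames; the filter is the standard MWF w = R_yy^{-1} R_ss e_ref; the local compression filter is itself a mask-based MWF on the node's own microphones; and the masks are random placeholders standing in for the neural network output. The function names `mwf_from_mask` and `node_output` are hypothetical, and the iterative updates of DANSE are omitted.

```python
import numpy as np


def mwf_from_mask(Y, mask, ref=0, eps=1e-8):
    """Mask-based multichannel Wiener filter (illustrative sketch).

    Y    : complex STFT of the input channels, shape (C, F, T)
    mask : speech mask in [0, 1], shape (F, T); a stand-in for the DNN output
    ref  : index of the reference channel
    Returns the filtered single-channel STFT, shape (F, T).
    """
    C, F, T = Y.shape
    out = np.empty((F, T), dtype=complex)
    for f in range(F):
        y = Y[:, f, :]  # (C, T) frames at frequency bin f
        m = mask[f]     # (T,) mask values at this bin
        # Speech covariance: frames weighted by the mask, normalized by total weight
        R_ss = (m * y) @ y.conj().T / max(m.sum(), eps)
        # Mixture covariance over all frames, with diagonal loading for stability
        R_yy = y @ y.conj().T / T + eps * np.eye(C)
        # MWF: w = R_yy^{-1} R_ss e_ref, then output w^H y
        w = np.linalg.solve(R_yy, R_ss[:, ref])
        out[f] = w.conj() @ y
    return out


def node_output(Y_local, z_remote, mask, ref=0):
    """Hypothetical node-specific step: filter the local microphones stacked
    with the compressed signal received from the other node."""
    Y_stack = np.concatenate([Y_local, z_remote[np.newaxis]], axis=0)
    return mwf_from_mask(Y_stack, mask, ref=ref)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    F, T = 257, 200
    # Random placeholder signals for two nodes with two microphones each
    Y1 = rng.standard_normal((2, F, T)) + 1j * rng.standard_normal((2, F, T))
    Y2 = rng.standard_normal((2, F, T)) + 1j * rng.standard_normal((2, F, T))
    # Placeholder masks; in the described method a neural network predicts them
    mask2_local = rng.uniform(size=(F, T))
    mask1_global = rng.uniform(size=(F, T))
    z2 = mwf_from_mask(Y2, mask2_local)      # node 2 compresses its mics to one signal
    s1 = node_output(Y1, z2, mask1_global)   # node 1 filters its mics plus z2
    print(s1.shape)  # (257, 200)
```

Estimating R_yy from all frames rather than as the sum of mask-weighted speech and noise covariances is only one common choice; the small diagonal loading `eps` keeps the linear solve well conditioned. A mask of all ones reduces the filter to (approximately) passing the reference channel through, and a mask of all zeros yields a zero output.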