EC-NAS: Energy Consumption Aware Tabular Benchmarks for Neural Architecture Search

- Submitted by: Pedram Bakhtiarifard
- Last updated: 10 April 2024 - 12:43pm
- Document Type: Poster
- Document Year: 2024
- Presenters: Pedram Bakhtiarifard
- Paper Code: MLSP-P1.10


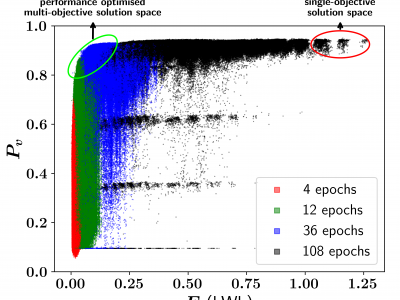

Energy consumption from the selection, training, and deployment of deep learning models has risen sharply in recent years. This work aims to facilitate the design of energy-efficient deep learning models that require fewer computational resources, prioritizing environmental sustainability by focusing on energy consumption. Neural architecture search (NAS) benefits from tabular benchmarks, which use precomputed performance statistics to evaluate NAS strategies cost-effectively. We advocate for including energy efficiency as an additional performance criterion in NAS. To this end, we introduce an enhanced tabular benchmark that includes energy consumption data for a variety of architectures. The benchmark, named EC-NAS, has been released as open source to advance research in energy-conscious NAS. EC-NAS incorporates a surrogate model to predict energy consumption, which reduces the energy spent on creating the dataset. Using multi-objective optimization algorithms, we demonstrate the potential of EC-NAS by revealing a trade-off between energy usage and accuracy. This suggests that it is feasible to identify energy-lean architectures with little or no compromise in performance.
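To make the idea of querying a tabular benchmark under two objectives concrete, here is a minimal sketch in Python. It is not the EC-NAS API and does not reproduce the multi-objective optimization algorithms used in the work: the table contents, the architecture names, and the helpers `query`, `dominates`, and `pareto_front` are all hypothetical, and the accuracy and energy values are made up for illustration. It only shows how precomputed metrics can be looked up and filtered to the architectures that are not beaten on both accuracy and energy at once.

```python
from typing import Iterable, List, NamedTuple


class Entry(NamedTuple):
    arch: str          # hypothetical architecture identifier
    accuracy: float    # validation accuracy in [0, 1] (made-up value)
    energy_kwh: float  # training energy in kWh (made-up value)


# Toy stand-in for a tabular benchmark: metrics are looked up, never recomputed.
TABLE = {
    "arch_a": Entry("arch_a", 0.94, 1.20),
    "arch_b": Entry("arch_b", 0.93, 0.40),
    "arch_c": Entry("arch_c", 0.90, 0.45),
    "arch_d": Entry("arch_d", 0.88, 0.15),
    "arch_e": Entry("arch_e", 0.94, 1.50),
}


def query(arch: str) -> Entry:
    """Return the precomputed metrics for one architecture."""
    return TABLE[arch]


def dominates(a: Entry, b: Entry) -> bool:
    """True if a is no worse than b on both objectives and strictly better on one."""
    no_worse = a.accuracy >= b.accuracy and a.energy_kwh <= b.energy_kwh
    strictly_better = a.accuracy > b.accuracy or a.energy_kwh < b.energy_kwh
    return no_worse and strictly_better


def pareto_front(entries: Iterable[Entry]) -> List[Entry]:
    """Keep only non-dominated entries, sorted by increasing energy."""
    pool = list(entries)
    front = [e for e in pool if not any(dominates(o, e) for o in pool)]
    return sorted(front, key=lambda e: e.energy_kwh)


front = pareto_front(query(name) for name in TABLE)
front_names = [e.arch for e in front]

for e in front:
    print(f"{e.arch}: accuracy={e.accuracy:.2f}, energy={e.energy_kwh:.2f} kWh")
```

In this toy table, `arch_b` gives up one point of accuracy relative to `arch_a` while using a third of the energy, which is the kind of trade-off an energy-aware search is meant to surface. The exhaustive filter is only practical for a small table; a search strategy would sample the table instead of enumerating it.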