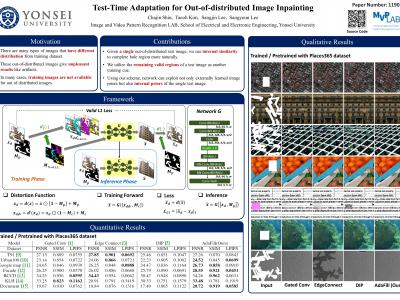

TEST-TIME ADAPTATION FOR OUT-OF-DISTRIBUTED IMAGE INPAINTING

- Submitted by: Chajin Shin
- Last updated: 23 September 2021 - 9:32pm
- Document Type: Presentation Slides
- Document Year: 2021
- Presenters: Chajin Shin
- Paper Code: 1190


Deep-learning-based image inpainting algorithms have shown strong performance thanks to powerful priors learned from large numbers of external natural images. However, they produce unpleasant results for test images whose distributions are far from those of the training images, because the models are biased toward the training images. In this paper, we propose a simple image inpainting algorithm with test-time adaptation, named AdaFill. Given a single out-of-distribution test image, our goal is to complete the hole region more naturally than pre-trained inpainting models do. To achieve this goal, we treat the remaining valid regions of the test image as an additional training cue, because natural images have strong internal similarities. Through this test-time adaptation, our network can explicitly exploit both the externally learned image priors in the pre-trained features and the internal priors of the test image. The experimental results show that AdaFill outperforms other models on various out-of-distribution test images. Furthermore, a model named ZeroFill, which is not pre-trained, also sometimes outperforms the pre-trained models.
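The idea of using the valid regions of the test image as a training cue can be illustrated with a small sketch. This is not the authors' implementation: the function names (`sample_child_mask`, `make_input`, `masked_l1`), the square "child mask" sampling, and the L1 loss are all assumptions chosen for illustration, and the network itself is omitted. The sketch only shows how one self-supervised training sample might be built from a single image: extra valid pixels are hidden artificially, and the reconstruction loss is measured only where ground truth exists.

```python
import random


def sample_child_mask(hole_mask, size, rng):
    """Pick a random size x size square and mark its valid pixels as hidden (1).

    Pixels inside the real hole are never marked, since they have no ground truth.
    """
    h, w = len(hole_mask), len(hole_mask[0])
    top = rng.randrange(h - size + 1)
    left = rng.randrange(w - size + 1)
    child = [[0] * w for _ in range(h)]
    for y in range(top, top + size):
        for x in range(left, left + size):
            if hole_mask[y][x] == 0:
                child[y][x] = 1
    return child


def make_input(image, hole_mask, child_mask):
    """Zero out both the real hole and the artificially hidden pixels."""
    return [
        [0.0 if (hole_mask[y][x] or child_mask[y][x]) else image[y][x]
         for x in range(len(image[0]))]
        for y in range(len(image))
    ]


def masked_l1(prediction, target, hole_mask):
    """Mean absolute error over valid (non-hole) pixels only."""
    total, count = 0.0, 0
    for y in range(len(target)):
        for x in range(len(target[0])):
            if hole_mask[y][x] == 0:
                total += abs(prediction[y][x] - target[y][x])
                count += 1
    return total / count if count else 0.0


# Toy 4x4 grayscale "test image" with a 2x2 hole in the lower-right corner.
image = [
    [0.1, 0.2, 0.3, 0.4],
    [0.2, 0.3, 0.4, 0.5],
    [0.3, 0.4, 0.0, 0.0],
    [0.4, 0.5, 0.0, 0.0],
]
hole_mask = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

rng = random.Random(0)
child_mask = sample_child_mask(hole_mask, size=2, rng=rng)
net_input = make_input(image, hole_mask, child_mask)

# A real network would map net_input to a prediction; here the unmodified
# input stands in for the prediction, purely to exercise the loss function.
loss = masked_l1(net_input, image, hole_mask)
```

In an actual test-time adaptation loop, a step like this would presumably be repeated with fresh random masks while the network weights are updated on the single test image, after which the adapted network fills the real hole.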