Documents

Presentation Slides

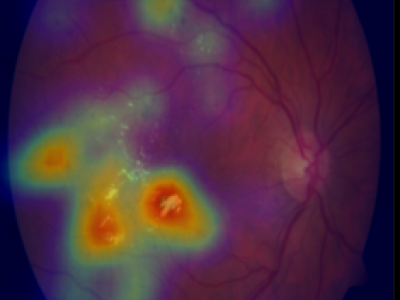

WEAKLY-SUPERVISED LOCALIZATION OF DIABETIC RETINOPATHY LESIONS IN RETINAL FUNDUS IMAGES

- Citation Author(s):

- Submitted by:

- Jan Koehler

- Last updated:

- 16 September 2017 - 10:03am

- Document Type:

- Presentation Slides

- Document Year:

- 2017

- Event:

- Presenters:

- Koehler, Jan

- Paper Code:

- 3463

- Categories:

- Log in to post comments

Convolutional neural networks (CNNs) show impressive performance for image classification and detection, extending heavily to the medical image domain. Nevertheless, medical experts are skeptical in these predictions as the nonlinear multilayer structure resulting in a classification outcome is not directly graspable. Recently, approaches have been shown which help the user to understand the discriminative regions within an image which are decisive for the CNN to conclude to a certain class. Although these approaches could help to build trust in the CNNs predictions, they are only slightly shown to work with medical image data which often poses a challenge as the decision for a class relies on different lesion areas scattered around the entire image. Using the DiaretDB1 dataset, we show that on retina images different lesion areas fundamental for diabetic retinopathy are detected on an image level with high accuracy, comparable or exceeding supervised methods. On lesion level, we achieve few false positives with high sensitivity, though, the network is solely trained on image-level labels which do not include information about existing lesions. Classifying between diseased and healthy images, we achieve an AUC of 0.954 on the DiaretDB1.