An Efficient Alternative to Network Pruning through Ensemble Learning

- Submitted by: Martin Poellot
- Last updated: 29 May 2020 - 8:29am
- Document Type: Presentation Slides
- Document Year: 2020
- Presenters: Martin Pöllot
- Paper Code: 2638


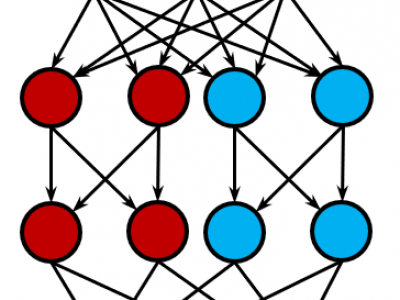

Convolutional Neural Networks (CNNs) are currently the best tool for classifying image content. CNNs are trained to develop generalized representations, in the form of distinctive features, that distinguish different classes. During this process, some filters may develop identical or similar weights. In this case, the redundant filters can be pruned without damaging accuracy.

Unlike conventional pruning methods, we investigate the possibility of replacing a full-sized convolutional neural network with an ensemble of its narrow versions. Empirically, we show that the combination of two narrow networks, which together have only half of the original parameter count, can match and even outperform the full-sized original network. In other words, a pruning rate of 50% can be achieved without any compromise on accuracy. Furthermore, we introduce a novel approach to ensemble learning called ComboNet, which increases the accuracy of the ensemble even further.
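The parameter arithmetic behind the "half of the original parameter count" claim can be illustrated with a small sketch. The abstract does not say how the narrow networks are constructed, so the code below assumes, purely for illustration, that each narrow network halves the channel width of every layer. The layer widths, the helper names `conv_params`, `network_params`, and `average_ensemble`, and the plain probability averaging are all hypothetical; in particular, the averaging is a generic ensemble baseline and not ComboNet.

```python
def conv_params(c_in, c_out, k=3, bias=True):
    """Learnable parameters in one k x k convolutional layer."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def network_params(widths, in_channels=3, k=3):
    """Total parameters of a plain stack of conv layers with the given output widths."""
    total, c_in = 0, in_channels
    for c_out in widths:
        total += conv_params(c_in, c_out, k)
        c_in = c_out
    return total


def average_ensemble(prob_vectors):
    """Average per-class probabilities over several networks (generic baseline, not ComboNet)."""
    n = len(prob_vectors)
    n_classes = len(prob_vectors[0])
    return [sum(p[i] for p in prob_vectors) / n for i in range(n_classes)]


# Hypothetical layer widths; the real architecture is not given in the abstract.
full_widths = [64, 128, 256]
# Assumption: a "narrow version" halves every layer's channel count.
narrow_widths = [w // 2 for w in full_widths]

full = network_params(full_widths)
narrow = network_params(narrow_widths)
# Two narrow networks relative to one full-sized network.
ratio = 2 * narrow / full

print(f"full: {full}, one narrow: {narrow}, two narrow / full: {ratio:.3f}")
print(average_ensemble([[0.6, 0.4], [0.2, 0.8]]))
```

Under this assumption, halving both the input and output channels of a layer cuts its weight count to roughly a quarter, so two such networks together hold about half the parameters of the original. The first layer is the exception, since its input channel count is fixed by the image, which is why the ratio lands slightly above one half.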