Documents

Presentation Slides

ICASSP 2022 - Improved Language Identification Through Cross-Lingual Self-Supervised Learning

- Citation Author(s):

- Submitted by:

- Andros Tjandra

- Last updated:

- 23 June 2022 - 9:15am

- Document Type:

- Presentation Slides

- Document Year:

- 2022

- Event:

- Presenters:

- Andros Tjandra

- Paper Code:

- SPE-30.3

- Categories:

- Log in to post comments

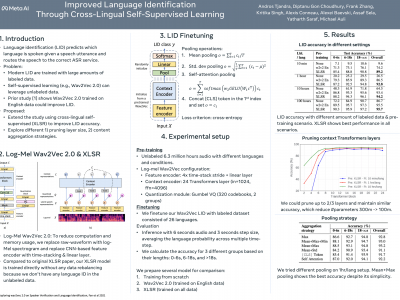

Language identification greatly impacts the success of downstream tasks such as automatic speech recognition. Recently, self-supervised speech representations learned by wav2vec 2.0 have been shown to be very effective for a range of speech tasks. We extend previous self-supervised work on language identification by experimenting with pre-trained models which were learned on real-world unconstrained speech in multiple languages and not just on English. We show that models pre-trained on many languages perform better and enable language identification systems that require very little labeled data to perform well. Results on a 26 languages setup show that with only 10 minutes of labeled data per language, a cross-lingually pre-trained model can achieve over 89.2% accuracy.

Comments

Uploaded ICASSP SPE-30.3

Uploaded ICASSP SPE-30.3 presentation.