A multi-channel/multi-speaker interactive 3D Audio-Visual Speech Corpus in Mandarin

- Submitted by: Rongfeng Su
- Last updated: 14 October 2016 - 10:40am
- Document Type: Presentation Slides
- Document Year: 2016
- Presenters: Rongfeng Su
- Paper Code: 131


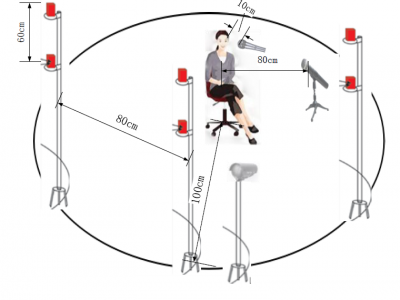

This paper presents a multi-channel/multi-speaker 3D audio-visual corpus for Mandarin continuous speech recognition and related fields such as speech visualization and speech synthesis. The corpus comprises about 18k utterances from 24 speakers, about 20 hours in total. For each utterance, the audio streams were recorded by two professional microphones, one in the near field and one in the far field, while a marker-based 3D facial motion capture system with six infrared cameras acquired the 3D video streams. The corresponding 2D video streams were captured by an additional camera as a supplement. The paper describes a data processing procedure for synchronizing the audio and video streams, detecting and correcting outliers, and removing head motion during recording. Finally, the results of the data processing are discussed. To date, this corpus is the largest 3D audio-visual corpus for Mandarin.
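To make the outlier detection and correction step more concrete, the following is a minimal sketch of one common approach, not the method used in the paper, which this abstract does not specify. It assumes a single coordinate of a single facial marker is available as a list of per-frame values in millimetres. A frame is flagged when it lies far from the median of its neighbours, and flagged frames are replaced by linear interpolation between the nearest valid frames. The function names, the window size, the `max_dev` threshold, and the sample values are all hypothetical.

```python
from statistics import median


def detect_outliers(track, window=5, max_dev=2.0):
    """Flag frames lying more than max_dev from the local median.

    track   : per-frame values of one marker coordinate (assumed in mm)
    window  : number of frames in the sliding window (assumption)
    max_dev : allowed deviation from the local median (assumption)
    """
    half = window // 2
    flagged = []
    for i, value in enumerate(track):
        # The window is truncated at the start and end of the track.
        neighbours = track[max(0, i - half): i + half + 1]
        if abs(value - median(neighbours)) > max_dev:
            flagged.append(i)
    return flagged


def correct_outliers(track, flagged):
    """Replace flagged frames by linear interpolation between valid frames."""
    bad = set(flagged)
    good = [i for i in range(len(track)) if i not in bad]
    if not good:
        raise ValueError("no valid frames to interpolate from")
    fixed = list(track)
    for i in flagged:
        left = max((g for g in good if g < i), default=None)
        right = min((g for g in good if g > i), default=None)
        if left is None:
            # No valid frame before i: copy the next valid value.
            fixed[i] = track[right]
        elif right is None:
            # No valid frame after i: copy the previous valid value.
            fixed[i] = track[left]
        else:
            t = (i - left) / (right - left)
            fixed[i] = track[left] + t * (track[right] - track[left])
    return fixed


# Hypothetical marker coordinate with one spurious jump at frame 3.
x = [10.0, 10.5, 11.0, 30.0, 12.0, 12.5, 13.0]
bad_frames = detect_outliers(x)
clean = correct_outliers(x, bad_frames)
```

In this sketch a fixed threshold in physical units is used rather than a statistical one, because smooth marker trajectories can have a local spread close to zero, which makes deviation-based scores unstable. A real pipeline would apply the same idea to all three coordinates of every marker.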