PARAMETRIC HEAR THROUGH EQUALIZATION FOR AUGMENTED REALITY AUDIO

- Submitted by:

- Rishabh Gupta

- Last updated:

- 8 May 2019 - 6:02am

- Document Type:

- Poster

- Document Year:

- 2019

- Presenters:

- Rishabh Gupta

- Paper Code:

- 3160

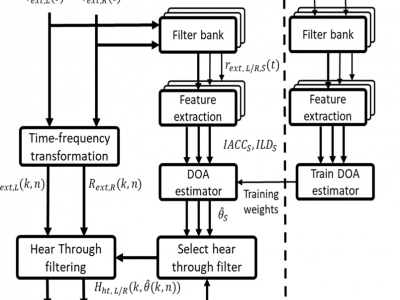

Augmented Reality (AR) audio applications require headphones to be acoustically transparent, so that real sounds pass through unaltered and fuse naturally with virtual sounds. In this paper, we consider hear-through (HT) equalization (EQ) for multiple-source scenarios using closed-back circumaural headsets. An AR headset prototype, described in our previous study, is used to capture real sounds with external microphones and to compute directional HT filters using adaptive filtering. That method is best suited to single-source scenarios, because a single best filter, corresponding to the estimated source direction, is used for HT filtering. We therefore propose parametric HT EQ for multiple-source scenarios in the time-frequency domain: a sub-band Direction of Arrival (DOA) is estimated using neural networks (NN), and the corresponding HT filters are selected from a pre-computed database. An objective analysis based on spectral difference (SD) is used to evaluate the different HT EQ filters, with the open-ear scenario as the reference. Dummy-head measurements with band-limited pink noise and real source signals show that the proposed integrated system significantly improves performance over the conventional HT system in multiple-source scenarios.
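The idea of parametric HT EQ can be illustrated with a minimal sketch. It picks one pre-computed HT filter per sub-band and per STFT frame according to a DOA index, then scores the result against a reference using a spectral difference. Several assumptions are not from the source. The NN DOA estimator is not reproduced, so per-sub-band DOA indices are simply passed in. The filters are applied as complex gains on STFT bins. The function names, `band_edges`, `filter_db`, and the toy data are all hypothetical. SD is taken here as the mean absolute log-magnitude difference in dB, which may differ from the exact definition used in the paper.

```python
import numpy as np


def band_index_of_bins(n_bins, band_edges):
    """Map each STFT bin to its sub-band; band_edges are bin indices [0, ..., n_bins]."""
    return np.searchsorted(band_edges, np.arange(n_bins), side="right") - 1


def parametric_ht_eq(X, band_doa, band_edges, filter_db):
    """Apply a per-sub-band, per-frame HT filter selected by DOA index.

    X         : (n_bins, n_frames) STFT of the external-microphone signal
    band_doa  : (n_bands, n_frames) integer DOA index per sub-band and frame
                (assumption: stand-in for the NN estimator's output)
    filter_db : (n_doas, n_bins) pre-computed HT filter frequency responses
                (hypothetical database layout)
    """
    n_bins = X.shape[0]
    band_of_bin = band_index_of_bins(n_bins, band_edges)
    doa_per_bin = band_doa[band_of_bin, :]                   # (n_bins, n_frames)
    H = filter_db[doa_per_bin, np.arange(n_bins)[:, None]]   # H[k, t] = filter_db[doa, k]
    return H * X


def spectral_difference_db(Y, R, eps=1e-12):
    """Assumed SD: mean absolute log-magnitude difference (dB) of Y vs. reference R."""
    diff_db = 20 * np.log10((np.abs(Y) + eps) / (np.abs(R) + eps))
    return float(np.mean(np.abs(diff_db)))


# Toy example: 4 bins, 3 frames, 2 sub-bands, 2 candidate directions.
# Hypothetical filters: direction 0 -> flat gain 1, direction 1 -> flat gain 2.
filter_db = np.array([[1.0, 1.0, 1.0, 1.0],
                      [2.0, 2.0, 2.0, 2.0]])
band_edges = np.array([0, 2, 4])          # band 0 = bins 0-1, band 1 = bins 2-3
band_doa = np.array([[0, 1, 0],           # DOA index per frame in band 0
                     [1, 1, 0]])          # DOA index per frame in band 1
X = np.ones((4, 3), dtype=complex)

# Stand-in "open ear" reference: the gain each (bin, frame) should receive.
gains = np.array([[1, 2, 1],
                  [1, 2, 1],
                  [2, 2, 1],
                  [2, 2, 1]], dtype=float)
R = gains * X

# Parametric HT EQ vs. a conventional single filter (direction 0) for all bins.
Y_param = parametric_ht_eq(X, band_doa, band_edges, filter_db)
Y_single = filter_db[0][:, None] * X

print(spectral_difference_db(Y_param, R))   # 0.0
print(spectral_difference_db(Y_single, R))  # about 3.01 dB
```

In this toy case, the single-filter output is wrong by about 6.02 dB in half of the time-frequency cells, which averages to roughly 3.01 dB. The sub-band selection reproduces the reference exactly because it is given the correct DOA indices. With real NN estimates and measured filters, the SD would not be zero.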