Research Manuscript

In2Out: Fine-Tuning Video Inpainting Model for Video Outpainting using Hierarchical Discriminator

- DOI: 10.60864/z4pm-br39
- Citation Author(s):
- Submitted by: Sangwoo Youn
- Last updated: 6 July 2025 - 6:50am
- Document Type: Research Manuscript
- Document Year: 2025
- Event:
- Presenters: Sangwoo Youn
- Paper Code: 2116
- Categories:


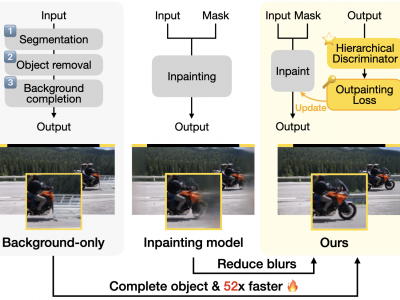

Video outpainting poses a distinct challenge: extending a video's borders while keeping the new regions consistent with the given content. In this paper, we propose using video inpainting models, which excel at learning object flow and at reconstruction, for outpainting, rather than generating only the background as existing methods do. However, directly applying or fine-tuning inpainting models for outpainting has proven ineffective and often produces blurry results. Our extensive experiments on discriminator designs reveal that the critical missing component in outpainting fine-tuning is a discriminator that can effectively assess the perceptual quality of the extended areas. To address this limitation, we separate the objectives of adversarial training into global and local goals and introduce a hierarchical discriminator that meets both. We also develop a specialized outpainting loss function that leverages both local and global features of the discriminator. Fine-tuning with this adversarial loss improves the generator's ability to produce outpainted scenes that are both visually appealing and globally coherent. Our proposed method outperforms state-of-the-art methods both quantitatively and qualitatively. Supplementary materials, including the demo video and the code, are available on SigPort.
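
The split between global and local adversarial objectives can be illustrated with a small sketch. This is not the paper's implementation: the function names (`outpaint_mask`, `hierarchical_generator_loss`), the use of a hinge-style generator loss, the per-pixel score map, and the two weights are all assumptions made for illustration. The global term scores the whole frame, while the local term scores only the extended border columns.

```python
def outpaint_mask(height, width, pad_left, pad_right):
    """Build a height x width mask: 1 marks a column to generate, 0 marks given content.

    Hypothetical helper; the layout (left/right padding only) is an assumption.
    """
    if pad_left < 0 or pad_right < 0 or pad_left + pad_right >= width:
        raise ValueError("padding must be non-negative and leave some given content")
    row = [1 if (x < pad_left or x >= width - pad_right) else 0 for x in range(width)]
    return [row[:] for _ in range(height)]


def hinge_generator_loss(scores):
    """Hinge-style generator loss: the negative mean of the discriminator scores."""
    return -sum(scores) / len(scores)


def hierarchical_generator_loss(score_map, mask, global_weight=1.0, local_weight=1.0):
    """Combine a global term (all positions) with a local term (outpainted positions).

    Assumes score_map holds one discriminator score per mask position; both
    weights are illustrative defaults, not values from the paper.
    """
    all_scores = [s for row in score_map for s in row]
    local_scores = [
        s
        for score_row, mask_row in zip(score_map, mask)
        for s, m in zip(score_row, mask_row)
        if m == 1
    ]
    if not local_scores:
        raise ValueError("mask marks no outpainted positions")
    global_term = hinge_generator_loss(all_scores)
    local_term = hinge_generator_loss(local_scores)
    return global_weight * global_term + local_weight * local_term


# Usage: a 2 x 4 frame with one generated column on each side.
mask = outpaint_mask(2, 4, pad_left=1, pad_right=1)
score_map = [[0.5, 1.0, 1.0, -0.5], [0.5, 1.0, 1.0, -0.5]]
loss = hierarchical_generator_loss(score_map, mask)
```

In this toy setup the given center columns receive high scores, so the global term alone looks favorable, while the local term isolates the weaker scores on the generated borders. That is the intuition behind giving the extended areas their own objective.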