
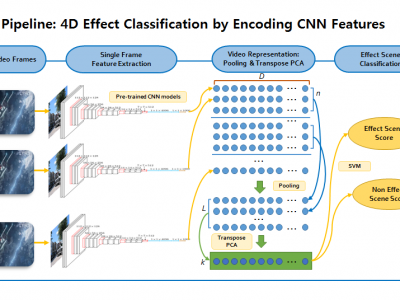

4D Effect Classification by Encoding CNN Features

- Submitted by: Thomhert Siadari
- Last updated: 14 September 2017 - 3:13am
- Document Type: Presentation Slides
- Document Year: 2017

- Presenters: Thomhert S. Siadari
- Paper Code: WP-L6.6

4D effects are physical effects, simulated in sync with videos, movies, and games, that augment the events occurring in a story or a virtual world. 4D effects commonly used in immersive media include seat motion, vibration, flash, wind, water, scent, thunderstorm, snow, and fog. Currently, the physical effects in a video are identified mainly by human experts. Although 4D effects promise an immersive and entertaining experience, this manual production has been the main obstacle to their faster and wider adoption. In this paper, we use pre-trained Convolutional Neural Network (CNN) models to extract local visual features and propose a new representation method that combines the extracted features into video-level features. Classification is performed with a Support Vector Machine (SVM). We conduct comprehensive experiments to investigate different CNN architectures and feature types for the 4D effect classification task, and we compare the baseline average-pooling method with our proposed video-level representation. Our framework outperforms the baseline by up to 2-3% in mean average precision (mAP).
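The abstract names three concrete stages: average pooling of frame-level CNN features as the baseline video-level representation, SVM classification, and evaluation by mAP. The sketch below wires those stages together in plain Python, assuming the per-frame CNN features have already been extracted; the CNN itself and the proposed encoding are not shown, because the abstract does not describe the encoding. Several pieces are assumptions rather than the authors' setup: the task is treated as multi-label with one binary SVM per effect class, the linear SVM is fitted with a simple Pegasos-style subgradient solver as a stand-in (the abstract does not name a solver or kernel), and AP is computed as the mean precision at each positive's rank. All function names, the toy features, and the class names `wind`/`vibration` are hypothetical.

```python
import random
from typing import List, Sequence

Vector = List[float]


def average_pool(frame_features: Sequence[Sequence[float]]) -> Vector:
    """Baseline video-level feature: element-wise mean over per-frame CNN features."""
    n = len(frame_features)
    dim = len(frame_features[0])
    return [sum(frame[d] for frame in frame_features) / n for d in range(dim)]


def train_linear_svm(X: Sequence[Sequence[float]], y: Sequence[int],
                     lam: float = 0.01, epochs: int = 200, seed: int = 0) -> Vector:
    """Binary linear SVM (labels +1/-1) fitted with a Pegasos-style stochastic
    subgradient method on the L2-regularized hinge loss (a stand-in solver).
    A constant 1.0 input is appended so the last weight acts as a (regularized) bias."""
    rng = random.Random(seed)
    Xb = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xb[0])
    t = 0
    for _ in range(epochs):
        for i in rng.sample(range(len(Xb)), len(Xb)):  # shuffled pass over the data
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, Xb[i]))
            w = [(1.0 - eta * lam) * wj for wj in w]  # step on the L2 term
            if margin < 1.0:  # hinge loss active: step toward classifying x_i correctly
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, Xb[i])]
    return w


def decision(w: Vector, x: Sequence[float]) -> float:
    """Signed SVM score for one video-level feature vector (bias input appended)."""
    return sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))


def one_vs_rest_scores(X_train, Y_train, X_test) -> List[Vector]:
    """One binary SVM per effect class; returns a (videos x classes) score matrix."""
    n_classes = len(Y_train[0])
    models = [train_linear_svm(X_train, [1 if row[c] else -1 for row in Y_train])
              for c in range(n_classes)]
    return [[decision(w, x) for w in models] for x in X_test]


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AP for one class: mean of the precision values at the rank of each positive."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits, precisions = 0, []
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(score_matrix, label_matrix) -> float:
    """mAP: per-class AP averaged over all effect classes (columns)."""
    n_classes = len(score_matrix[0])
    aps = [average_precision([row[c] for row in score_matrix],
                             [row[c] for row in label_matrix])
           for c in range(n_classes)]
    return sum(aps) / n_classes


if __name__ == "__main__":
    # Made-up 2-D "CNN features" for 4 videos x 3 frames (real features are far larger).
    videos = [
        [[0.9, 0.1], [0.8, 0.2], [1.0, 0.0]],
        [[0.7, 0.2], [0.9, 0.1], [0.8, 0.3]],
        [[0.1, 0.9], [0.2, 0.8], [0.0, 1.0]],
        [[0.3, 0.7], [0.1, 0.9], [0.2, 0.8]],
    ]
    X = [average_pool(v) for v in videos]
    Y = [[1, 0], [1, 0], [0, 1], [0, 1]]  # hypothetical classes: [wind, vibration]
    scores = one_vs_rest_scores(X, Y, X)  # scored on training data, for illustration only
    print(mean_average_precision(scores, Y))  # prints 1.0
```

Replacing `average_pool` with a different video-level encoding would leave the SVM and mAP stages untouched, which is the comparison the abstract describes; the toy data here is separable, so the perfect mAP says nothing about real performance.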