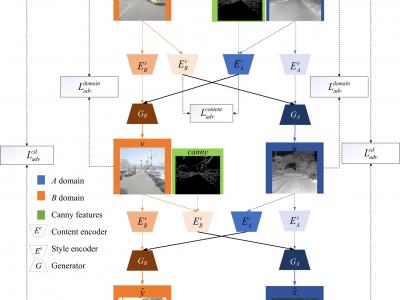

CannyGAN: Edge-Preserving Image Translation with Disentangled Features

- Submitted by: Tianren Wang

- Last updated: 20 September 2019 - 11:30am

- Document Type: Poster

- Document Year: 2019

- Presenters: Tianren Wang

- Paper Code: 3562

The image-to-image translation task is often associated with missing texture and edge information. In this paper, we propose a framework that translates images while preserving more realistic textures and details. To this end, we disentangle the samples into a shared content space and a domain-specific style space. Then, because outlines and textures in the source domain are blurred, we introduce the classic Canny edge detection algorithm to encode boundary and edge information in the content latent space. We test the proposed method in a thermal-to-visible image translation scenario, and the experimental results demonstrate that it outperforms several other state-of-the-art models.
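The classic Canny detector referred to in the abstract is a fixed, non-learned pipeline: Gaussian smoothing, Sobel gradients, non-maximum suppression, and double-threshold hysteresis. The following is a minimal NumPy sketch of that textbook algorithm for illustration only. It is not the authors' implementation, and it does not show how CannyGAN encodes the resulting edge map in the content latent space. The kernel size, `sigma`, and the choice to express `low`/`high` as fractions of the strongest gradient are assumptions. `edge_consistency` is a hypothetical helper for comparing the edges of a source image and its translation; it is not a loss taken from the paper (it is not even differentiable).

```python
import numpy as np

# Sobel kernels (applied as correlation): SOBEL_X responds to left-to-right
# intensity changes, SOBEL_Y to top-to-bottom changes (rows grow downward).
SOBEL_X = np.array([[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T


def gaussian_kernel(size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Normalized 2-D Gaussian used to suppress noise before differentiation."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()


def correlate2d(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Same-size 2-D correlation with edge padding (plain NumPy, no SciPy)."""
    kh, kw = kernel.shape
    padded = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def non_max_suppression(mag: np.ndarray, angle: np.ndarray) -> np.ndarray:
    """Keep only pixels that are local maxima along the gradient direction."""
    h, w = mag.shape
    out = np.zeros_like(mag)
    deg = np.rad2deg(angle) % 180.0  # fold direction into [0, 180), then 4 bins
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            a = deg[y, x]
            if a < 22.5 or a >= 157.5:  # horizontal gradient: left/right
                n1, n2 = mag[y, x - 1], mag[y, x + 1]
            elif a < 67.5:              # down-right / up-left diagonal
                n1, n2 = mag[y - 1, x - 1], mag[y + 1, x + 1]
            elif a < 112.5:             # vertical gradient: up/down
                n1, n2 = mag[y - 1, x], mag[y + 1, x]
            else:                       # down-left / up-right diagonal
                n1, n2 = mag[y - 1, x + 1], mag[y + 1, x - 1]
            if mag[y, x] >= n1 and mag[y, x] >= n2:
                out[y, x] = mag[y, x]
    return out


def hysteresis(nms: np.ndarray, low: float, high: float) -> np.ndarray:
    """Double threshold: keep strong pixels plus weak pixels 8-connected to them."""
    h, w = nms.shape
    strong = nms >= high
    weak = (nms >= low) & ~strong
    edges = strong.copy()
    while True:
        padded = np.pad(edges, 1)  # boolean array padded with False
        touching = np.zeros_like(edges)
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                touching |= padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        grown = edges | (weak & touching)
        if np.array_equal(grown, edges):
            return edges
        edges = grown


def canny(img: np.ndarray, low: float = 0.1, high: float = 0.3,
          sigma: float = 1.0) -> np.ndarray:
    """Boolean edge map of a 2-D grayscale image.

    Assumption: `low` and `high` are fractions of the strongest gradient.
    """
    smoothed = correlate2d(img.astype(float), gaussian_kernel(5, sigma))
    gx = correlate2d(smoothed, SOBEL_X)
    gy = correlate2d(smoothed, SOBEL_Y)
    mag = np.hypot(gx, gy)
    peak = mag.max()
    if peak > 1e-8:  # skip normalizing (near-)flat images so noise is not amplified
        mag = mag / peak
    nms = non_max_suppression(mag, np.arctan2(gy, gx))
    return hysteresis(nms, low, high)


def edge_consistency(source: np.ndarray, translated: np.ndarray) -> float:
    """Hypothetical check: fraction of pixels whose edge label differs."""
    return float(np.mean(canny(source) != canny(translated)))
```

For example, `canny(step)` on a 20×20 image whose left half is 0 and right half is 1 marks the column(s) at the step and nothing else. In a real pipeline one would more likely call OpenCV's `cv2.Canny(image, threshold1, threshold2)` on 8-bit images; note that its thresholds are absolute gradient values rather than the relative fractions assumed in this sketch.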