CONTENT ADAPTIVE VIDEO SUMMARIZATION USING SPATIO-TEMPORAL FEATURES

- Submitted by: Hyunwoo Nam
- Last updated: 15 September 2017 - 12:29am
- Document Type: Poster
- Document Year: 2017
- Presenters: Hyunwoo Nam
- Paper Code: 3574

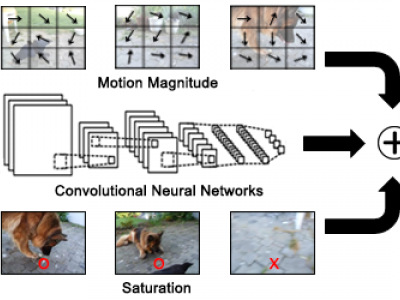

This paper proposes a video summarization method based on novel spatio-temporal features that combine motion magnitude, object class prediction, and saturation. Motion magnitude measures how much motion a video contains, object class prediction indicates which objects appear in it, and saturation measures its colorfulness. Convolutional neural networks (CNNs) are used for object class prediction. The normalized features are summed per shot, the shots are ranked by this sum in descending order, and the summary is formed from the highest-ranking shots. The ranking can also be conditioned on object class, in which case the highest-ranking shots for each object class are proposed as alternative summaries of the input video. The method is evaluated on the SumMe dataset, and the results show that it outperforms the worst human-generated summary and most other state-of-the-art video summarization methods.
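The shot-ranking step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Shot` container, min-max normalization, equal feature weights, the greedy fill of a length budget (`budget_ratio`, 15% by default), and class conditioning by filtering on each shot's top predicted label are all hypothetical choices. Motion magnitude and the CNN object score are taken as precomputed numbers; only saturation is computed here, as the mean HSV saturation via Python's `colorsys` module.

```python
from colorsys import rgb_to_hsv
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Shot:
    """Hypothetical per-shot container; feature definitions are assumptions."""
    index: int            # temporal position of the shot in the video
    duration: float       # shot length in seconds
    motion: float         # motion magnitude, e.g. mean optical-flow magnitude
    object_score: float   # object class prediction, e.g. top CNN class probability
    saturation: float     # mean HSV saturation of the shot's frames, in [0, 1]
    object_class: str     # top predicted class label for the shot


def mean_saturation(pixels: Sequence[Tuple[int, int, int]]) -> float:
    """Average HSV saturation of 8-bit (r, g, b) pixels; 0.0 for no pixels."""
    if not pixels:
        return 0.0
    total = 0.0
    for r, g, b in pixels:
        _, s, _ = rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        total += s
    return total / len(pixels)


def min_max(values: List[float]) -> List[float]:
    """Rescale values to [0, 1]; a constant feature maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def rank_shots(shots: List[Shot]) -> List[Tuple[float, Shot]]:
    """Score each shot by the sum of its normalized features, highest first."""
    motion = min_max([s.motion for s in shots])
    obj = min_max([s.object_score for s in shots])
    sat = min_max([s.saturation for s in shots])
    scored = [(m + o + c, s) for m, o, c, s in zip(motion, obj, sat, shots)]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


def summarize(shots: List[Shot], budget_ratio: float = 0.15,
              target_class: Optional[str] = None) -> List[int]:
    """Greedily keep top-ranked shots that fit in budget_ratio of the video.

    If target_class is given, only shots predicted as that class are kept,
    yielding a class-conditioned summary. Returns shot indices in time order.
    """
    if not shots:
        return []
    budget = budget_ratio * sum(s.duration for s in shots)
    used, chosen = 0.0, []
    for _, shot in rank_shots(shots):
        if target_class is not None and shot.object_class != target_class:
            continue
        if used + shot.duration <= budget:
            chosen.append(shot.index)
            used += shot.duration
    return sorted(chosen)


# Toy example with made-up feature values (not data from the paper).
shots = [
    Shot(0, 4.0, motion=2.0, object_score=0.90, saturation=0.6, object_class="dog"),
    Shot(1, 6.0, motion=0.5, object_score=0.40, saturation=0.2, object_class="car"),
    Shot(2, 3.0, motion=3.5, object_score=0.70, saturation=0.8, object_class="dog"),
    Shot(3, 5.0, motion=1.0, object_score=0.95, saturation=0.5, object_class="car"),
]
print(summarize(shots, budget_ratio=0.4))                      # [0, 2]
print(summarize(shots, budget_ratio=0.4, target_class="car"))  # [3]
```

In the toy example the shots rank 2, 0, 3, 1, and a 40% budget (7.2 s of 18 s) admits shots 2 and 0. Ranking over all shots before filtering keeps the normalization identical between the generic and the class-conditioned summaries; normalizing within each class would be an equally plausible reading of the abstract.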