Detection and Synchronization of Video Sequences for Event Reconstruction

- Submitted by: Marcos Cirne
- Last updated: 17 September 2019, 11:27am
- Document Type: Poster
- Document Year: 2019

- Presenters: Marcos Cirne
- Paper Code: 2463

With the ever-growing number of unexpected threats in crowded places, such as terrorist attacks, it is paramount to develop techniques that help investigators reconstruct every detail of an event of interest. To extract reliable information about the event, all available clues must be exploited jointly. Today's sources of information are plentiful and varied, since important events affecting many people are typically documented by several different sources. Both witnesses' smartphones and security cameras can provide valuable information from multiple viewpoints and time instants: "the eyes of the crowd."

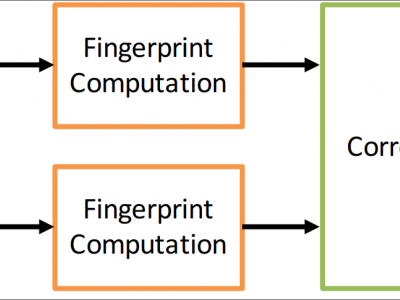

In this paper, we focus on the specific problem of automatically detecting and temporally synchronizing videos that depict the same event of interest. Videos can be either near-duplicates (i.e., edited copies of the same original source) or sequences shot by different users from different vantage points. The proposed method relies on a video fingerprinting technique that describes how the semantic content of a video evolves over time. The solution assumes no a priori information about camera locations and exploits only visual cues, without relying on the audio channel.
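The abstract does not say how the fingerprints are compared. What follows is therefore only a minimal Python sketch of one common way a temporal fingerprint can drive both detection and synchronization. It is not the authors' method. The sketch assumes each video has already been reduced to a sequence of per-frame descriptor vectors sampled at the same frame rate; the fingerprint extractor itself is out of scope. It then tries every candidate time lag, scores each lag by the mean cosine similarity over the overlapping frames, and flags two videos as showing the same event when the best score reaches a threshold. The names `best_offset` and `same_event`, and the parameters `threshold` and `min_overlap`, are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical representation: one descriptor vector per frame (the fingerprint).
Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two descriptors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def best_offset(fp_a: List[Vector], fp_b: List[Vector],
                min_overlap: int = 3) -> Tuple[int, float]:
    """Brute-force search for the lag that best aligns two fingerprints.

    Convention: frame i of fp_a is matched with frame i - lag of fp_b.
    Each lag is scored by the mean cosine similarity over the overlapping
    frames; lags with fewer than min_overlap common frames are skipped.
    Assumes both fingerprints use the same frame rate.
    """
    best_lag, best_score = 0, -math.inf
    for lag in range(-(len(fp_b) - 1), len(fp_a)):
        # Indices i valid in fp_a such that i - lag is also valid in fp_b.
        start, stop = max(0, lag), min(len(fp_a), len(fp_b) + lag)
        sims = [cosine(fp_a[i], fp_b[i - lag]) for i in range(start, stop)]
        if len(sims) < min_overlap:
            continue
        score = sum(sims) / len(sims)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag, best_score


def same_event(fp_a: List[Vector], fp_b: List[Vector],
               threshold: float = 0.9, min_overlap: int = 3) -> Tuple[bool, int]:
    """Detection step: match if the best-aligned similarity reaches threshold.

    Returns (is_match, lag); the lag is only meaningful when is_match is True.
    The threshold value is an illustrative assumption, not a tuned parameter.
    """
    lag, score = best_offset(fp_a, fp_b, min_overlap)
    return score >= threshold, lag
```

For example, if `fp_b` is a clip cut from `fp_a` starting at its third frame (`fp_b = fp_a[2:7]`), `best_offset(fp_a, fp_b)` returns a lag of 2. The exhaustive search evaluates on the order of N·M frame pairs for sequences of N and M frames. A practical system would likely use a faster correlation and would also need to handle edited copies whose frame rate or playback speed differs.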