Enhancing targeted transferability via suppressing high-confidence labels

- DOI: 10.60864/1hq9-z874
- Citation Author(s):
- Submitted by: Frank Zeng
- Last updated: 17 November 2023 - 12:05pm
- Document Type: Poster
- Document Year: 2023
- Event:
- Presenters: Hui Zeng
- Paper Code: MP2.PB.10
- Categories:

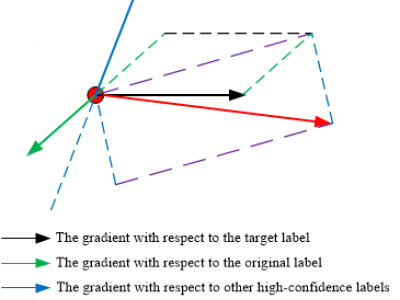

While extensive studies have pushed the limits of transferability for untargeted attacks, transferable targeted attacks remain extremely challenging. This paper observes that labels with high confidence in the source model are also likely to retain high confidence in the target model. This simple, intuitive observation motivates us to handle high-confidence labels carefully when generating targeted adversarial examples for better transferability. Specifically, we integrate an untargeted loss into the targeted attack, pushing the adversarial example away from the original label while drawing it toward the target label. Furthermore, we suppress the other high-confidence labels in the source model using an orthogonal gradient. We validate the proposed scheme by mounting targeted attacks on the ImageNet dataset. Experiments across various scenarios show that the proposed scheme improves the transferability of state-of-the-art targeted attacks. Our code is available at: https://github.com/zengh5/Transferable_targeted_attack.
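The abstract only outlines the method, so the following is an illustrative sketch under stated assumptions, not the authors' implementation (the linked repository holds their code). Assumptions: a toy linear softmax classifier stands in for the source model; the main loss is cross-entropy toward the target minus `alpha` times cross-entropy of the original label; "suppressing other high-confidence labels" is read as minimizing the log-probabilities of the `k` most confident remaining labels; the "orthogonal gradient" is read as projecting that suppression gradient onto the orthogonal complement of the main gradient; and updates are I-FGSM-style sign steps under an L-infinity budget `eps`. All function and parameter names (`attack_step_direction`, `targeted_attack`, `alpha`, `beta`, `k`) are hypothetical.

```python
import numpy as np


def softmax(z):
    # Numerically stable softmax over a 1-D logit vector.
    e = np.exp(z - np.max(z))
    return e / e.sum()


def ce_grad(W, x, label):
    # d/dx of the cross-entropy -log p_label for a toy linear model with
    # logits = W @ x (the assumed stand-in for the source model).
    p = softmax(W @ x)
    onehot = np.zeros_like(p)
    onehot[label] = 1.0
    return W.T @ (p - onehot)


def orthogonalize(g, ref):
    # Remove from g its component parallel to ref.
    denom = float(np.dot(ref, ref))
    if denom == 0.0:
        return g
    return g - (np.dot(g, ref) / denom) * ref


def attack_step_direction(W, x, y_orig, y_target, k=2, alpha=1.0, beta=1.0):
    # Assumed main loss: CE toward the target minus alpha * CE of the original
    # label, so descending it approaches y_target and moves away from y_orig.
    g_main = ce_grad(W, x, y_target) - alpha * ce_grad(W, x, y_orig)
    # The k most confident remaining labels in the source model.
    p = softmax(W @ x)
    others = [int(c) for c in np.argsort(-p) if c not in (y_orig, y_target)]
    # Assumed suppression loss: sum of their log-probabilities,
    # using d(log p_c)/dx = -ce_grad(W, x, c).
    g_sup = np.zeros_like(x)
    for c in others[:k]:
        g_sup -= ce_grad(W, x, c)
    # Keep only the part of the suppression gradient orthogonal to g_main.
    g_sup = orthogonalize(g_sup, g_main)
    return g_main + beta * g_sup, g_main, g_sup


def targeted_attack(W, x0, y_orig, y_target, eps=0.5, step=0.05, iters=50, **kw):
    x = x0.copy()
    for _ in range(iters):
        direction, _, _ = attack_step_direction(W, x, y_orig, y_target, **kw)
        # I-FGSM-style signed descent step on the combined loss.
        x = x - step * np.sign(direction)
        # Project back into the L-infinity ball of radius eps around x0.
        x = np.clip(x, x0 - eps, x0 + eps)
    return x


if __name__ == "__main__":
    W = np.eye(3)                    # toy 3-class model: logits = x
    x0 = np.array([2.0, 1.0, 0.0])   # predicted (original) label is 0
    x_adv = targeted_attack(W, x0, y_orig=0, y_target=2)
    print(softmax(W @ x0)[2], softmax(W @ x_adv)[2])
```

Because the projected suppression gradient is orthogonal to `g_main`, adding it leaves the first-order change in the main loss unchanged (before the sign step), so in this reading suppression adjusts the direction without directly opposing the targeted and untargeted terms.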