MEMORY-BASED PARAMETERIZED SKILLS LEARNING FOR MAPLESS VISUAL NAVIGATION

- Submitted by: Yuyang Liu

- Last updated: 11 September 2019 - 11:06pm

- Document Type: Poster

- Document Year: 2019

- Presenters: Yuyang Liu

- Paper Code: 1881


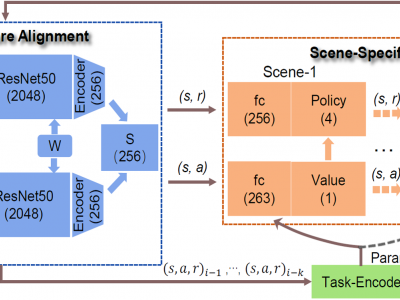

Recently proposed reinforcement learning methods for mapless visual navigation can generate an optimal policy for searching for different targets. However, most state-of-the-art deep reinforcement learning (DRL) models depend on hard rewards to learn the optimal policy, which can lead to a lack of diverse previous experiences. Moreover, these pre-trained DRL models do not generalize well to untrained tasks. To overcome these problems, we propose a Memory-based Parameterized Skills Learning (MPSL) model for mapless visual navigation. The parameterized skills in MPSL are learned to predict critic parameters for untrained tasks in actor-critic reinforcement learning, which is achieved by transferring memory sequence knowledge from a long short-term memory (LSTM) network. To generalize to untrained tasks, MPSL captures more discriminative features by using a scene-specific layer. Finally, experimental results on AI2-THOR, an indoor photorealistic simulation framework, demonstrate the effectiveness of the proposed MPSL model and its ability to generalize to untrained tasks.

Contributions:

1. Parameterizing previous knowledge facilitates generalization to untrained tasks.

2. Policy parameters change with the task parameters and the input targets.

3. A feature alignment strategy learns distinguishable features between different states.
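
The idea behind the first two contributions, predicting critic parameters for an untrained task from its task parameters, can be illustrated with a toy sketch. This is not the MPSL model: it uses no LSTM memory and no scene-specific layer, and it swaps in a simple kernel-weighted average over critic weights learned on trained tasks. The function names, the 2-D task parameters, and the weight values are all hypothetical and chosen only for illustration.

```python
import math


def predict_critic_weights(task_param, trained_tasks, bandwidth=1.0):
    """Predict critic weights for a new task (illustrative assumption, not MPSL).

    trained_tasks is a list of (task_parameter_vector, critic_weight_vector)
    pairs. The prediction is a Gaussian-kernel-weighted average of the
    trained critic weights, so nearby tasks contribute more.
    """
    kernels = []
    for params, _ in trained_tasks:
        sq_dist = sum((a - b) ** 2 for a, b in zip(task_param, params))
        kernels.append(math.exp(-sq_dist / (2.0 * bandwidth ** 2)))
    total = sum(kernels)
    dim = len(trained_tasks[0][1])
    return [
        sum(k * w[i] for k, (_, w) in zip(kernels, trained_tasks)) / total
        for i in range(dim)
    ]


def critic_value(weights, state_features):
    """Linear critic: V(s) = w . phi(s)."""
    return sum(w * f for w, f in zip(weights, state_features))


# Hypothetical data: two trained targets, each with 2-D task parameters
# and a 2-D critic weight vector learned during training.
trained = [
    ([0.0, 0.0], [1.0, 0.0]),
    ([2.0, 0.0], [0.0, 1.0]),
]

# An untrained target whose task parameters lie midway between the two.
w_new = predict_critic_weights([1.0, 0.0], trained)
v_new = critic_value(w_new, [2.0, 4.0])
```

Because the new task is equidistant from both trained tasks, the predicted critic weights are an equal blend of the two trained weight vectors. In a learned model such as the one described above, this fixed kernel would be replaced by a trained mapping from task parameters to critic parameters.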