MULTI-QUERY MULTI-HEAD ATTENTION POOLING AND INTER-TOPK PENALTY FOR SPEAKER VERIFICATION

- Citation Author(s):

- Submitted by: Min Liu

- Last updated: 6 May 2022 - 4:58am

- Document Type: Presentation Slides

- Document Year: 2022

- Event:

- Presenters: Minqiang Xu

- Paper Code: SPE-25.5

- Categories:


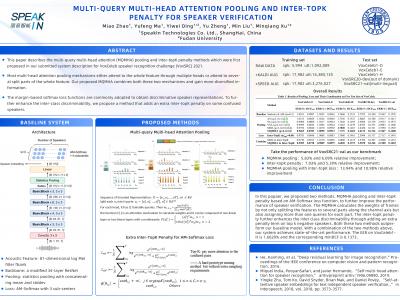

This paper describes the multi-query multi-head attention (MQMHA) pooling and inter-topK penalty methods, which were first proposed in our submitted system description for the VoxCeleb Speaker Recognition Challenge (VoxSRC) 2021. Most multi-head attention pooling mechanisms either attend to the whole feature through multiple heads or attend to several split parts of the whole feature. Our proposed MQMHA combines both mechanisms and gains more diversified information. Margin-based softmax loss functions are commonly adopted to obtain discriminative speaker representations. To further enhance inter-class discriminability, we propose a method that adds an extra inter-topK penalty on some confused speakers. By adopting both MQMHA and the inter-topK penalty, we achieved state-of-the-art performance on all of the public VoxCeleb test sets.
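The two ideas in the abstract can be illustrated with a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: the function names `mqmha_pool` and `inter_topk_logits`, the dot-product scoring, the additive form of the penalty, and all default values (`scale`, `margin`, `k`, `penalty`) are hypothetical and are not taken from the abstract. The pooling function splits the feature channels into heads and applies several queries to each head; the loss helper subtracts a margin from the target logit and adds a penalty to the K most similar non-target speakers.

```python
import numpy as np

def softmax(x, axis):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def mqmha_pool(feats, queries):
    """Sketch of multi-query multi-head attention pooling (assumed form).

    feats:   (T, D) frame-level features.
    queries: (Q, H, D // H) hypothetical query vectors, Q queries per head.
    Returns a (Q * D,) utterance-level vector of attention-weighted means.
    """
    T, D = feats.shape
    Q, H, d = queries.shape
    assert H * d == D, "heads must evenly split the feature dimension"
    parts = feats.reshape(T, H, d)                       # split channels into H heads
    scores = np.einsum("thd,qhd->qht", parts, queries)   # one score per query, head, frame
    weights = softmax(scores, axis=-1)                   # attention over time
    pooled = np.einsum("qht,thd->qhd", weights, parts)   # weighted mean per query and head
    return pooled.reshape(-1)

def inter_topk_logits(cos, labels, scale=32.0, margin=0.2, k=5, penalty=0.06):
    """Sketch of margin-based softmax logits with an inter-topK penalty.

    cos:    (N, C) cosine similarities between samples and speaker centers.
    labels: (N,) integer target speakers.
    Assumption: the penalty is added in the cosine domain to the k non-target
    speakers with the highest similarity (the "confused" speakers).
    """
    n = cos.shape[0]
    rows = np.arange(n)
    masked = cos.copy()
    masked[rows, labels] = -np.inf                        # never select the target
    topk = np.argpartition(-masked, k - 1, axis=1)[:, :k] # k most similar non-targets
    out = cos.copy()
    out[rows[:, None], topk] += penalty                   # push confused speakers harder
    out[rows, labels] -= margin                           # additive margin on the target
    return scale * out
```

The returned logits would then be passed to a standard cross-entropy loss. With a single all-zero query the attention weights become uniform, so `mqmha_pool` reduces to plain mean pooling, which is a convenient sanity check.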