MUSIC AUTO-TAGGING WITH ROBUST MUSIC REPRESENTATION LEARNED VIA DOMAIN ADVERSARIAL TRAINING

- DOI: 10.60864/xhcs-x570
- Citation Author(s):
- Submitted by: Haesun Joung
- Last updated: 6 June 2024 - 10:55am
- Document Type: Poster
- Document Year: 2024
- Event:
- Presenters: Haesun Joung
- Paper Code: AASP-P12.2
- Categories:

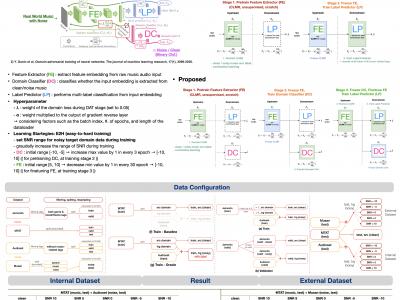

Music auto-tagging is crucial for enhancing music discovery and recommendation. Existing models in Music Information Retrieval (MIR) struggle with real-world noise, such as environmental and speech sounds in multimedia content. This study proposes a method inspired by speech-related tasks to improve music auto-tagging performance in noisy settings. The approach brings Domain Adversarial Training (DAT) to the music domain, yielding music representations that are robust to noise. Unlike previous research, it adds a pretraining phase for the domain classifier to avoid performance degradation in the subsequent phase. Adding various synthesized noisy music data improves the model’s generalization across different noise levels. The proposed architecture improves music auto-tagging performance by effectively utilizing unlabeled noisy music data. Additional experiments with supplementary unlabeled data further improve the model’s performance, underscoring its robust generalization capabilities and broad applicability.
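To make the idea of synthesized noisy music at different noise levels concrete, the sketch below mixes a clean signal with a noise signal at a chosen signal-to-noise ratio (SNR). This is only an illustration under stated assumptions: the abstract does not say how the noisy data was synthesized, and the function names `rms` and `mix_at_snr`, the use of SNR in decibels as the noise-level control, and the example SNR values are all hypothetical.

```python
import math
import random


def rms(signal):
    """Root-mean-square amplitude of a sequence of float samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def mix_at_snr(music, noise, snr_db):
    """Add `noise` to `music`, scaled so the mixture has the requested SNR.

    Assumption (not from the source): both inputs are equal-length lists
    of float samples, and noise level is controlled via SNR in decibels.
    """
    if len(music) != len(noise):
        raise ValueError("music and noise must have the same length")
    noise_rms = rms(noise)
    if noise_rms == 0.0:
        raise ValueError("noise is silent, so SNR is undefined")
    # From SNR(dB) = 20 * log10(rms_music / rms_noise), solve for the
    # noise RMS that yields the requested SNR.
    target_noise_rms = rms(music) / (10 ** (snr_db / 20))
    gain = target_noise_rms / noise_rms
    return [m + gain * n for m, n in zip(music, noise)]


# Hypothetical usage: one second of a 440 Hz tone at 16 kHz stands in for
# music, and Gaussian noise stands in for environmental sound.
random.seed(0)
SAMPLE_RATE = 16000
music = [math.sin(2 * math.pi * 440 * t / SAMPLE_RATE) for t in range(SAMPLE_RATE)]
noise = [random.gauss(0.0, 1.0) for _ in range(SAMPLE_RATE)]

# Illustrative SNR levels only; the source does not list the levels used.
noisy_versions = {snr: mix_at_snr(music, noise, snr) for snr in (0, 5, 10, 20)}
```

Lower SNR values give a noisier mixture, so a set of mixtures like `noisy_versions` covers several noise levels from a single clean clip. In a setting like the one the abstract describes, such mixtures could serve as unlabeled noisy inputs, since no tag annotations are needed to create them.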