NETWORK PRUNING USING LINEAR DEPENDENCY ANALYSIS ON FEATURE MAPS

- Submitted by: Hao Pan
- Last updated: 21 June 2021 - 10:10pm
- Document Type: Presentation Slides
- Document Year: 2021
- Presenters: Hao Pan

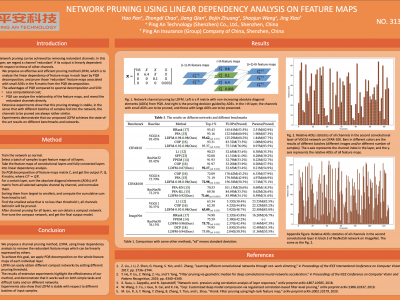

Network pruning can be achieved by removing redundant channels. In this paper, we regard a channel as ‘redundant’ if its output is linearly dependent on the outputs of other channels. Based on this criterion, we propose an efficient pruning method, named LDFM, that performs linear dependency analysis on all the feature maps of each individual layer. Specifically, for each layer, we apply QR decomposition with column pivoting (PQR) to the matrix consisting of all feature maps; channels corresponding to diagonal elements of the resulting R matrix with small absolute values are identified as redundant and pruned. Although pruning these channels causes a loss of information and hence degrades accuracy, the accuracy of the pruned network can be easily recovered by fine-tuning, because the information lost with the pruned channels can be recovered from the retained channels. Extensive experiments demonstrate that LDFM substantially improves accuracy over other methods at similar parameter counts and FLOPs, and achieves state-of-the-art results on several benchmarks and networks.
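The channel-selection step described above can be illustrated with a small sketch. This is not the authors' implementation: the function name `redundant_channels`, the `num_prune` parameter, the `(N, C, H, W)` layout, and the way the feature-map matrix is assembled (one column per channel, stacked over a batch) are all assumptions made for illustration. The only library call relied on is `scipy.linalg.qr` with `pivoting=True`.

```python
import numpy as np
from scipy.linalg import qr


def redundant_channels(feature_maps, num_prune):
    """Return the indices of the num_prune channels ranked most redundant.

    feature_maps: array of shape (N, C, H, W), one layer's outputs on a batch.
    Assumes N * H * W >= C so that R has one diagonal entry per channel.
    (Hypothetical helper; the layout and the selection rule are assumptions.)
    """
    n, c, h, w = feature_maps.shape
    # One column per channel: each column stacks that channel's maps over the batch.
    A = feature_maps.transpose(1, 0, 2, 3).reshape(c, n * h * w).T
    # Column-pivoted QR: A[:, piv] == Q @ R, with |diag(R)| non-increasing.
    _, R, piv = qr(A, mode="economic", pivoting=True)
    diag = np.abs(np.diag(R))
    # The last pivots correspond to the smallest |R_kk|, i.e. the channels
    # best explained by linear combinations of the earlier-pivoted ones.
    pruned = np.sort(piv[c - num_prune:])
    return pruned, diag


# Synthetic layer output: 4 independent channels plus 2 linear combinations.
rng = np.random.default_rng(0)
base = rng.standard_normal((8, 4, 4, 4))
ch4 = 2.0 * base[:, 0] - base[:, 1]
ch5 = base[:, 2] + 0.5 * base[:, 3]
fm = np.concatenate([base, ch4[:, None], ch5[:, None]], axis=1)

pruned, diag = redundant_channels(fm, num_prune=2)
print("channels flagged as redundant:", pruned)
print("|diag(R)|:", diag)
```

In this toy input, two diagonal entries of R are numerically zero, and one channel from each dependent group ({0, 1, 4} and {2, 3, 5}) is flagged. Which member of a group is flagged depends on the pivot order, so the sketch ranks redundancy rather than recovering the specific channels that were constructed as combinations. A fixed `num_prune` is used here for simplicity; a threshold on |R_kk| would be an equally plausible reading of "small absolute diagonal elements".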