OVERT SPEECH RETRIEVAL FROM NEUROMAGNETIC SIGNALS USING WAVELETS AND ARTIFICIAL NEURAL NETWORKS

- Submitted by: Debadatta Dash

- Last updated: 22 November 2018 - 2:18pm

- Document Type: Presentation Slides

- Document Year: 2018

- Presenters: Debadatta Dash

- Paper Code: BIO-L.4.1


Speech production involves the synchronization of neural activity between the speech centers of the brain and the oralmotor system, allowing for the conversion of thoughts into

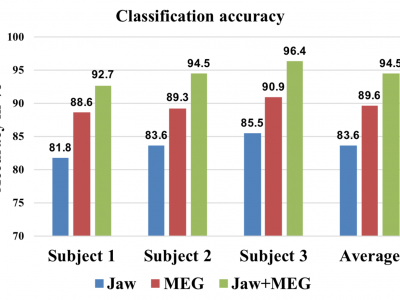

meaningful sounds. This hierarchical mechanism is hindered due to partial or complete paralysis of the articulators for patients suffering from locked-in-syndrome. These patients are in dire need of effective brain-communication interfaces (BCIs), which can at least provide a level of communication assistance. In this study, we tried to decode overt (loud) speech directly from the brain via non-invasive magnetoencephalography (MEG) signals to build the foundation for a faster, direct brain to text mapping BCI. A shallow Artificial Neural Network (ANN) was trained with wavelet features of the MEG signals for this objective. Experimental results show that a direct speech decoding is possible from MEG signals. Moreover, we found that the jaw motion and MEG signals may have complimentary information for speech decoding.
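The general idea of feeding wavelet features to a shallow network can be sketched as follows. This is an illustration only, not the authors' pipeline: the Haar wavelet, the log-RMS subband features, the single tanh hidden layer, the training settings, and the synthetic sinusoid "trials" standing in for MEG sensor data are all assumptions made for the example, and every function name is hypothetical.

```python
import numpy as np

def haar_dwt(signal, levels):
    """Multi-level Haar DWT. Returns [detail_1, ..., detail_L, approx_L]."""
    coeffs = []
    approx = np.asarray(signal, dtype=float)
    for _ in range(levels):
        if approx.size % 2:  # pad odd-length input by repeating the last sample
            approx = np.append(approx, approx[-1])
        even, odd = approx[0::2], approx[1::2]
        coeffs.append((even - odd) / np.sqrt(2))  # detail (high-pass) band
        approx = (even + odd) / np.sqrt(2)        # approximation (low-pass) band
    coeffs.append(approx)
    return coeffs

def wavelet_features(signal, levels=4):
    """Log RMS energy per subband (an assumed feature choice)."""
    bands = haar_dwt(signal, levels)
    return np.array([np.log(np.sqrt(np.mean(c ** 2)) + 1e-12) for c in bands])

def train_shallow_ann(X, y, hidden=8, epochs=300, lr=0.5, seed=0):
    """One tanh hidden layer plus softmax output, full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    n_classes = int(y.max()) + 1
    W1 = rng.normal(0.0, 0.5, (X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.5, (hidden, n_classes))
    b2 = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]  # one-hot targets
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)
        logits = H @ W2 + b2
        logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
        P = np.exp(logits)
        P = P / P.sum(axis=1, keepdims=True)
        G = (P - Y) / len(X)              # gradient of cross-entropy w.r.t. logits
        dH = (G @ W2.T) * (1.0 - H ** 2)  # backpropagate through tanh
        W2 -= lr * (H.T @ G)
        b2 -= lr * G.sum(axis=0)
        W1 -= lr * (X.T @ dH)
        b1 -= lr * dH.sum(axis=0)
    return W1, b1, W2, b2

def predict(params, X):
    W1, b1, W2, b2 = params
    return np.argmax(np.tanh(X @ W1 + b1) @ W2 + b2, axis=1)

def make_trial(freq, rng, n=256):
    """Synthetic stand-in for one single-channel trial (NOT real MEG data)."""
    t = np.arange(n) / n
    return np.sin(2 * np.pi * freq * t) + 0.5 * rng.normal(size=n)

rng = np.random.default_rng(0)
y = np.array([0, 1] * 20)  # two hypothetical classes
X = np.array([wavelet_features(make_trial(4 if c == 0 else 32, rng)) for c in y])
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize features
params = train_shallow_ann(X, y)
print("training accuracy:", np.mean(predict(params, X) == y))
```

In practice, a wavelet library and an established neural network toolkit would replace the hand-written transform and training loop, and features would be computed per sensor and evaluated on held-out trials rather than on the training set.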