Documents

Poster

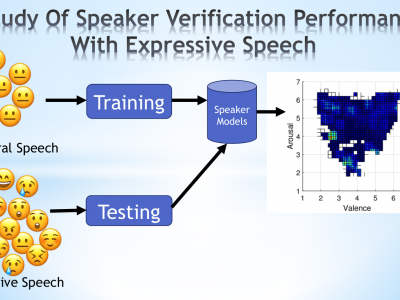

A study of speaker verification performance with expressive speech

- Submitted by: Carlos Busso

- Last updated: 20 May 2020 - 10:37am

- Document Type: Poster

- Document Year: 2017

- Presenters: Srinivas Parthasarathy


Expressive speech introduces variations in the acoustic features that affect the performance of speech technologies such as speaker verification systems. It is important to identify the range of emotions over which speaker verification can be performed reliably. This paper studies the performance of a speaker verification system as a function of emotion. Instead of categorical classes such as happiness or anger, which have substantial intra-class variability, we use the continuous attributes arousal, valence, and dominance, which facilitate the analysis. We evaluate a speaker verification system trained with the i-vector framework with a probabilistic linear discriminant analysis (PLDA) back-end. The study relies on a subset of the MSP-PODCAST corpus, which has naturalistic recordings from 40 speakers. We train the system with neutral speech, which creates a mismatch with the expressive speech in the testing set. The results show that speaker verification errors increase when the values of the emotional attributes increase. For neutral to moderate values of arousal, valence, and dominance, the speaker verification performance is reliable. These results are also observed when we artificially force the sentences to have the same duration.
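As a rough illustration of what "performance as a function of emotion" could look like in practice, the sketch below groups verification trials by the value of one emotional attribute and computes an error rate per group. It is not the authors' evaluation code. The choice of equal error rate (EER) as the metric, the function names `equal_error_rate` and `eer_by_attribute`, the bin edges, and the toy trial data are all assumptions made for the example; the scores stand in for the output of any verification back-end.

```python
import numpy as np


def equal_error_rate(scores, labels):
    """Approximate EER for verification scores (higher = more likely same speaker).

    labels: True for target (same-speaker) trials, False for non-target trials.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    target = scores[labels]
    nontarget = scores[~labels]
    thresholds = np.unique(scores)
    # False rejection rate: target trials scored below the threshold.
    frr = np.array([(target < t).mean() for t in thresholds])
    # False acceptance rate: non-target trials scored at or above the threshold.
    far = np.array([(nontarget >= t).mean() for t in thresholds])
    # Take the threshold where the two error rates are closest.
    idx = np.argmin(np.abs(far - frr))
    return (far[idx] + frr[idx]) / 2.0


def eer_by_attribute(scores, labels, attribute, bin_edges):
    """EER per bin of an emotional attribute (e.g. arousal) of the test utterance.

    Bins are half-open intervals [low, high). Bins lacking either target or
    non-target trials are skipped, since EER is undefined for them.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    attribute = np.asarray(attribute, dtype=float)
    results = {}
    for low, high in zip(bin_edges[:-1], bin_edges[1:]):
        mask = (attribute >= low) & (attribute < high)
        if labels[mask].any() and (~labels[mask]).any():
            results[(low, high)] = equal_error_rate(scores[mask], labels[mask])
    return results


# Hypothetical toy trials: scores, target/non-target labels, and a
# normalized attribute value for each test utterance.
toy_scores = [0.9, 0.8, 0.1, 0.2, 0.6, 0.4, 0.5, 0.7]
toy_labels = [True, True, False, False, True, True, False, False]
toy_attribute = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]

per_bin = eer_by_attribute(toy_scores, toy_labels, toy_attribute, [0.0, 0.5, 1.0])
for (low, high), eer in per_bin.items():
    print(f"attribute in [{low}, {high}): EER = {eer:.2f}")
```

Running the same binning separately for arousal, valence, and dominance would give one error curve per attribute, which is one way to read off the range of values over which verification stays reliable.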