TEXT RECOGNITION IN IMAGES BASED ON TRANSFORMER WITH HIERARCHICAL ATTENTION

- Citation Author(s):
- Submitted by: yiwei zhu
- Last updated: 18 September 2019 - 10:32am
- Document Type: Poster
- Document Year: 2019
- Event:
- Paper Code: 2933
- Categories:
- Keywords:

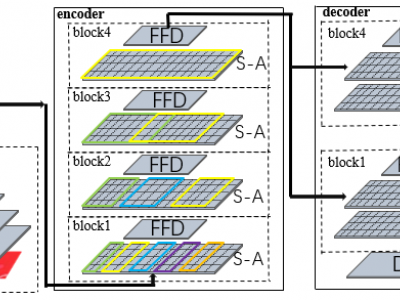

Recognizing text in images has been an active research topic in computer vision for decades because of its many applications. However, variations in text appearance, such as perspective distortion, text-line curvature, and text style, make recognition difficult. Inspired by the Transformer structure, which has achieved outstanding performance in many natural language processing applications, we propose a new Transformer-like structure for text recognition in images, referred to as the Hierarchical Attention Transformer Network (HATN). The entire network can be trained end-to-end using only images and sentence-level annotations. A new hierarchical attention mechanism is proposed to learn the character-level, word-level, and sentence-level contexts more efficiently and sufficiently. Extensive experiments on seven public datasets with regular and irregular text arrangements demonstrate that the proposed HATN achieves accurate recognition results with high efficiency.