VID2SPEECH: SPEECH RECONSTRUCTION FROM SILENT VIDEO

- Submitted by: Ariel Ephrat

- Last updated: 27 February 2017 - 3:05pm

- Document Type: Poster

- Document Year: 2017

- Presenters: Ariel Ephrat

- Paper Code: 1239

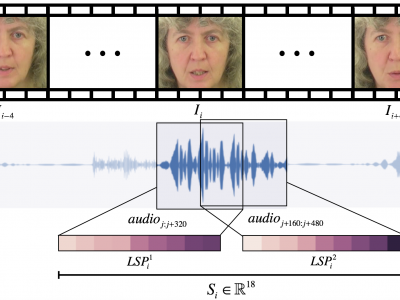

Speechreading is a notoriously difficult task for humans to perform. In this paper we present an end-to-end model based on a convolutional neural network (CNN) for generating an intelligible acoustic speech signal from silent video frames of a speaking person. The proposed CNN generates sound features for each frame based on its neighboring frames. Waveforms are then synthesized from the learned speech features to produce intelligible speech. By leveraging the automatic feature learning capabilities of a CNN, we obtain state-of-the-art word intelligibility on the GRID dataset and show promising results for learning out-of-vocabulary (OOV) words.
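
The idea of predicting sound features for each frame from its neighboring frames can be illustrated with a small sketch of how such input windows might be assembled. This is only an illustration under stated assumptions, not the model's actual preprocessing: the function name `neighbor_windows`, the `context` parameter, and the choice to repeat the first and last frames at the clip boundaries are all hypothetical and do not come from the abstract.

```python
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")


def neighbor_windows(frames: Sequence[Frame], context: int) -> List[List[Frame]]:
    """Pair every frame with `context` neighbors on each side.

    Hypothetical helper: at the start and end of the clip, the first or
    last frame is repeated so every window has 2 * context + 1 entries.
    That boundary rule is an assumption made for this sketch.
    """
    if context < 0:
        raise ValueError("context must be non-negative")

    last = len(frames) - 1
    windows: List[List[Frame]] = []
    for t in range(len(frames)):
        # Clamp each neighbor index into the valid range [0, last].
        window = [
            frames[min(max(t + offset, 0), last)]
            for offset in range(-context, context + 1)
        ]
        windows.append(window)
    return windows
```

Each returned window would then be the input from which a network predicts the sound features of its center frame. Frames can be any object here (for example image arrays), since the helper only indexes into the sequence.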