PROCESSING CONVOLUTIONAL NEURAL NETWORKS ON CACHE

- Submitted by: Joao Vieira

- Last updated: 14 May 2020 - 4:32am

- Document Type: Presentation Slides

- Document Year: 2020

- Presenters: Joao Vieira

- Paper Code: DIS-P2.7

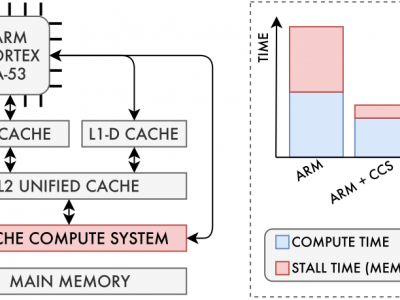

With the advent of Big Data application domains, several Machine Learning (ML) signal-processing algorithms, such as Convolutional Neural Networks (CNNs), are required to process progressively larger datasets at a great cost in terms of both compute power and memory bandwidth. Although dedicated accelerators have been developed to target this issue, they usually require moving massive amounts of data across the memory hierarchy to the processing cores, as well as low-level knowledge of how data is stored in the memory devices in order to enable in-/near-memory processing solutions. In this paper, we propose and assess a novel mechanism that operates at cache level, leveraging both the data proximity and the parallel processing capabilities enabled by dedicated fully-digital vector Functional Units (FUs). We also demonstrate the integration of the mechanism with a conventional Central Processing Unit (CPU). The obtained results show that our engine provides performance improvements on CNNs ranging from 3.92× to 16.6×.
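As a rough illustration of the kind of workload the abstract refers to, the following minimal Python sketch expresses a 2D convolution as lane-wise multiply-accumulate steps over contiguous row chunks. It is not the mechanism proposed in the paper: the names `LANES`, `vector_mac`, and `conv2d_rows` are hypothetical, and the lane count of 16 is an arbitrary assumption chosen only to show how a convolution decomposes into vector operations on neighbouring data.

```python
# Illustrative only: LANES is a hypothetical vector width, not a figure from the paper.
LANES = 16


def vector_mac(acc, a, b):
    """Lane-wise multiply-accumulate: returns acc[i] + a[i] * b[i] for each lane."""
    return [x + y * z for x, y, z in zip(acc, a, b)]


def conv2d_rows(image, kernel):
    """Valid 2D convolution (cross-correlation form, as commonly used in CNNs).

    Each output row is accumulated from contiguous slices of input rows,
    processed in chunks of at most LANES elements.
    Returns the output matrix and the number of vector MAC steps issued.
    """
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = h - kh + 1, w - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    steps = 0
    for r in range(out_h):
        for i in range(kh):
            for j in range(kw):
                weight = kernel[i][j]
                src = image[r + i][j:j + out_w]  # contiguous slice of one input row
                for c in range(0, out_w, LANES):
                    chunk = src[c:c + LANES]
                    out[r][c:c + LANES] = vector_mac(
                        out[r][c:c + LANES], chunk, [weight] * len(chunk)
                    )
                    steps += 1
    return out, steps


image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]
kernel = [[1, 0], [0, 1]]
result, steps = conv2d_rows(image, kernel)
print(result)  # [[7, 9, 11], [15, 17, 19]]
print(steps)   # 8
```

In this sketch every inner step reads a contiguous slice of one input row, which is the access pattern that makes processing close to where the data already resides attractive; how the actual engine schedules such operations is described in the slides, not here.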