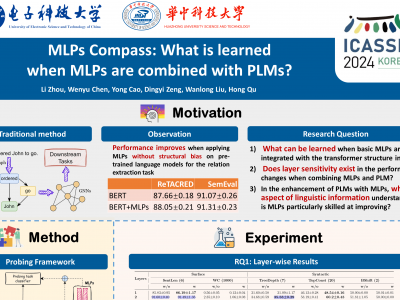

MLPs Compass: What is Learned When MLPs are Combined with PLMs?

- DOI: 10.60864/wn1n-hv65
- Submitted by: Li Zhou
- Last updated: 6 June 2024 - 10:27am
- Document Type: Poster
- Document Year: 2024
- Presenters: Li Zhou
- Paper Code: SLP-P33.2


Transformer-based pre-trained language models (PLMs) and their variants exhibit strong semantic representation capabilities, yet how much information is gained from components added to PLMs remains an open question. Motivated by recent work showing that multilayer perceptron (MLP) modules achieve robust structural capture capabilities, even outperforming Graph Neural Networks (GNNs), this paper aims to quantify whether simple MLPs can further enhance the already strong ability of PLMs to capture linguistic information. Specifically, we design a simple yet effective probing framework containing MLP components, built on the BERT architecture, and conduct extensive experiments on 10 probing tasks spanning three distinct linguistic levels. The experimental results demonstrate that MLPs can indeed enhance PLMs' comprehension of linguistic structure. Our research provides interpretable and valuable insights into designing MLP-based variants of PLMs for tasks that emphasize diverse linguistic structures.
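
To make the idea of a probing framework with MLP components more concrete, the following is a minimal sketch and not the authors' implementation. It assumes PyTorch, and it assumes the probe operates on frozen, fixed-size sentence vectors such as a pooled BERT layer output. The class name `MLPProbe`, the helper `train_probe`, the MLP width, the GELU activation, and the random stand-in features are all hypothetical choices made for illustration; the page does not specify the actual architecture or training setup.

```python
import torch
import torch.nn as nn


class MLPProbe(nn.Module):
    """Optional MLP block followed by a linear classifier over frozen vectors."""

    def __init__(self, hidden_size: int, num_classes: int, use_mlp: bool = True):
        super().__init__()
        # Hypothetical MLP block: width and activation are illustrative only.
        self.mlp = (
            nn.Sequential(
                nn.Linear(hidden_size, hidden_size),
                nn.GELU(),
                nn.Linear(hidden_size, hidden_size),
            )
            if use_mlp
            else nn.Identity()
        )
        self.classifier = nn.Linear(hidden_size, num_classes)

    def forward(self, sentence_vectors: torch.Tensor) -> torch.Tensor:
        # sentence_vectors has shape (batch, hidden_size).
        return self.classifier(self.mlp(sentence_vectors))


def train_probe(probe, features, labels, epochs=20, lr=1e-3):
    """Fit the probe on fixed features and return its training accuracy."""
    optimizer = torch.optim.Adam(probe.parameters(), lr=lr)
    loss_fn = nn.CrossEntropyLoss()
    for _ in range(epochs):
        optimizer.zero_grad()
        loss = loss_fn(probe(features), labels)
        loss.backward()
        optimizer.step()
    with torch.no_grad():
        predictions = probe(features).argmax(dim=-1)
    return (predictions == labels).float().mean().item()


torch.manual_seed(0)
# Random stand-ins for frozen sentence representations (768 = BERT-base width).
# Real probing would use encoder outputs and labels from a probing task.
features = torch.randn(64, 768)
labels = torch.randint(0, 3, (64,))

baseline_acc = train_probe(MLPProbe(768, 3, use_mlp=False), features, labels)
mlp_acc = train_probe(MLPProbe(768, 3, use_mlp=True), features, labels)
print(f"linear probe: {baseline_acc:.2f}, MLP probe: {mlp_acc:.2f}")
```

Because the features and labels here are random, the printed accuracies say nothing about the poster's findings. The sketch only shows the shape of the comparison: the same frozen representations are probed once with a plain linear classifier and once with an MLP block in front of it, and the difference on held-out probing data would be the quantity of interest.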