BLEND-RES^2NET: Blended Representation Space by Transformation of Residual Mapping with Restrained Learning For Time Series Classification

- Submitted by: Arijit Ukil

- Last updated: 22 June 2021 - 6:31am

- Document Type: Presentation Slides

- Document Year: 2021

- Presenters: Arijit Ukil

- Paper Code: MLSP-29.3

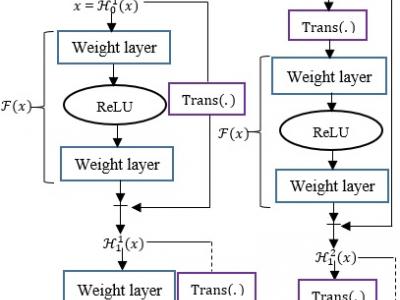

Common problems in time series classification, such as insufficient training instances, demand novel deep neural architectures that deliver consistent and accurate performance. A Deep Residual Network (ResNet) learns through H(x) = F(x) + x, where F(x) is a nonlinear function. We propose Blend-Res2Net, which blends two different representation spaces, H^1(x) = F(x) + Trans(x) and H^2(x) = F(Trans(x)) + x, where Trans(∙) denotes the transformation function, with the intention of learning over a richer representation that captures both temporal and spectral signatures. This richer representation helps in understanding the complex structure of time series signals, but its added sophistication may incur higher generalization loss. Hence, the complexity of the deep network is adapted by the proposed novel restrained learning, which introduces dynamic estimation of the network depth. The efficacy of Blend-Res2Net is validated through a series of ablation experiments on the publicly available UCR benchmark time series archive. We further demonstrate the superior performance of Blend-Res2Net over baselines and state-of-the-art algorithms, including 1-NN-DTW, HIVE-COTE, ResNet, InceptionTime, ROCKET, DMS-CNN, and TS-Chief.
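The three mappings named in the abstract can be compared side by side in a small sketch. Everything concrete here is an assumption made for illustration: the abstract does not say what Trans(∙) or F(∙) are, so `dft_magnitude` (a normalised discrete Fourier transform magnitude, chosen because the abstract mentions spectral signatures) and `toy_residual_fn` (a tanh over a moving average) are hypothetical stand-ins, and the way the two spaces are finally blended is left out because the abstract does not describe it.

```python
import cmath
import math
from typing import Callable, List

Signal = List[float]
Mapping = Callable[[Signal], Signal]


def dft_magnitude(x: Signal) -> Signal:
    """Hypothetical Trans(.): normalised DFT magnitude, same length as x."""
    n = len(x)
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))) / n
        for k in range(n)
    ]


def toy_residual_fn(x: Signal) -> Signal:
    """Hypothetical F(.): tanh of a 3-point moving average, edges repeated."""
    padded = [x[0]] + list(x) + [x[-1]]
    return [
        math.tanh((padded[i] + padded[i + 1] + padded[i + 2]) / 3.0)
        for i in range(len(x))
    ]


def add(a: Signal, b: Signal) -> Signal:
    """Element-wise sum of two equal-length signals."""
    return [u + v for u, v in zip(a, b)]


def resnet_mapping(x: Signal, f: Mapping) -> Signal:
    """Standard residual mapping: H(x) = F(x) + x."""
    return add(f(x), x)


def blend_h1(x: Signal, f: Mapping, trans: Mapping) -> Signal:
    """First representation space: H^1(x) = F(x) + Trans(x)."""
    return add(f(x), trans(x))


def blend_h2(x: Signal, f: Mapping, trans: Mapping) -> Signal:
    """Second representation space: H^2(x) = F(Trans(x)) + x."""
    return add(f(trans(x)), x)


# One period of a sine wave sampled at 8 points as a toy time series.
x = [math.sin(2 * math.pi * t / 8) for t in range(8)]

h = resnet_mapping(x, toy_residual_fn)
h1 = blend_h1(x, toy_residual_fn, dft_magnitude)
h2 = blend_h2(x, toy_residual_fn, dft_magnitude)
```

In this sketch, H^1 replaces the identity shortcut with the transformed input, while H^2 keeps the identity shortcut and feeds the transformed input to F. If Trans(∙) is the identity, both reduce to the ordinary ResNet mapping.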