Research Manuscript

Designing Transformer networks for sparse recovery of sequential data using deep unfolding

- Submitted by: Brent De Weerdt
- Last updated: 19 May 2023, 10:59am
- Document Type: Research Manuscript
- Document Year: 2023

- Paper Code: MLSP-L7.5

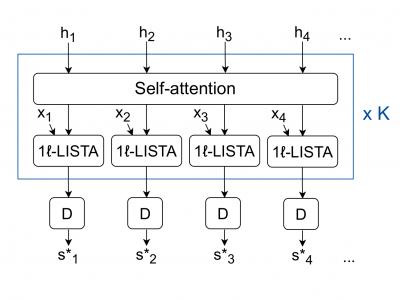

Deep unfolding models are designed by unrolling the iterations of an optimization algorithm into the layers of a deep learning network. These models have shown faster convergence and higher performance than the original optimization algorithms. Additionally, because they incorporate domain knowledge from the optimization algorithm, they need much less training data than generic deep networks to learn efficient representations. Current deep unfolding networks for sequential sparse recovery are recurrent neural networks (RNNs), which exploit the similarity between consecutive signals. We redesign the optimization problem to exploit correlations across the whole sequence; unfolding this problem yields a Transformer architecture. We apply our model to video frame reconstruction from low-dimensional measurements and show that it outperforms state-of-the-art deep unfolding RNN and Transformer models, as well as a traditional Vision Transformer, on several video datasets.
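As a generic illustration of what "unrolling an optimization algorithm" means, here is a minimal sketch that unfolds ISTA (iterative soft-thresholding) for single-signal sparse recovery, y = A x, into a fixed number of layers, in the style of LISTA. This is not the authors' Transformer model, whose optimization problem is not given here. The function names (`soft_threshold`, `ista`, `init_unfolded_params`, `unfolded_forward`), the regularization weight `lam`, and the per-layer parameters `W_k`, `S_k`, `theta_k` are hypothetical choices for illustration. The layers are initialized to plain ISTA values; training them by backpropagation is omitted.

```python
import numpy as np


def soft_threshold(v, theta):
    """Proximal operator of theta * ||x||_1: shrink every entry toward zero by theta."""
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)


def ista(y, A, lam, n_iter):
    """Classical ISTA for min_x 0.5 * ||y - A x||_2^2 + lam * ||x||_1."""
    L = np.linalg.norm(A, 2) ** 2  # Lipschitz constant of the data-term gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        # Gradient step on the data term, then soft-thresholding.
        x = soft_threshold(x + A.T @ (y - A @ x) / L, lam / L)
    return x


def init_unfolded_params(A, lam, n_layers):
    """Hypothetical helper: one (W_k, S_k, theta_k) triple per layer, initialized
    to the ISTA values. In a trained unfolded network these would be learned."""
    L = np.linalg.norm(A, 2) ** 2
    n = A.shape[1]
    W = A.T / L                    # maps measurements into signal space
    S = np.eye(n) - (A.T @ A) / L  # mixes in the previous layer's estimate
    return [(W.copy(), S.copy(), lam / L) for _ in range(n_layers)]


def unfolded_forward(y, params):
    """Forward pass of the unfolded network: layer k computes
    x <- soft_threshold(W_k y + S_k x, theta_k), i.e. one ISTA iteration."""
    x = np.zeros(params[0][1].shape[0])
    for W, S, theta in params:
        x = soft_threshold(W @ y + S @ x, theta)
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    m, n, k = 20, 50, 3  # measurements, signal length, nonzeros
    A = rng.standard_normal((m, n)) / np.sqrt(m)
    x_true = np.zeros(n)
    x_true[rng.choice(n, size=k, replace=False)] = rng.standard_normal(k)
    y = A @ x_true

    params = init_unfolded_params(A, lam=0.01, n_layers=10)
    x_hat = unfolded_forward(y, params)
    # Before training, 10 unfolded layers should match 10 ISTA iterations
    # up to floating-point rounding.
    print(np.allclose(x_hat, ista(y, A, lam=0.01, n_iter=10)))
```

Because each layer has its own untied parameters, training can tune them so that a few layers reach an estimate comparable to many ISTA iterations. The sequential models described in the abstract extend this idea roughly as follows: an RNN-style unfolding couples each frame's estimate to the previous frame, whereas the proposed design couples the estimates across the whole sequence.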