EQUALIZATION MATCHING OF SPEECH RECORDINGS IN REAL-WORLD ENVIRONMENTS

- Submitted by: Francois Germain
- Last updated: 19 March 2016 - 10:11am
- Document Type: Poster
- Document Year: 2016
- Presenters: Francois G. Germain


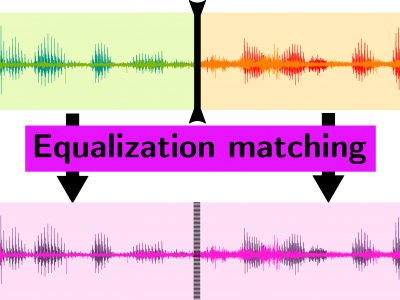

When different parts of speech content, such as voice-overs and narration, are recorded in real-world environments with different acoustic properties and background noise, the difference in sound quality between the recordings is typically quite audible and therefore undesirable. We propose an algorithm to equalize multiple such speech recordings so that they sound as if they were recorded in the same environment. Because the timbral content of the speech and that of the background noise typically differ considerably, simple equalization matching results in a noticeable mismatch in the output signals: a single equalization filter affects both timbres equally and thus cannot disambiguate the competing matching equations of each source. We propose leveraging speech enhancement methods to separate speech and background noise, independently apply equalization filtering to each source, and recombine the outputs. By independently equalizing the separated sources, our method is better able to disambiguate the matching equations associated with each source. As a result, the matched signals are perceptually very similar. Additionally, because the background noise is retained in the final output signals, most artifacts from speech enhancement methods are considerably reduced and, in general, perceptually masked. Subjective listening tests show that our approach significantly outperforms simple equalization matching.
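The separate, equalize, and recombine pipeline described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the STFT settings, the quantile-based noise estimate, the Wiener-like mask standing in for "speech enhancement", and the per-bin gain rule (square root of the ratio of long-term average power spectra) are all assumptions chosen for brevity.

```python
import numpy as np

def stft(x, n_fft=512, hop=256):
    """Hann-windowed STFT; returns an array of shape (frames, n_fft // 2 + 1)."""
    window = np.hanning(n_fft + 1)[:-1]  # periodic Hann: overlaps sum to 1 at 50% hop
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)

def istft(spec, n_fft=512, hop=256):
    """Overlap-add inverse of stft (exact except in the first and last half frame)."""
    frames = np.fft.irfft(spec, n=n_fft, axis=1)
    out = np.zeros(hop * (len(frames) - 1) + n_fft)
    for i, frame in enumerate(frames):
        out[i * hop:i * hop + n_fft] += frame
    return out

def separate(spec, noise_quantile=0.1):
    """Hypothetical stand-in for a speech enhancement method.

    Assumption: the quietest frames contain background noise only. Their average
    power spectrum drives a Wiener-like mask that splits the spectrogram into a
    speech estimate and a noise estimate that sum back to the input.
    """
    power = np.abs(spec) ** 2
    frame_energy = power.sum(axis=1)
    quiet = frame_energy <= np.quantile(frame_energy, noise_quantile)
    noise_psd = power[quiet].mean(axis=0)
    mask = np.clip(1.0 - noise_psd / np.maximum(power, 1e-12), 0.0, 1.0)
    return mask * spec, (1.0 - mask) * spec

def eq_gains(src_spec, ref_spec, eps=1e-12):
    """Per-bin gains that map the source's average power spectrum onto the reference's."""
    src_psd = (np.abs(src_spec) ** 2).mean(axis=0)
    ref_psd = (np.abs(ref_spec) ** 2).mean(axis=0)
    return np.sqrt((ref_psd + eps) / (src_psd + eps))

def match_recordings(source, reference, n_fft=512, hop=256):
    """Equalize `source` toward `reference`, treating speech and noise separately."""
    src = stft(source, n_fft, hop)
    ref = stft(reference, n_fft, hop)
    src_speech, src_noise = separate(src)
    ref_speech, ref_noise = separate(ref)
    # One equalization filter per source, then recombine so the noise is retained.
    matched = (eq_gains(src_speech, ref_speech) * src_speech
               + eq_gains(src_noise, ref_noise) * src_noise)
    return istft(matched, n_fft, hop)
```

Calling `match_recordings(source, reference)` on two mono sample arrays returns a version of `source` whose speech and noise components have each been filtered toward the corresponding component of `reference`. A practical system would likely smooth the gains across frequency and use a stronger separation method; the sketch only shows why two filters can satisfy matching conditions that a single filter cannot.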