

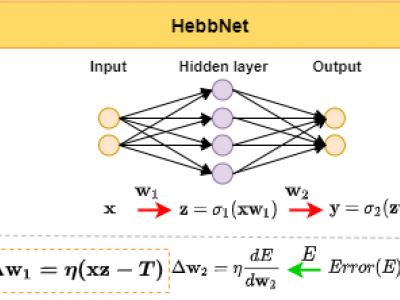

HEBBNET: A SIMPLIFIED HEBBIAN LEARNING FRAMEWORK TO DO BIOLOGICALLY PLAUSIBLE LEARNING

- Citation Author(s):
- Submitted by: Manas Gupta
- Last updated: 20 August 2021 - 4:08am
- Document Type: Presentation Slides
- Document Year: 2021
- Event:
- Presenters: Manas Gupta
- Categories:


Backpropagation has revolutionized neural network training; however, its biological plausibility remains questionable. Hebbian learning, a completely unsupervised and feedback-free learning technique, is a strong contender for a biologically plausible alternative. So far, however, it has neither matched backprop in accuracy nor offered a simple training procedure. In this work, we introduce a new Hebbian learning based neural network called HebbNet. At the heart of HebbNet is an improved Hebbian approach that adds an updated activation threshold and gradient sparsity to the first principles of Hebbian learning. Together, these yield an efficient Hebbian approach with a simple training procedure. The improved Hebbian rule also improves training dynamics: it reduces the number of training epochs from 1500 to 200 and turns training from a two-step process into a one-step process. We also reduce reliance on heuristics, cutting the number of hyperparameters from 5 to 1 and the number of hyperparameter-search runs from 12,600 to 13. Despite these simplifications, HebbNet still achieves strong test performance on the MNIST and CIFAR-10 datasets relative to the state of the art.
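The abstract does not spell out HebbNet's update rule, so the following is only a rough illustration of the two ingredients it names, under our own assumptions. It shows a generic Hebbian step for one linear layer. The "activation threshold" is assumed to silence any unit whose activity does not exceed `threshold`. "Gradient sparsity" is assumed to mean that only the `k` most active units receive a weight update. The function name `sparse_thresholded_hebbian_step` and its parameters are hypothetical. Like plain Hebbian learning, the step uses no labels and no feedback signal. It also omits the weight normalization that practical Hebbian rules usually need to keep weights bounded.

```python
from typing import List

Matrix = List[List[float]]


def sparse_thresholded_hebbian_step(
    weights: Matrix,
    x: List[float],
    lr: float = 0.01,
    threshold: float = 0.0,
    k: int = 1,
) -> Matrix:
    """Return updated weights after one Hebbian step on input x.

    Hypothetical illustration, not the HebbNet rule.
    weights[j][i] connects input i to output unit j.
    """
    # Post-synaptic activity of each output unit: y_j = sum_i w_ji * x_i.
    y = [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    # Activation threshold (assumed form): units at or below it are set to 0.
    y = [yj if yj > threshold else 0.0 for yj in y]

    # Sparsity (assumed form): only the k most active, non-silent units learn.
    ranked = sorted(range(len(y)), key=lambda j: y[j], reverse=True)
    winners = {j for j in ranked[:k] if y[j] > 0.0}

    # Plain Hebbian update dw_ji = lr * y_j * x_i, using only local activity.
    new_weights = []
    for j, row in enumerate(weights):
        if j in winners:
            new_weights.append([w + lr * y[j] * xi for w, xi in zip(row, x)])
        else:
            new_weights.append(list(row))  # copy; the input is not mutated
    return new_weights
```

For example, with three output units and `k=1`, only the most active unit's weights move toward the input:

```python
W = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(sparse_thresholded_hebbian_step(W, [1.0, 0.0], lr=0.1, threshold=0.2, k=1))
# -> [[1.1, 0.0], [0.0, 1.0], [0.5, 0.5]]
```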