Documents

Poster

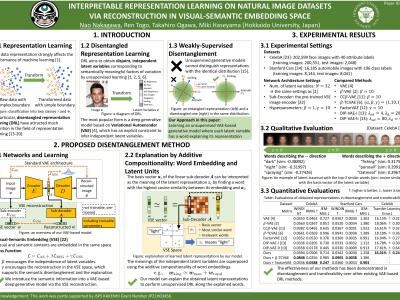

Interpretable representation learning on natural image datasets via reconstruction in visual-semantic embedding space

- Citation Author(s):

- Submitted by:

- Nao Nakagawa

- Last updated:

- 27 September 2021 - 11:29pm

- Document Type:

- Poster

- Document Year:

- 2021

- Event:

- Presenters:

- Nao Nakagawa

- Paper Code:

- ARS-6.3

- Categories:

- Keywords:

- Log in to post comments

Unsupervised learning of disentangled representations is a core task for discovering interpretable factors of variation in an image dataset. We propose a novel method that can learn disentangled representations with semantic explanations on natural image datasets. In our method, we guide the representation learning of a variational autoencoder (VAE) via reconstruction in a visual-semantic embedding (VSE) space to leverage the semantic information of image data and explain the learned latent representations in an unsupervised manner. We introduce a semantic sub-encoder and a linear semantic sub-decoder to learn word vectors corresponding to the latent variables to explain factors of variation in the language form. Each basis vector (column) of the linear semantic sub-decoder corresponds to each latent variable, and we can interpret the basis vectors as word vectors indicating the meanings of the latent representations. By introducing the sub-encoder and the sub-decoder, our model can learn latent representations that are not just disentangled but interpretable. Comparing with other state-of-the-art unsupervised disentangled representation learning methods, we observe significant improvements in the disentanglement and the transferability of latent representations.