Poster

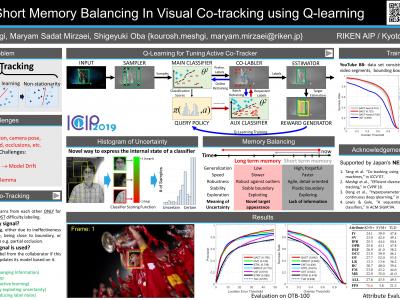

Long & Short Memory Balancing In Visual Co-tracking using Q-learning

- Submitted by: Kourosh Meshgi
- Last updated: 20 September 2019, 9:57am
- Document Type: Poster
- Document Year: 2019
- Presenters: Kourosh Meshgi, Maryam Sadat Mirzaei
- Paper Code: WP.PC.2

Employing one or more additional classifiers to break the self-learning loop in tracking-by-detection has gained considerable attention. Most such trackers merely exploit this redundancy to counter the label errors that accumulate in the tracking loop, and they suffer both from high computational complexity and from tracking challenges that can disrupt all classifiers at once (e.g., temporary occlusions). We propose the active co-tracking framework, in which the main classifier of the tracker labels the samples of the video sequence and consults the auxiliary classifier only when it is uncertain. Depending on the source of the uncertainty and on how the two classifiers differ (e.g., in accuracy, speed, or update frequency), different policies are needed for exchanging information between them. Here, we introduce a reinforcement learning approach that finds the appropriate policy by considering the state of the tracker in a specific sequence. The proposed method yields promising results compared with the best tracking-by-detection approaches.
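The abstract combines two mechanisms: a main classifier that consults an auxiliary classifier only when uncertain, and a reinforcement learner (Q-learning, per the title) that picks an information-exchange policy from the tracker's state. The Python sketch below illustrates both under stated assumptions. The action names in `ACTIONS`, the "probability within `margin` of 0.5" uncertainty rule, the `(main_uncertain, classifiers_agree)` state encoding, and the reward are all hypothetical placeholders, not the authors' design; only the overall shape (uncertainty-gated consultation plus tabular Q-learning over exchange policies) follows the text.

```python
import random
from collections import defaultdict

# Hypothetical information-exchange policies between the two classifiers.
# These names are illustrative assumptions, not the authors' action set.
ACTIONS = ("main_only", "consult_aux", "aux_updates_main", "main_updates_aux")


def is_uncertain(p_main: float, margin: float = 0.2) -> bool:
    """Assumed gate: the main classifier counts as uncertain when its
    foreground probability lies within `margin` of 0.5."""
    return abs(p_main - 0.5) < margin


def label_sample(p_main: float, p_aux: float, margin: float = 0.2) -> int:
    """Label a sample with the main classifier, consulting the auxiliary
    classifier only when the main one is uncertain (1 = target, 0 = background)."""
    p = p_aux if is_uncertain(p_main, margin) else p_main
    return int(p >= 0.5)


class QPolicy:
    """Tabular Q-learning over discrete tracker states (hypothetical encoding)."""

    def __init__(self, actions=ACTIONS, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.actions = actions
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.q = defaultdict(float)  # (state, action) -> estimated return
        self.rng = random.Random(seed)

    def choose(self, state):
        # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
        # On ties, max() returns the first action in self.actions.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        # Q-learning target: r + gamma * max_a' Q(s', a').
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        td_error = reward + self.gamma * best_next - self.q[(state, action)]
        self.q[(state, action)] += self.alpha * td_error
        return self.q[(state, action)]


if __name__ == "__main__":
    policy = QPolicy()
    # Toy transition with made-up states and reward, only to show one update.
    s = (True, False)        # main classifier uncertain, classifiers disagree
    a = policy.choose(s)
    s_next = (False, True)   # main classifier confident, classifiers agree
    print(a, policy.update(s, a, reward=1.0, next_state=s_next))
```

In a real tracker the reward would come from tracking quality on each frame (for example, bounding-box overlap when ground truth is available during training); that choice, like the state encoding, is an assumption here. A tabular learner is used only because it is the simplest correct form of Q-learning for a small, discrete state space.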