Mitigating Data Injection Attacks on Federated Learning

- DOI: 10.60864/8ppb-gr70
- Submitted by: Or Shalom
- Last updated: 6 June 2024, 10:32am
- Document Type: Presentation Slides
- Document Year: 2024
- Presenters: Or Shalom
- Paper Code: SPCOM-L1.4


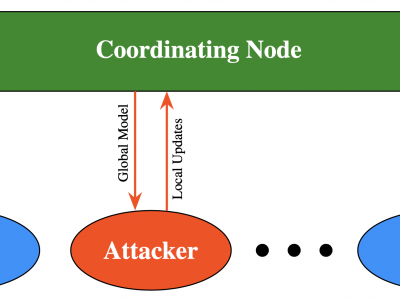

Federated learning is a technique that allows multiple entities to collaboratively train models on their local data without compromising the privacy of that data. Despite this advantage, federated learning is susceptible to false data injection attacks: a malicious entity that controls some of the agents in the network can manipulate the learning process and steer it toward a suboptimal model. Addressing such data injection attacks is therefore a significant research challenge for federated learning systems. In this paper, we propose a novel approach to detect and mitigate data injection attacks on federated learning systems. Our mitigation strategy is a local scheme, performed by the coordinating node during a single training run, which allows attacks to be mitigated while the algorithm is still converging. Whenever an agent is suspected of being an attacker, its data is ignored for a certain period, and this decision is periodically re-evaluated. We prove that, provided truthful agents form a majority, with probability one all attackers are ignored after a finite time, while the probability of ignoring a truthful agent becomes zero. Simulations show that once the coordinating node detects and isolates all the attackers, the model recovers and converges to the truthful model.
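The ignore-then-re-evaluate mechanism described above can be illustrated with a small sketch. The abstract does not specify the detection statistic, so this example swaps in an assumed rule: the coordinator flags any active agent whose update lies farther than a fixed `threshold` from the coordinate-wise median of the active agents' updates. This is not the authors' detector. The class `Coordinator`, its parameters `threshold` and `ignore_period`, and the toy `simulate` problem are all hypothetical. The median is only a sensible reference while truthful agents form a majority, which mirrors the majority condition in the abstract.

```python
import numpy as np


class Coordinator:
    """Hypothetical coordinating node that temporarily ignores suspected attackers."""

    def __init__(self, n_agents: int, threshold: float, ignore_period: int):
        self.threshold = threshold          # assumed detection threshold (not from the paper)
        self.ignore_period = ignore_period  # number of rounds a suspected agent is ignored
        # First round in which each agent is considered again (0 = never ignored).
        self.ignored_until = np.zeros(n_agents, dtype=int)

    def aggregate(self, t: int, updates: np.ndarray):
        """Average the trusted agents' updates for round t; also return the trusted mask."""
        active = self.ignored_until <= t
        if not active.any():
            active = np.ones_like(active)  # fallback: re-evaluate every agent
        # Assumed detector: coordinate-wise median of active agents as a robust reference,
        # valid only while truthful agents are a majority of the active set.
        reference = np.median(updates[active], axis=0)
        distance = np.linalg.norm(updates - reference, axis=1)
        # Agents whose ignore period has expired are active again and re-tested here.
        suspected = active & (distance > self.threshold)
        self.ignored_until[suspected] = t + self.ignore_period
        trusted = active & ~suspected
        if not trusted.any():
            return reference, trusted
        return updates[trusted].mean(axis=0), trusted


def simulate(rounds: int = 200, seed: int = 0):
    """Toy problem: truthful agents send noisy gradients of 0.5 * ||w - w_star||^2."""
    rng = np.random.default_rng(seed)
    n_truthful, n_attackers, dim = 5, 2, 3
    w_star = np.array([1.0, -2.0, 0.5])
    w = np.zeros(dim)
    coord = Coordinator(n_truthful + n_attackers, threshold=1.0, ignore_period=10)
    trusted = None
    for t in range(rounds):
        grad = w - w_star
        honest = grad + 0.05 * rng.standard_normal((n_truthful, dim))
        injected = np.tile(grad + 5.0, (n_attackers, 1))  # attackers inject a constant bias
        agg, trusted = coord.aggregate(t, np.vstack([honest, injected]))
        w = w - 0.1 * agg  # gradient step using only the trusted agents' average
    return w, w_star, trusted
```

In this toy setting the two biased agents are flagged in the first round, are re-tested each time their ignore period expires, and are flagged again, so the iterate `w` ends up close to `w_star`. A fixed threshold is the simplest choice for illustration; a real detector would need to account for the noise level of honest updates.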