Documents

Poster

Neural Network Training Strategy to Enhance Anomaly Detection Performance: A Perspective on Reconstruction Loss Amplification

- DOI:

- 10.60864/xjdh-a913

- Citation Author(s):

- Submitted by:

- YeongHyeon Park

- Last updated:

- 30 March 2024 - 2:57am

- Document Type:

- Poster

- Document Year:

- 2024

- Event:

- Presenters:

- YeongHyeon Park

- Paper Code:

- MLSP-P32.9

- Categories:

- Log in to post comments

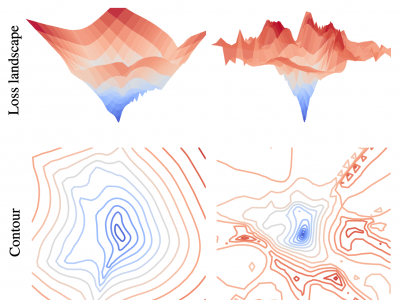

Unsupervised anomaly detection (UAD) is a widely adopted approach in industry due to rare anomaly occurrences and data imbalance. A desirable characteristic of an UAD model is contained generalization ability which excels in the reconstruction of seen normal patterns but struggles with unseen anomalies. Recent studies have pursued to contain the generalization capability of their UAD models in reconstruction from different perspectives, such as design of neural network (NN) structure and training strategy. In contrast, we note that containing of generalization ability in reconstruction can also be obtained simply from steep-shaped loss landscape. Motivated by this, we propose a loss landscape sharpening method by amplifying the reconstruction loss, dubbed Loss AMPlification (LAMP). LAMP deforms the loss landscape into a steep shape so the reconstruction error on unseen anomalies becomes greater. Accordingly, the anomaly detection performance is improved without any change of the NN architecture. Our findings suggest that LAMP can be easily applied to any reconstruction error metrics in UAD settings where the reconstruction model is trained with anomaly-free samples only.