Scalable Mutual Information Estimation using Dependence Graphs

- Submitted by: Morteza Noshad
- Last updated: 8 May 2019 - 12:23pm
- Document Type: Presentation Slides
- Document Year: 2019
- Presenters: Alfred Hero
- Paper Code: 3844

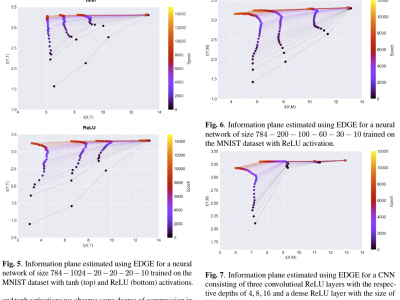

Mutual information (MI) is a widely used measure of dependency between two random variables in information theory, statistics, and machine learning. Several recently proposed MI estimators achieve the parametric MSE convergence rate. However, most of them have high computational complexity, at least $O(N^2)$. We propose a unified method for empirical non-parametric estimation of a general MI function between random vectors in $\mathbb{R}^d$ based on $N$ i.i.d. samples. The reduced-complexity MI estimator, called the ensemble dependency graph estimator (EDGE), combines randomized locality-sensitive hashing (LSH), dependency graphs, and ensemble bias-reduction methods. We prove that EDGE achieves the optimal computational complexity $O(N)$ and can achieve the optimal parametric MSE rate of $O(1/N)$ if the density is $d$ times differentiable. To the best of our knowledge, EDGE is the first non-parametric MI estimator that can achieve parametric MSE rates with linear time complexity. We illustrate the utility of EDGE for the analysis of the information plane (IP) in deep learning. Using EDGE, we shed light on a controversy over whether the compression property of the information bottleneck (IB) in fact holds for ReLU and other rectification functions in deep neural networks (DNNs).
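To make the linear-time idea concrete, here is a minimal sketch of a hash-based plug-in MI estimate. It is not the authors' EDGE implementation: it assumes a simple randomly shifted grid as the locality-sensitive hash, treats each occupied pair of X and Y buckets as an edge of a bipartite dependence graph, and omits the ensemble bias-reduction step entirely. The names `hashed_mi`, `eps`, and `seed` are hypothetical and chosen only for illustration.

```python
import math
import random
from collections import Counter


def hashed_mi(xs, ys, eps=0.5, seed=0):
    """Plug-in MI estimate (in nats) from hash-bucket counts.

    xs, ys: equal-length sequences of equal-dimension tuples of floats.
    eps: grid cell width (assumed hyperparameter, not from the source).
    Runs in O(N) time: one pass to count, one pass over occupied edges.
    """
    rng = random.Random(seed)
    d_x, d_y = len(xs[0]), len(ys[0])
    # Random per-coordinate grid offsets: the "randomized" part of the hash.
    bx = [rng.uniform(0, eps) for _ in range(d_x)]
    by = [rng.uniform(0, eps) for _ in range(d_y)]

    def bucket(v, b):
        # Nearby points fall into the same grid cell with high probability.
        return tuple(math.floor((vi + bi) / eps) for vi, bi in zip(v, b))

    n = len(xs)
    cx, cy, cxy = Counter(), Counter(), Counter()
    for x, y in zip(xs, ys):
        hx, hy = bucket(x, bx), bucket(y, by)
        cx[hx] += 1          # vertex weight on the X side
        cy[hy] += 1          # vertex weight on the Y side
        cxy[(hx, hy)] += 1   # edge weight between the two buckets

    # Sum over occupied edges only, so the cost is at most N terms.
    return sum(
        (nij / n) * math.log(nij * n / (cx[i] * cy[j]))
        for (i, j), nij in cxy.items()
    )
```

A call such as `hashed_mi(xs, ys, eps=10.0)` returns the MI of the bucketed variables, which depends strongly on `eps` and is biased upward for finite samples. According to the abstract, EDGE addresses this kind of bias with ensemble methods, which this sketch does not attempt to reproduce.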