Self-supervised learning for infant cry analysis

- Submitted by: Arsenii Gorin
- Last updated: 23 May 2023 - 11:51am
- Document Type: Poster
- Document Year: 2023
- Presenters: Arsenii Gorin
- Paper Code: ICASSP-6990

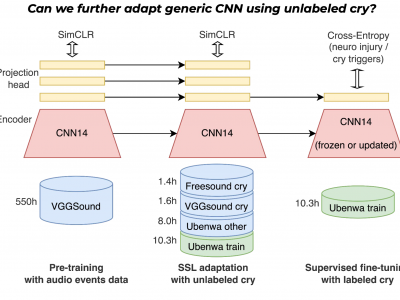

In this paper, we explore self-supervised learning (SSL) for analyzing a first-of-its-kind database of cry recordings, annotated with clinical indications, from more than a thousand newborns. Specifically, we target cry-based detection of neurological injury as well as identification of cry triggers such as pain, hunger, and discomfort.

Annotating a large database in a medical setting is expensive and time-consuming, typically requiring the collaboration of several experts over a period of years. Leveraging large amounts of unlabeled audio data to learn useful representations can lower the cost of building robust models and, ultimately, clinical solutions.

In this work, we experiment with self-supervised pre-training of a convolutional neural network on large audio datasets.

We show that pre-training with an SSL contrastive loss (SimCLR) performs significantly better than supervised pre-training on both tasks: neurological injury detection and cry trigger identification. In addition, we demonstrate further performance gains through SSL-based domain adaptation using unlabeled infant cries. We also show that using such SSL-based pre-training for adaptation to cry sounds reduces the amount of labeled data the overall system requires.
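
For readers unfamiliar with the contrastive objective named above, the following is a minimal pure-Python sketch of the NT-Xent loss that SimCLR optimizes. It is illustrative only and is not the authors' implementation: the function names, the temperature of 0.5, and the toy two-dimensional embeddings are assumptions chosen for readability. In practice the embeddings would come from a neural network applied to two augmented views of each audio clip.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent_loss(view_a, view_b, temperature=0.5):
    """NT-Xent loss over N positive pairs (view_a[i], view_b[i]).

    view_a and view_b are lists of N embedding vectors, where index i in
    both lists corresponds to two augmented views of the same clip.
    The temperature default is an assumption made for this sketch.
    """
    n = len(view_a)
    embeddings = list(view_a) + list(view_b)  # 2N embeddings in total
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # index of the other view of the same clip
        # Temperature-scaled similarities to every other embedding (self excluded).
        logits = {
            j: cosine_similarity(embeddings[i], embeddings[j]) / temperature
            for j in range(2 * n)
            if j != i
        }
        # Numerically stable log-sum-exp for the softmax denominator.
        max_logit = max(logits.values())
        log_denominator = max_logit + math.log(
            sum(math.exp(value - max_logit) for value in logits.values())
        )
        # Cross-entropy with the positive pair as the target class.
        total += log_denominator - logits[positive]
    return total / (2 * n)


# Toy example: two clips, each with two views.
aligned = nt_xent_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
mismatched = nt_xent_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

In this toy example the loss is lower when the two views of each clip map to the same embedding (`aligned`) than when they map to the other clip's embedding (`mismatched`). That is the behavior the objective rewards during pre-training.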