Poster

TOWARDS GENERATING AMBISONICS USING AUDIO-VISUAL CUE FOR VIRTUAL REALITY

- Submitted by: Aakanksha Rana

- Last updated: 7 May 2019, 1:26pm

- Document Type: Poster

- Document Year: 2019

- Presenters: Aakanksha Rana

- Paper Code: IVMSP-P5.4

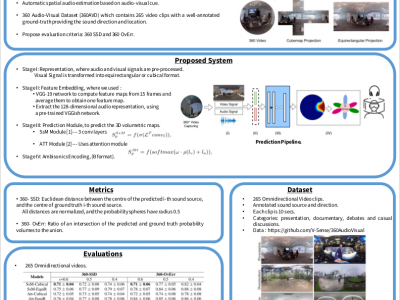

Ambisonics, i.e., full-sphere surround sound, is essential for delivering a realistic virtual reality (VR) experience with 360° visual content. While 360° video capture has recently gained tremendous momentum, estimating the corresponding spatial sound remains challenging because it requires either sound-field microphones or information about the sound-source locations. In this paper, we introduce the novel problem of generating Ambisonics for 360° videos using audio-visual cues. To this end, we first introduce a new 360° audio-visual dataset of 265 videos with annotated sound-source locations. Second, we design a pipeline for automatic Ambisonics estimation. Building on deep-learning-based audio-visual feature-embedding and prediction modules, the pipeline estimates the 3D sound-source locations and then uses these locations to encode the audio into B-format. To benchmark our dataset and pipeline, we additionally propose evaluation criteria for assessing performance with different 360° input representations. Our results demonstrate the efficacy of the proposed pipeline and open up a new area of research in 360° audio-visual analysis for future investigation.
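The abstract states that the estimated 3D sound-source locations are used to encode audio into B-format, but it does not spell out the encoder. For reference, here is a minimal sketch of standard first-order Ambisonics panning of mono sources into B-format. It is an illustration under stated assumptions, not the authors' implementation: each source is assumed to be available as a separate mono signal with a known direction, angles are in degrees (azimuth counter-clockwise from the front, elevation upward from the horizon), and the traditional FuMa channel order and weighting (W, X, Y, Z, with W scaled by 1/√2) are used. The function names `encode_source` and `encode_scene` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# First-order B-format in the traditional FuMa channel order (W, X, Y, Z).
BFormat = Tuple[List[float], List[float], List[float], List[float]]


def encode_source(signal: Sequence[float], azimuth_deg: float,
                  elevation_deg: float) -> BFormat:
    """Pan one mono signal into first-order B-format.

    Assumed conventions (illustrative, not from the paper): azimuth is measured
    counter-clockwise from the front (+X) towards the left (+Y), elevation is
    measured upward from the horizontal plane, and W carries the FuMa 1/sqrt(2) gain.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    gains = (
        1.0 / math.sqrt(2.0),          # W: omnidirectional component
        math.cos(az) * math.cos(el),   # X: front-back figure-of-eight
        math.sin(az) * math.cos(el),   # Y: left-right figure-of-eight
        math.sin(el),                  # Z: up-down figure-of-eight
    )
    w, x, y, z = ([g * s for s in signal] for g in gains)
    return w, x, y, z


def encode_scene(sources: Sequence[Tuple[Sequence[float], float, float]]) -> BFormat:
    """Mix several (signal, azimuth_deg, elevation_deg) sources into one B-format scene.

    Ambisonic encoding is linear, so the scene is the sample-wise sum of the
    per-source encodings; shorter signals are treated as zero-padded.
    """
    length = max(len(signal) for signal, _, _ in sources)
    scene = [[0.0] * length for _ in range(4)]
    for signal, az, el in sources:
        for out_ch, src_ch in zip(scene, encode_source(signal, az, el)):
            for i, value in enumerate(src_ch):
                out_ch[i] += value
    w, x, y, z = scene
    return w, x, y, z


if __name__ == "__main__":
    # Hypothetical scene: a 1 kHz tone sampled at 8 kHz, placed 90° to the left.
    fs = 8000
    tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(16)]
    w, x, y, z = encode_scene([(tone, 90.0, 0.0)])
    # Peak level per channel: energy should appear in W and Y, not in X or Z.
    print([round(max(abs(v) for v in ch), 3) for ch in (w, x, y, z)])
```

Because the encoding is linear, multiple localized sources can simply be summed channel by channel. Switching to the AmbiX convention (ACN order W, Y, Z, X with SN3D normalization) would, at first order, only reorder the channels and drop the 1/√2 factor on W.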