Gaussian Process LSTM Recurrent Neural Network Language Models for Speech Recognition

- Submitted by: Max W. Y. Lam

- Last updated: 7 May 2019, 11:49pm

- Document Type: Poster

- Document Year: 2019

- Presenters: Max W. Y. Lam

- Paper Code: 1296


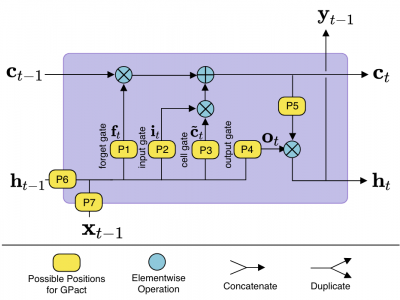

Recurrent neural network language models (RNNLMs) have shown superior performance across a range of speech recognition tasks. At the heart of all RNNLMs, the activation functions play a vital role in controlling the information flow and in tracking the longer history contexts that are useful for predicting the following words. Long short-term memory (LSTM) units are well known for this ability and are thus widely used in current RNNLMs. However, the deterministic parameter estimates in LSTM RNNLMs are prone to over-fitting and poor generalization when training data is limited. Furthermore, the precise forms of the activations in LSTMs have largely been set empirically and applied globally to all cells. To address these issues, this paper introduces Gaussian process (GP) LSTM RNNLMs. In addition to modeling parameter uncertainty under a Bayesian framework, the approach allows the optimal forms of the gates to be learned automatically for individual LSTM cells. Experiments were conducted on three tasks: the Penn Treebank (PTB) corpus, Switchboard conversational telephone speech (SWBD) and the AMI meeting room data. The proposed GP-LSTM RNNLMs consistently outperform the baseline LSTM RNNLMs in terms of both perplexity and word error rate.
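The abstract does not give the GP-LSTM formulation, so the following is only a minimal sketch of the general idea, under stated assumptions. It assumes each gate's activation is a weighted sum of fixed basis functions with independent Gaussian weights, which makes the activation a Gaussian process (the weight-space view). Each cell then has its own distribution over activation shapes rather than a globally fixed sigmoid or tanh. The basis set (sigmoid, tanh, sin, cos), the names `GPActivation` and `lstm_step`, and the single-cell scalar LSTM are all hypothetical illustrations. This is not the authors' implementation.

```python
import math
import random
from typing import Callable, Dict, List, Sequence, Tuple


def _sigmoid(x: float) -> float:
    # Numerically stable logistic sigmoid.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


# Hypothetical basis set: the abstract does not say which basis functions are used.
BASIS: List[Callable[[float], float]] = [_sigmoid, math.tanh, math.sin, math.cos]


class GPActivation:
    """Hypothetical per-cell activation f(x) = sum_k w_k * phi_k(x), w_k ~ N(mu_k, sigma_k^2).

    Independent Gaussian weights on fixed basis functions make f a Gaussian
    process (weight-space view), giving each cell its own distribution over
    activation shapes instead of one fixed nonlinearity.
    """

    def __init__(self, mu: Sequence[float], sigma: Sequence[float]) -> None:
        if not (len(mu) == len(sigma) == len(BASIS)):
            raise ValueError("mu and sigma need one entry per basis function")
        self.mu = list(mu)
        self.sigma = list(sigma)

    def features(self, x: float) -> List[float]:
        return [phi(x) for phi in BASIS]

    def mean(self, x: float) -> float:
        # GP mean function: E[f(x)] = sum_k mu_k * phi_k(x)
        return sum(m * p for m, p in zip(self.mu, self.features(x)))

    def kernel(self, x: float, x2: float) -> float:
        # GP covariance: Cov[f(x), f(x2)] = sum_k sigma_k^2 * phi_k(x) * phi_k(x2)
        return sum(s * s * a * b for s, a, b in
                   zip(self.sigma, self.features(x), self.features(x2)))

    def sample(self, x: float, rng: random.Random) -> float:
        # Reparameterised weight draw: w_k = mu_k + sigma_k * eps_k, eps_k ~ N(0, 1)
        w = [m + s * rng.gauss(0.0, 1.0) for m, s in zip(self.mu, self.sigma)]
        return sum(wk * p for wk, p in zip(w, self.features(x)))


def lstm_step(x: float, h: float, c: float,
              params: Dict[str, Tuple[float, float, float]],
              acts: Dict[str, GPActivation],
              rng: random.Random) -> Tuple[float, float]:
    """One step of a single-cell LSTM whose gates i, f, o and candidate g use GP activations.

    Fresh weights are sampled for every gate on every call (one Monte Carlo draw).
    """
    def pre(name: str) -> float:
        wx, wh, b = params[name]  # input weight, recurrent weight, bias
        return wx * x + wh * h + b

    i, f, o, g = (acts[k].sample(pre(k), rng) for k in "ifog")
    c_new = f * c + i * g         # standard LSTM cell-state update
    h_new = o * math.tanh(c_new)  # standard LSTM hidden-state output
    return h_new, c_new
```

Setting every `sigma_k` to 0, with `mu` selecting the sigmoid for `i`, `f`, `o` and tanh for `g`, recovers a conventional LSTM cell, which makes a convenient sanity check. Note that in this sketch the sampled gate values are not constrained to (0, 1), and the sketch does not cover how the weight distributions would be trained.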

IEEE Xplore link: https://ieeexplore.ieee.org/document/8683660