Documents

Poster

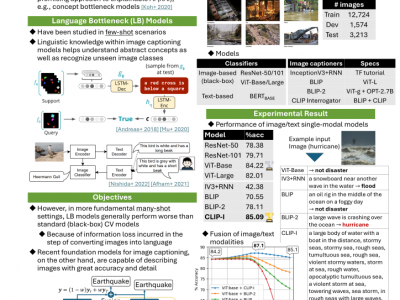

Reading Is Believing: Revisiting Language Bottleneck Models for Image Classification

- DOI:

- 10.60864/n50t-ax16

- Citation Author(s):

- Submitted by:

- Takafumi Koshinaka

- Last updated:

- 29 October 2024 - 2:41am

- Document Type:

- Poster

- Document Year:

- 2024

- Event:

- Presenters:

- Takafumi Koshinaka

- Paper Code:

- MP1.PC.1

- Categories:

- Log in to post comments

We revisit language bottleneck models as an approach to ensuring the explainability of deep learning models for image classification. Because of inevitable information loss incurred in the step of converting images into language, the accuracy of language bottleneck models is considered to be inferior to that of standard black-box models. Recent image captioners based on large-scale foundation models of Vision and Language, however, have the ability to accurately describe images in verbal detail to a degree that was previously believed to not be realistically possible. In a task of disaster image classification, we experimentally show that a language bottleneck model that combines a modern image captioner with a pre-trained language model can achieve image classification accuracy that exceeds that of black-box models. We also demonstrate that a language bottleneck model and a black-box model may be thought to extract different features from images and that fusing the two can create a synergistic effect, resulting in even higher classification accuracy.