- Robust Self-Supervised Learning With Contrast Samples For Natural Language Understanding

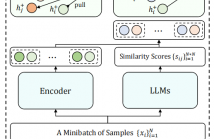

To improve the robustness of pre-trained language models (PLMs), previous studies have focused mainly on how to efficiently obtain adversarial samples that preserve the original semantics, and have paid less attention to perturbed samples that change the gold label. To account for the effects of these different types of small perturbations on robustness, we propose a RObust Self-supervised leArning (ROSA) method, which incorporates the different types of perturbed samples and the robustness improvements into a unified framework.


- Feature Mixing-based Active Learning for Multi-label Text Classification

Active learning (AL) aims to reduce labeling costs by selecting the most valuable samples to annotate from a pool of unlabeled data. However, identifying these samples is particularly challenging in multi-label text classification because the label space is both high-dimensional and sparse. Existing AL techniques either fail to sufficiently capture label correlations, which leads to label imbalance in the selected samples, or incur significant computational costs when assessing the informativeness of unlabeled samples across all labels.
