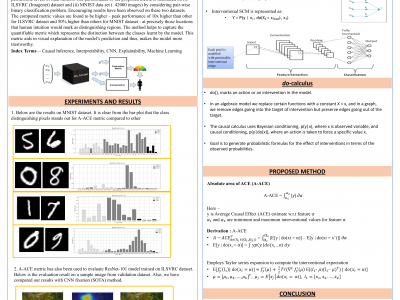

Class Specific Interpretability in CNN Using Causal Analysis

- Submitted by: Ankit Yadu

- Last updated: 25 September 2021 - 2:25pm

- Document Type: Presentation Slides

- Document Year: 2021

- Presenters: Ankit Yadu

- Paper Code: 2719

A major problem that limits the wide applicability of machine learning (ML) models is their lack of generalizability and interpretability. The ML community is increasingly working to bridge this gap. Prominent among these efforts are methods that study the causal significance of features, using techniques such as the Average Causal Effect (ACE). In this paper, our objective is to use the causal analysis framework to measure the significance of features in a binary classification task. To this end, we propose a novel ACE-based metric called "Absolute area under ACE (A-ACE)", which computes the area under the absolute value of the ACE across the permissible levels of intervention. The performance of the proposed metric is illustrated on the MNIST data set (~42000 images) by considering pair-wise binary classification problems. The computed metric values are found to be higher (peak performance 50% higher than others) at precisely those locations that human intuition would mark as distinguishing regions. The method thus yields a quantifiable metric that represents the distinction between the classes learnt by the model. This metric aids visual explanation of the model's predictions and thus makes the model more trustworthy.
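
To make the idea of an "area of the absolute value of the ACE" concrete, the following is a minimal sketch, not the authors' implementation. It assumes that the ACE of a pixel at intervention level `alpha` is the interventional expectation of the model output minus its average over all levels, and it approximates that expectation by forcing the pixel to `alpha` in every image and averaging the model output. The function names, the trapezoidal integration, and the toy model are all hypothetical choices made for illustration.

```python
import numpy as np

def interventional_expectation(model, images, pixel, alpha):
    """Mean model output when `pixel` is forced to `alpha` in every image.

    Assumption: this empirical average stands in for E[y | do(x_pixel = alpha)].
    """
    intervened = images.copy()
    intervened[:, pixel] = alpha
    return float(np.mean(model(intervened)))

def a_ace(model, images, pixel, alphas):
    """Area under |ACE(alpha)| over the intervention levels `alphas`."""
    expectations = np.array(
        [interventional_expectation(model, images, pixel, a) for a in alphas]
    )
    # Assumed baseline: average interventional expectation over all levels.
    baseline = expectations.mean()
    abs_ace = np.abs(expectations - baseline)
    # Trapezoidal rule over the sampled intervention levels.
    widths = np.diff(alphas)
    return float(np.sum((abs_ace[1:] + abs_ace[:-1]) / 2.0 * widths))

# Toy setup (hypothetical): flattened 4-pixel "images" and a classifier
# whose output depends only on pixel 0.
rng = np.random.default_rng(0)
images = rng.random((200, 4))
alphas = np.linspace(0.0, 1.0, 11)

def toy_model(x):
    return 1.0 / (1.0 + np.exp(-(4.0 * x[:, 0] - 2.0)))

scores = [a_ace(toy_model, images, p, alphas) for p in range(images.shape[1])]
```

In this toy setup, the score for pixel 0 is positive while the scores for the pixels that the model ignores are effectively zero, which mirrors the claim that the metric is larger in regions that distinguish the two classes.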