Graph Signal Sampling via Reinforcement Learning

- Submitted by: Oleksii Abramenko
- Last updated: 30 May 2019 - 10:50am
- Document Type: Poster
- Document Year: 2019
- Presenters: Oleksii Abramenko
- Paper Code: 3554

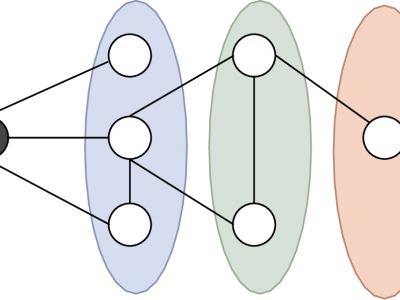

We model the sampling and recovery of clustered graph signals as a reinforcement learning (RL) problem. Signal sampling is carried out by an agent that crawls over the graph and selects the most relevant graph nodes to sample. The agent's goal is to select signal samples that allow for the most accurate recovery. Sample selection is formulated as a multi-armed bandit (MAB) problem, which lends itself naturally to learning efficient sampling strategies with the well-known gradient MAB algorithm. In a nutshell, the sampling strategy is represented as a probability distribution over the individual arms of the MAB and is optimized using gradient ascent. Illustrative numerical experiments indicate that the sampling strategies obtained from the gradient MAB algorithm outperform existing sampling methods.
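The abstract describes the strategy as a probability distribution over arms that is optimized by gradient ascent. A minimal sketch of the textbook gradient bandit algorithm (softmax over per-arm preferences, updated with a running-average reward baseline, as in Sutton and Barto) is shown below. It is an illustration under stated assumptions, not the authors' implementation: what an "arm" is in the graph setting and how the reward is computed from recovery accuracy are not specified here, so `toy_reward`, `means`, and all parameter values are hypothetical stand-ins.

```python
import math
import random


def softmax(prefs):
    """Turn per-arm preferences H(a) into a probability distribution pi(a)."""
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(h - m) for h in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def gradient_bandit(reward_fn, n_arms, steps=2000, alpha=0.1, seed=0):
    """Textbook gradient bandit: stochastic gradient ascent on expected reward.

    reward_fn(arm, rng) -> float is a hypothetical reward signal; in the
    sampling setting it would presumably score signal-recovery accuracy.
    """
    rng = random.Random(seed)
    prefs = [0.0] * n_arms  # preferences H(a), start uniform
    baseline = 0.0  # running average of all rewards seen so far
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        arm = rng.choices(range(n_arms), weights=probs)[0]
        reward = reward_fn(arm, rng)
        baseline += (reward - baseline) / t  # incremental mean
        # H(a) += alpha * (R - baseline) * (1[a == arm] - pi(a))
        for a in range(n_arms):
            indicator = 1.0 if a == arm else 0.0
            prefs[a] += alpha * (reward - baseline) * (indicator - probs[a])
    return softmax(prefs)


# Hypothetical toy problem: 4 arms with noisy rewards of different means.
means = [0.2, 0.5, 0.9, 0.4]


def toy_reward(arm, rng):
    return rng.gauss(means[arm], 0.1)


policy = gradient_bandit(toy_reward, n_arms=4)
best = max(range(4), key=lambda a: policy[a])
print([round(p, 3) for p in policy], "best arm:", best)
```

On this toy problem the learned distribution concentrates on the arm with the highest mean reward. The baseline only reduces the variance of the gradient estimate; any per-step reward could be plugged in through `reward_fn`.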