Poster

Perceptually-motivated environment-specific speech enhancement

- Submitted by: Jiaqi Su

- Last updated: 10 May 2019, 1:40am

- Document Type: Poster

- Document Year: 2019

- Paper Code: 4382


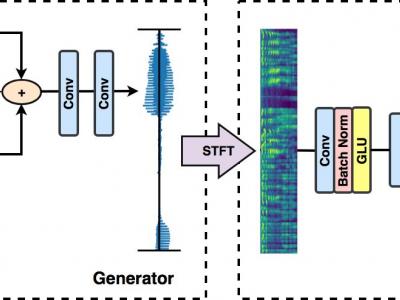

This paper introduces a deep learning approach to enhance speech recordings made in a specific environment. A single neural network learns to ameliorate several types of recording artifacts, including noise, reverberation, and non-linear equalization. The method relies on a new perceptual loss function that combines adversarial loss with spectrogram features. Both subjective and objective evaluations show that the proposed approach improves on state-of-the-art baseline methods.
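The abstract does not give the exact form of the perceptual loss. As a rough illustration of what "adversarial loss combined with spectrogram features" can look like, here is a minimal sketch. It assumes a least-squares adversarial term computed on precomputed discriminator scores and an L1 distance between log-magnitude spectrograms. The function names, STFT settings (`n_fft`, `hop`), and the `weight` parameter are hypothetical and are not taken from the paper.

```python
import numpy as np

def log_spectrogram(x, n_fft=512, hop=128, eps=1e-6):
    """Log-magnitude STFT of a 1-D signal with a Hann window (hypothetical settings)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    # Slice overlapping frames and apply the window to each one.
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    # rfft returns n_fft // 2 + 1 frequency bins per frame.
    return np.log(np.abs(np.fft.rfft(frames, axis=-1)) + eps)

def spectrogram_loss(enhanced, clean):
    """Mean absolute difference between log spectrograms (assumed feature term)."""
    return float(np.mean(np.abs(log_spectrogram(enhanced) - log_spectrogram(clean))))

def adversarial_loss(disc_scores):
    """Least-squares GAN generator loss: push discriminator outputs toward 1 (assumed form)."""
    return float(np.mean((np.asarray(disc_scores) - 1.0) ** 2))

def combined_loss(enhanced, clean, disc_scores, weight=1.0):
    """Weighted sum of the adversarial and spectrogram terms (hypothetical weighting)."""
    return adversarial_loss(disc_scores) + weight * spectrogram_loss(enhanced, clean)

# Usage: compare a noisy copy of a 440 Hz tone against the clean tone.
t = np.arange(16000) / 16000.0
clean = np.sin(2 * np.pi * 440 * t)
noisy = clean + 0.1 * np.random.default_rng(0).standard_normal(clean.shape)
print(combined_loss(noisy, clean, disc_scores=np.full(8, 0.5)))
```

The spectrogram term rewards matching the clean signal's time-frequency content, while the adversarial term (here taken as a given array of discriminator outputs, since training a discriminator is outside the scope of this sketch) rewards outputs the discriminator judges as realistic.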