- Read more about Lossless Compression for Video Streams with Frequency Prediction and Macro Block Merging

Cloud services are emerging as a promising way to handle the massive volumes of video generated by growing demand for video services, especially surveillance and entertainment. Lossless compression of already-encoded video bitstreams can remove remaining redundancy without altering the content, making cloud storage more efficient. In this paper, we propose a novel lossless compression scheme to further compress the video bitstreams generated by state-of-the-art hybrid coding frameworks such as H.264/AVC and HEVC.

50 Views

- Read more about Modulated Variable-Rate Deep Video Compression

In this work, we propose a variable-rate scheme for deep video compression that achieves a continuously variable rate with a single model. The key idea is to use the R-D tradeoff parameter \(\lambda\) as a conditioning parameter that controls the bitrate. The scheme is built on DVC, which jointly learns motion estimation, motion compression, motion compensation, and residual compression functions. In this framework, the motion and residual compression auto-encoders are critical for rate adaptation because they generate the final bitstream directly.
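
For context, the role of \(\lambda\) can be written out as the usual Lagrangian rate-distortion objective. The form below is an assumption based on how DVC-style codecs are commonly trained; the abstract itself does not state it, and the symbols \(R\) and \(D\) are introduced here only for illustration:

\[
L(\lambda) = \lambda \, D + R
\]

Here \(D\) denotes the distortion between the original and reconstructed frame, and \(R\) denotes the number of bits spent on the compressed motion and residual representations. Under this form, a larger \(\lambda\) penalizes distortion more heavily and so yields higher quality at a higher bitrate, which is why conditioning a single model on \(\lambda\) can, in principle, cover a continuous range of rates.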

83 Views

- Read more about Convolutional Neural Network for Image Compression with Application to JPEG Standard

In this paper, the authors present a novel convolutional neural network structure for lossy image compression, intended for use as part of JPEG’s standard image compression stream. The network is trained on randomly selected images from a high-quality dataset of human faces, and its effectiveness is verified experimentally on standard test images.

115 Views

- Read more about Fast GLCM-based Intra Block Partition for VVC

The latest video coding standard, Versatile Video Coding (H.266/VVC), introduces a new quadtree with nested multi-type tree (QTMT) coding block structure. QTMT significantly improves coding performance, but the more complex block partitioning structure brings a greater computational burden. To solve this problem, a fast intra block partition pattern pruning algorithm is proposed that uses the gray level co-occurrence matrix (GLCM) to calculate texture direction information of coding units, terminating the horizontal or vertical split of the binary tree and the ternary tree in advance.
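
To make the GLCM idea concrete, the following is a minimal sketch of how a co-occurrence matrix can indicate texture direction in a block. It is not the pruning rule from the paper: the function names (`glcm`, `contrast`, `texture_direction`), the 8 gray levels, and the `ratio` threshold are all assumptions chosen for illustration, and the contrast feature is just one common GLCM statistic.

```python
import numpy as np

def glcm(block, dx, dy, levels=8):
    """Normalized gray level co-occurrence matrix for a non-negative offset (dx, dy)."""
    # Quantize 8-bit samples down to `levels` gray levels (assumed value: 8).
    q = (block.astype(np.int64) * levels) // 256
    h, w = q.shape
    # Pair every pixel with its neighbour dx columns right and dy rows down.
    ref = q[0:h - dy, 0:w - dx]
    nbr = q[dy:h, dx:w]
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1.0)  # count co-occurrences
    return m / m.sum()

def contrast(p):
    """GLCM contrast: large when paired pixels differ strongly."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))

def texture_direction(block, ratio=2.0):
    """Guess whether the texture runs horizontally or vertically.

    `ratio` is a hypothetical threshold, not a value from the paper.
    """
    c_h = contrast(glcm(block, dx=1, dy=0))  # variation between horizontal neighbours
    c_v = contrast(glcm(block, dx=0, dy=1))  # variation between vertical neighbours
    if c_v > ratio * c_h:
        return "horizontal"  # pixels change little along rows
    if c_h > ratio * c_v:
        return "vertical"    # pixels change little along columns
    return "undetermined"

# Example: an 8x8 block of horizontal stripes (rows alternate 0 and 255).
stripes = np.tile(np.array([[0], [255]], dtype=np.uint8), (4, 8))
print(texture_direction(stripes))
```

A fast partitioner might use such a direction estimate as a hint for skipping one split direction early, but the actual decision rule and thresholds would have to come from the paper.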

43 Views

- Read more about Lossy Compression for Integrating Event Sensors

44 Views

- Read more about Multiscale Point Cloud Geometry Compression

Recent years have witnessed the growth of point cloud based applications for both immersive media and 3D sensing for autonomous driving, because point clouds offer a realistic and fine-grained representation of 3D objects and scenes. However, compressing sparse, unstructured, and high-precision 3D points for efficient communication is a challenging problem. In this paper, leveraging the sparse nature of point clouds, we propose a multiscale end-to-end learning framework that hierarchically reconstructs the 3D Point Cloud Geometry (PCG) via progressive re-sampling.

804 Views

- Read more about SLFC: Scalable Light Field Coding

Light field imaging enables post-processing capabilities such as refocusing, changing the view perspective, and depth estimation. Because light field images are represented by multiple views, they contain a huge amount of data, which makes compression essential. Although there are proposals for compressing light field images efficiently, their main focus is on encoding efficiency. Important functionalities such as viewpoint and quality scalability, random access, and uniform quality distribution have not been addressed adequately.

73 Views

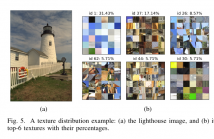

- Read more about JQF: Optimal JPEG Quantization Table Fusion by Simulated Annealing on Texture Images and Predicting Textures

JPEG has been a widely used lossy image compression codec for nearly three decades. The JPEG standard allows the use of customized quantization tables; however, finding an optimal quantization table at acceptable computational cost remains a challenging problem. This work tries to resolve the tension between computational cost and image-specific optimality by introducing a new concept of texture mosaic images.
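
As background on what a quantization table does, here is a minimal sketch of JPEG-style quantization of a single 8x8 block. It illustrates only the general mechanism, not the table fusion or simulated annealing proposed in the work. The helper names (`dct_matrix`, `quantize_block`, `dequantize_block`) and the uniform example tables are assumptions for illustration; real encoders use tables with a different step size per frequency.

```python
import numpy as np

def dct_matrix(n=8):
    """Orthonormal DCT-II basis matrix of size n x n."""
    k = np.arange(n).reshape(-1, 1)   # frequency index
    x = np.arange(n).reshape(1, -1)   # sample index
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * x + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)        # DC row has its own scaling
    return c

def quantize_block(block, qtable):
    """Level-shift, transform, and quantize one 8x8 block of 8-bit samples."""
    c = dct_matrix(8)
    coeffs = c @ (block.astype(np.float64) - 128.0) @ c.T
    # Each coefficient is divided by its own table entry and rounded.
    return np.rint(coeffs / qtable).astype(np.int32)

def dequantize_block(levels, qtable):
    """Invert the quantization and the transform (lossy reconstruction)."""
    c = dct_matrix(8)
    coeffs = levels * qtable
    return np.clip(np.rint(c.T @ coeffs @ c + 128.0), 0, 255).astype(np.uint8)

# Hypothetical uniform tables: a fine one and a coarse one.
fine = np.full((8, 8), 1.0)
coarse = np.full((8, 8), 50.0)

rng = np.random.default_rng(0)
block = rng.integers(0, 256, size=(8, 8), dtype=np.uint8)

# A coarser table zeroes out more coefficients, which costs fewer bits
# after entropy coding but increases reconstruction error.
print(np.count_nonzero(quantize_block(block, fine)),
      np.count_nonzero(quantize_block(block, coarse)))
```

Because every table entry trades bits against error for one frequency, the search space for a customized table is large, which is the cost-versus-optimality tension the abstract describes.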

70 Views

105 Views
