- Read more about An Efficient QP Variable Convolutional Neural Network Based In-loop Filter for Intra Coding

In this paper, a novel QP-variable convolutional neural network based in-loop filter is proposed for VVC intra coding. To avoid training and deploying multiple networks, we develop an efficient QP attention module (QPAM) that captures compression noise levels for different QPs and emphasizes meaningful features along the channel dimension. We then embed QPAM into the residual block and, based on it, design a network architecture that is controllable across different QPs.
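The abstract does not say how QPAM is built. As a rough illustration of QP-conditioned channel attention in general, the sketch below assumes one gate per channel, computed from the normalized QP through a linear map and a sigmoid, and then used to rescale each feature channel. The function names, the linear-plus-sigmoid form, and the example weights are all hypothetical and are not taken from the paper.

```python
import math

def qp_channel_gates(qp, weights, biases, qp_max=63):
    """Return one gate in (0, 1) per channel for the given QP.

    Assumption: gate_c = sigmoid(w_c * qp / qp_max + b_c). The paper's
    actual QPAM may be structured very differently.
    """
    qp_norm = qp / qp_max  # VVC QPs range from 0 to 63
    return [1.0 / (1.0 + math.exp(-(w * qp_norm + b)))
            for w, b in zip(weights, biases)]

def apply_qp_attention(features, gates):
    """Scale every value of channel c by gates[c].

    `features` is a list of channels, each a flat list of samples.
    """
    return [[g * v for v in channel] for channel, g in zip(features, gates)]

if __name__ == "__main__":
    # Hypothetical two-channel feature map and hand-picked parameters.
    feats = [[2.0, 4.0], [1.0, 1.0]]
    gates = qp_channel_gates(qp=32, weights=[0.0, 2.0], biases=[0.0, 0.0])
    print(apply_qp_attention(feats, gates))
```

Because the gates depend only on the QP, a single set of learned parameters could in principle serve all QPs, which is the motivation the abstract gives for avoiding one network per QP.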

58 Views

- Read more about On Intra Video Coding and In-Loop Filtering for Neural Object Detection Networks

Classical video coding, which targets humans as the final user, is a widely investigated field of study for visual content, and common video codecs are all optimized for the human visual system (HVS). But are the assumptions and optimizations also valid when the compressed video stream is analyzed by a machine? To answer this question, we compared the performance of two state-of-the-art neural detection networks when fed with deteriorated input images coded with HEVC and VVC in an autonomous driving scenario using intra coding.

43 Views

- Read more about Memory Assessment of Versatile Video Coding

This work presents a memory assessment of the next-generation Versatile Video Coding (VVC). The memory analyses adopt the state-of-the-art High-Efficiency Video Coding (HEVC) as a baseline. The goal is to offer insights and observations on how severely the memory requirements of VVC are aggravated compared to HEVC. The adopted methodology consists of two sets of experiments: (1) an overall memory profiling and (2) an inter-prediction specific memory analysis. The results obtained in the memory profiling show that VVC accesses up to 13.4x more memory than HEVC.

11 Views

- Read more about Improving PSNR-Based Quality Metrics Performance for Point Cloud Geometry

An increased interest in immersive applications has drawn attention to emerging 3D imaging representation formats, notably light fields and point clouds (PCs). Nowadays, PCs are one of the most popular 3D media formats, due to recent developments in PC acquisition, namely depth sensors and signal processing algorithms. To obtain high-fidelity 3D representations of visual scenes, a huge amount of PC data is typically acquired, which demands efficient compression solutions.

53 Views

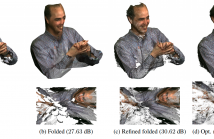

Existing techniques to compress point cloud attributes leverage either geometric or video-based compression tools. We explore a radically different approach inspired by recent advances in point cloud representation learning. Point clouds can be interpreted as 2D manifolds in 3D space. Specifically, we fold a 2D grid onto a point cloud and map attributes from the point cloud onto the folded 2D grid using a novel optimized mapping method. This mapping results in an image, which opens a way to apply existing image processing techniques to point cloud attributes.

49 Views

High-Throughput JPEG2000 (HTJ2K) is a new addition to the JPEG2000 suite of coding tools; it has recently been approved as Part 15 of the JPEG2000 standard, and the JPH file extension has been designated for it. HTJ2K employs a new “fast” block coder that can achieve higher encoding and decoding throughput than a conventional JPEG2000 (C-J2K) encoder. The higher throughput is achieved because the HTJ2K codec processes wavelet coefficients in a smaller number of steps than C-J2K.

2498.pdf

224 Views

- Read more about DEPTH MAPS FAST SCALABLE COMPRESSION BASED ON CODING UNIT DEPTH

19 Views

- Read more about Semantic Preserving Image Compression

Video traffic makes up a large majority of the total traffic on the internet today. Uncompressed visual data requires a very large data rate; lossy compression techniques are employed to keep the data rate manageable. Increasingly, a significant amount of the visual data being generated is consumed by analytics (such as classification and detection) residing in the cloud. Image and video compression can produce visual artifacts, especially at lower data rates, which can result in a significant drop in performance on such analytic tasks.

60 Views

- Read more about ALTERNATIVE HALF-SAMPLE INTERPOLATION FILTERS FOR VERSATILE VIDEO CODING

To reduce the residual energy of a video signal, motion compensated prediction with fractional-sample accuracy has been successfully employed in modern video coding technology. In contrast to the fixed quarter-sample motion vector resolution for the luma component in the High Efficiency Video Coding standard, the current draft of the new Versatile Video Coding standard introduces a block-level adaptive motion vector resolution (AMVR) scheme. AMVR allows motion vector differences to be coded at different precisions.
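To make the idea of coding a motion vector difference at a selectable precision concrete, here is a minimal sketch. It assumes the difference is held in quarter-sample units and is rounded to the nearest multiple of the chosen step, with ties rounded away from zero. The step table, the function name `round_mvd`, and the rounding rule are illustrative assumptions, not the normative VVC process.

```python
# Step sizes expressed in quarter-sample units (illustrative table).
AMVR_STEPS = {
    "quarter": 1,   # 1/4 luma sample
    "half": 2,      # 1/2 luma sample
    "integer": 4,   # 1 luma sample
    "four": 16,     # 4 luma samples
}

def round_mvd(mvd_q, precision):
    """Round one MVD component (in quarter-sample units) to `precision`.

    Returns (rounded_value, coded_symbol), where coded_symbol is the
    rounded value divided by the step, i.e. the smaller integer that an
    encoder would actually need to signal.
    """
    step = AMVR_STEPS[precision]
    sign = -1 if mvd_q < 0 else 1
    symbol = sign * ((abs(mvd_q) + step // 2) // step)
    return symbol * step, symbol

if __name__ == "__main__":
    for precision in AMVR_STEPS:
        print(precision, round_mvd(7, precision))
```

A coarser precision yields a smaller symbol to signal at the cost of a less exact motion vector, which is why letting the encoder pick the precision per block can pay off.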

30 Views

- Read more about Non-Experts or Experts? Statistical Analyses of MOS using DSIS Method

In image quality assessments, the results of subjective evaluation experiments that use the double-stimulus impairment scale (DSIS) method are often expressed in terms of the mean opinion score (MOS), which is the average score of all subjects for each test condition. Some MOS values are used to derive image quality criteria, and it has been assumed that it is preferable to perform tests with non-expert subjects rather than with experts. In this study, we analyze the results of several subjective evaluation experiments using the DSIS method.
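As a small worked example of the MOS definition above, the sketch below averages the scores of all subjects for each test condition. The condition labels and ratings are made-up illustrative data on the five-grade DSIS impairment scale (5 = imperceptible, 1 = very annoying), and `mean_opinion_scores` is a hypothetical helper name.

```python
from statistics import mean

def mean_opinion_scores(ratings):
    """Map each test condition to the mean of its subjects' scores.

    `ratings` is a dict: condition label -> list of per-subject scores.
    """
    return {condition: mean(scores) for condition, scores in ratings.items()}

if __name__ == "__main__":
    # Hypothetical ratings from four subjects per condition.
    ratings = {
        "low_compression": [5, 4, 4, 5],
        "high_compression": [3, 2, 3, 4],
    }
    for condition, mos in mean_opinion_scores(ratings).items():
        print(condition, mos)
```

Running the same function separately on the expert and non-expert panels would give one MOS per panel and condition, which is the kind of comparison the study's title points to.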

27 Views
