- POLYPHONIC MUSIC SEQUENCE TRANSDUCTION WITH METER-CONSTRAINED LSTM NETWORKS

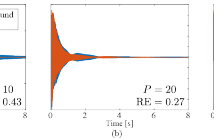

Automatic transcription of polyphonic music remains a challenging task in the field of Music Information Retrieval. In this paper, we propose a new method to post-process the output of a multi-pitch detection model using recurrent neural networks. In particular, we compare the use of a fixed sample rate against a meter-constrained time step on a piano performance audio dataset. The metric ground truth is estimated using automatic symbolic alignment, which we make available for further study.
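The difference between a fixed sample rate and a meter-constrained time step can be illustrated with a minimal sketch. It assumes beat times are already known (for example, from the symbolic alignment) and that the multi-pitch detector emits one activation vector per fixed-rate frame. The function names, the choice of four steps per beat, and the averaging rule are illustrative assumptions, not details taken from the paper.

```python
def meter_step_boundaries(beat_times, steps_per_beat=4):
    """Split each inter-beat interval into equal steps and return the boundary times."""
    bounds = []
    for start, end in zip(beat_times, beat_times[1:]):
        step = (end - start) / steps_per_beat
        bounds.extend(start + k * step for k in range(steps_per_beat))
    bounds.append(beat_times[-1])
    return bounds


def resample_to_meter(frames, frame_rate, beat_times, steps_per_beat=4):
    """Average fixed-rate frames (lists of per-pitch activations) inside each metrical step.

    Hypothetical helper: the step size and the averaging rule are assumptions.
    """
    n_pitches = len(frames[0]) if frames else 0
    bounds = meter_step_boundaries(beat_times, steps_per_beat)
    out = []
    for t0, t1 in zip(bounds, bounds[1:]):
        lo = int(round(t0 * frame_rate))
        hi = max(lo + 1, int(round(t1 * frame_rate)))  # keep at least one frame per step
        chunk = frames[lo:hi]
        if chunk:
            out.append([sum(f[p] for f in chunk) / len(chunk) for p in range(n_pitches)])
        else:
            out.append([0.0] * n_pitches)  # step lies beyond the available frames
    return out


# One pitch, 4 frames per second, beats at 0 s, 1 s and 2 s, two steps per beat.
frames = [[0], [0], [1], [1], [0], [0], [1], [1]]
steps = resample_to_meter(frames, frame_rate=4, beat_times=[0.0, 1.0, 2.0], steps_per_beat=2)
```

With a meter-constrained step, the sequence length follows the musical duration rather than the clock duration, so the same passage played at two tempi yields the same number of steps.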

21 Views

- TRANSCRIBING LYRICS FROM COMMERCIAL SONG AUDIO: THE FIRST STEP TOWARDS SINGING CONTENT PROCESSING

Spoken content processing (such as retrieval and browsing) is maturing, but singing content is still almost completely left out. Songs are human voice carrying plenty of semantic information, just as speech does, and may be considered a special type of speech with highly flexible prosody. The various problems in song audio, for example phone durations that change significantly over highly flexible pitch contours, make recognizing lyrics from song audio much more difficult. This paper reports an initial attempt towards this goal.

poster_v4.pdf

13 Views

- EFFECTIVE COVER SONG IDENTIFICATION BASED ON SKIPPING BIGRAMS

So far, few cover song identification systems that use indexing techniques have achieved great success. In this paper, we propose a novel approach based on skipping bigrams that can be used for effective indexing. By applying Vector Quantization, our algorithm encodes signals into code sequences. The bigram histograms of the code sequences are then used to represent the original recordings and to measure their similarities. Through Vector Quantization and skipping bigrams, our model shows great robustness against speed and structure variations in cover songs.
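A minimal sketch of the skipping-bigram idea, assuming the recordings have already been vector-quantized into sequences of integer codes. The abstract does not state the skip range, the codebook, or the similarity measure, so `max_skip` and the cosine similarity used here are illustrative assumptions rather than the authors' settings.

```python
from collections import Counter
import math


def skip_bigram_histogram(codes, max_skip=2):
    """Count ordered pairs (codes[i], codes[j]) with up to max_skip codes skipped between them."""
    hist = Counter()
    for i, first in enumerate(codes):
        last = min(i + max_skip + 2, len(codes))
        for j in range(i + 1, last):
            hist[(first, codes[j])] += 1
    return hist


def cosine_similarity(h1, h2):
    """Cosine similarity between two sparse histograms (an assumed similarity measure)."""
    dot = sum(count * h2[key] for key, count in h1.items() if key in h2)
    norm1 = math.sqrt(sum(c * c for c in h1.values()))
    norm2 = math.sqrt(sum(c * c for c in h2.values()))
    if norm1 == 0 or norm2 == 0:
        return 0.0
    return dot / (norm1 * norm2)


# Hypothetical code sequences: the "cover" repeats some codes, as a slower rendition might.
original = [3, 3, 7, 1, 4]
cover = [3, 3, 3, 7, 7, 1, 4]
similarity = cosine_similarity(skip_bigram_histogram(original), skip_bigram_histogram(cover))
```

Because pairs are counted across small gaps, a code that is stretched over extra frames in one version still produces many of the same pairs, which is one plausible reading of the robustness to speed variation that the abstract reports.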

31 Views

- A PARALLEL FUSION APPROACH TO PIANO MUSIC TRANSCRIPTION BASED ON CONVOLUTIONAL NEURAL NETWORK

22 Views

- Bayesian anisotropic Gaussian model for audio source separation

In audio source separation applications, it is common to model the sources as circular-symmetric Gaussian random variables, which is equivalent to assuming that the phase of each source is uniformly distributed. In this paper, we introduce an anisotropic Gaussian source model in which both the magnitude and phase parameters are modeled as random variables. In such a model, it becomes possible to promote a phase value that originates from a signal model and to adjust the relative importance of this underlying model-based phase constraint.
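The equivalence between circular symmetry and a uniform phase can be checked with a short derivation. The notation is an assumption for illustration: $X$ is a zero-mean circular-symmetric complex Gaussian with variance $\sigma^2$, written in polar form as $X = r e^{i\varphi}$.

$$
p_X(x) = \frac{1}{\pi\sigma^2}\exp\!\left(-\frac{|x|^2}{\sigma^2}\right)
\quad\Longrightarrow\quad
p(r,\varphi) = \frac{r}{\pi\sigma^2}\exp\!\left(-\frac{r^2}{\sigma^2}\right)
= \underbrace{\frac{2r}{\sigma^2}\exp\!\left(-\frac{r^2}{\sigma^2}\right)}_{\text{Rayleigh magnitude}}
\cdot
\underbrace{\frac{1}{2\pi}}_{\text{uniform phase}},
\qquad r \ge 0,\ \varphi \in [0, 2\pi).
$$

The joint density factorizes, so the phase is uniform on $[0, 2\pi)$ and independent of the magnitude. This means a circular-symmetric model carries no phase information at all.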

14 Views

- MODAL DECOMPOSITION OF MUSICAL INSTRUMENT SOUND VIA ALTERNATING DIRECTION METHOD OF MULTIPLIERS

For a musical instrument sound containing partials, or modes, the behavior of the modes around the attack time is particularly important. However, accurately decomposing the sound around the attack time is not an easy task, especially when the onset is sharp. This is because the spectra of the modes are peaky, while a sharp onset requires a broad spectrum. In this paper, an optimization-based method of modal decomposition is proposed to achieve accurate decomposition around the attack time.

31 Views

- COVER SONG IDENTIFICATION USING SONG-TO-SONG CROSS-SIMILARITY MATRIX WITH CONVOLUTIONAL NEURAL NETWORK

In this paper, we propose a cover song identification algorithm using a convolutional neural network (CNN). We first train the CNN model to classify any non-/cover relationship, by feeding a cross-similarity matrix that is generated from a pair of songs as an input. Our main idea is to use the CNN output–the cover-probabilities of one song to all other candidate songs–as a new representation vector for measuring the distance between songs. Based on this, the present algorithm searches cover songs by applying several ranking methods: 1. sorting without using the representation vectors; 2.

167 Views

- COVER SONG IDENTIFICATION USING SONG-TO-SONG CROSS-SIMILARITY MATRIX WITH CONVOLUTIONAL NEURAL NETWORK

In this paper, we propose a cover song identification algorithm using a convolutional neural network (CNN). We first train the CNN model to classify any non-/cover relationship, by feeding a cross-similarity matrix that is generated from a pair of songs as an input. Our main idea is to use the CNN output–the cover-probabilities of one song to all other candidate songs–as a new representation vector for measuring the distance between songs. Based on this, the present algorithm searches cover songs by applying several ranking methods: 1. sorting without using the representation vectors; 2.

37 Views

- PARAMETRIC APPROXIMATION OF PIANO SOUND BASED ON KAUTZ MODEL WITH SPARSE LINEAR PREDICTION

The piano is one of the most popular and attractive musical instruments, which has led to a great deal of research on it.

To synthesize piano sound on a computer, many modeling methods have been proposed, ranging from full physical models to approximated models. The focus of this paper is on the latter, approximating piano sound by an IIR filter.

13 Views

- InstListener: An Expressive Parameter Estimation System Imitating Human Performances of Monophonic Musical Instruments

We present InstListener, a system that takes an expressive monophonic solo instrument performance by a human performer as the input and imitates its audio recordings by using an existing MIDI (Musical Instrument Digital Interface) synthesizer. It automatically analyzes the input and estimates, for each musical note, expressive performance parameters such as the timing, duration, discrete semitone-level pitch, amplitude, continuous pitch contour, and continuous amplitude contour.

11 Views