- Read more about CONSENSUS-BASED DISTRIBUTED CLUSTERING FOR IOT

- Log in to post comments

Clustering is a common technique for statistical data analysis and it has been widely used in many fields. When the data is collected via a distributed network or distributedly stored, data analysis algorithms have to be designed in a distributed fashion. This paper investigates data clustering with distributed data. Facing the distributed network challenges including data volume, communication latency, and information security, we here propose a distributed clustering algorithm where each IoT device may have data from multiple clusters.

- Categories:

48 Views

48 Views

- Read more about COOPERATIVE LEARNING VIA FEDERATED DISTILLATION OVER FADING CHANNELS

- Log in to post comments

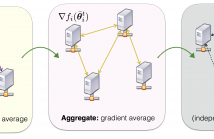

Cooperative training methods for distributed machine learning are typically based on the exchange of local gradients or local model parameters. The latter approach is known as Federated Learning (FL). An alternative solution with reduced communication overhead, referred to as Federated Distillation (FD), was recently proposed that exchanges only averaged model outputs.

- Categories:

9 Views

9 Views

- Read more about Artificial Intelligence based region of interest enhanced video compression

- 1 comment

- Log in to post comments

- Categories:

59 Views

59 Views

- Read more about Non-Asymptotic Rates for Communication Efficient Distributed Zeroth Order Strongly Convex Optimization

- Log in to post comments

This paper focuses on the problem of communication efficient distributed zeroth order minimization of a sum of strongly convex loss functions. Specifically, we develop distributed stochastic optimization methods for zeroth order strongly convex optimization that are based on an adaptive probabilistic sparsifying communications protocol.

- Categories:

13 Views

13 Views

- Read more about Delayed Weight Update for Faster Convergence in Data-parallel Deep Learning

- Log in to post comments

- Categories:

23 Views

23 Views

The present work introduces the hybrid consensus alternating direction method of multipliers (H-CADMM), a novel framework for optimization over networks which unifies existing distributed optimization approaches, including the centralized and the decentralized consensus ADMM. H-CADMM provides a flexible tool that leverages the underlying graph topology in order to achieve a desirable sweet-spot between node-to-node communication overhead and rate of convergence -- thereby alleviating known limitations of both C-CADMM and D-CADMM.

ICASSP2018.pdf

- Categories:

13 Views

13 Views- Read more about TRAINING SAMPLE SELECTION FOR DEEP LEARNING OF DISTRIBUTED DATA

- Log in to post comments

The success of deep learning—in the form of multi-layer neural networks — depends critically on the volume and variety of training data. Its potential is greatly compromised when training data originate in a geographically distributed manner and are subject to bandwidth constraints. This paper presents a data sampling approach to deep learning, by carefully discriminating locally available training samples based on their relative importance.

- Categories:

43 Views

43 Views

- Read more about A Projection-free Decentralized Algorithm for Non-convex Optimization

- Log in to post comments

This paper considers a decentralized projection free algorithm for non-convex optimization in high dimension. More specifically, we propose a Decentralized Frank-Wolfe (DeFW)

algorithm which is suitable when high dimensional optimization constraints are difficult to handle by conventional projection/proximal-based gradient descent methods. We present conditions under which the DeFW algorithm converges to a stationary point and prove that the rate of convergence is as fast as ${\cal O}( 1/\sqrt{T} )$, where

- Categories:

33 Views

33 Views

- Read more about Distributed Sequence Prediction: A consensus+innovations approach

- Log in to post comments

This paper focuses on the problem of distributed sequence

prediction in a network of sparsely interconnected agents,

where agents collaborate to achieve provably reasonable

predictive performance. An expert assisted online learning

algorithm in a distributed setup of the consensus+innovations

form is proposed, in which the agents update their weights

for the experts’ predictions by simultaneously processing the

latest network losses (innovations) and the cumulative losses

obtained from neighboring agents (consensus). This paper

- Categories:

5 Views

5 Views- Read more about Opportunistic Spectrum Access with Temporal-Spatial Reuse in Cognitive Radio Networks

- Log in to post comments

We formulate and study a multi-user multi-armed bandit (MAB) problem that exploits the temporal-spatial reuse of primary user (PU) channels so that secondary users (SUs) who do not interfere with each other can make use of the same PU channel. We first propose a centralized channel allocation policy that has logarithmic regret, but requires a central processor to solve a NP-complete optimization problem at exponentially increasing time intervals.

- Categories:

17 Views

17 Views