- Dirichlet Process Mixture Models for Clustering I-Vector Data

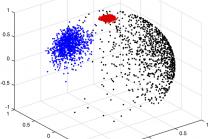

Non-parametric Bayesian methods have recently gained popularity in several research areas dealing with unsupervised learning. These models can simultaneously learn the cluster models and the number of clusters from the properties of a dataset. The most commonly applied models use Dirichlet process priors with Gaussian components and are called Dirichlet process Gaussian mixture models (DPGMMs). Recently, von Mises-Fisher mixture models (VMMs) have also gained popularity for modelling high-dimensional unit-normalized features such as text documents and gene expression data.
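
The Dirichlet process prior is what allows a DPGMM to infer the number of clusters. A minimal sketch of that prior alone, assuming its standard Chinese restaurant process (CRP) representation with a concentration parameter `alpha` (the function names are hypothetical): each new item joins an existing cluster with probability proportional to that cluster's size, or opens a new cluster with probability proportional to `alpha`. This only samples partitions from the prior; it includes neither the Gaussian or von Mises-Fisher likelihood nor the inference procedure used in this work.

```python
import random


def sample_crp(n, alpha, seed=None):
    """Sample cluster assignments for n items from a Chinese restaurant process prior."""
    if n < 0 or alpha <= 0:
        raise ValueError("need n >= 0 and alpha > 0")
    rng = random.Random(seed)
    assignments = []
    counts = []  # counts[k] = number of items currently in cluster k
    for _ in range(n):
        # Existing cluster k has weight counts[k]; a new cluster has weight alpha.
        # random.choices normalizes by the total, i.e. by (items so far + alpha).
        weights = counts + [alpha]
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(counts):
            counts.append(1)  # open a new cluster
        else:
            counts[k] += 1
        assignments.append(k)
    return assignments, counts


def expected_num_clusters(n, alpha):
    """Prior mean of the number of clusters: sum_{i=0}^{n-1} alpha / (alpha + i)."""
    return sum(alpha / (alpha + i) for i in range(n))


if __name__ == "__main__":
    labels, sizes = sample_crp(1000, alpha=2.0, seed=0)
    print(len(sizes), "clusters; prior mean", round(expected_num_clusters(1000, 2.0), 2))
```

A larger `alpha` favors more clusters, but the expected count grows only roughly like `alpha * log(1 + n / alpha)`. In a full mixture model, the likelihood of the observed data then determines how many clusters are actually occupied.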

33 Views

- Learning Structural Properties of Wireless Ad-Hoc Networks Non-Parametrically from Spectral Activity Samples

4 Views

- Robust Estimation of Self-Exciting Point Process Models with Application to Neuronal Modeling

We consider the problem of estimating discrete self-exciting point process models from limited binary observations, where the history of the process serves as the covariate. We analyze the performance of two classes of estimators, ℓ1-regularized maximum likelihood and greedy estimation, for a discrete version of the Hawkes process, and characterize the sampling tradeoffs required for stable recovery in the non-asymptotic regime. Our results extend those of compressed sensing for linear and generalized linear models with i.i.d.
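
To make the setting concrete, here is a minimal sketch of ℓ1-regularized maximum likelihood for a discrete self-exciting process. It assumes a logistic link, P(x_t = 1 | history) = σ(μ + Σ_k θ_k x_{t−k}), with a finite history length `p`. The link, the proximal-gradient (ISTA) solver, and all function names and parameter values are illustrative assumptions, not the model or estimator analyzed in the paper; the greedy estimator is not shown.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def soft_threshold(v, tau):
    # Proximal operator of tau * |v|: shrinks v toward zero by tau.
    return math.copysign(max(abs(v) - tau, 0.0), v)


def simulate(mu, theta, T, seed=0):
    """Draw T binary samples; the previous p = len(theta) samples act as covariates."""
    rng = random.Random(seed)
    p = len(theta)
    x = [0] * p  # zero initial history, dropped before returning
    for _ in range(T):
        hist = [x[-k] for k in range(1, p + 1)]  # x_{t-1}, ..., x_{t-p}
        z = mu + sum(th * h for th, h in zip(theta, hist))
        x.append(1 if rng.random() < sigmoid(z) else 0)
    return x[p:]


def fit_l1(x, p, lam, step=0.5, iters=400):
    """Proximal gradient on the mean negative log-likelihood + lam * ||theta||_1."""
    rows = [[x[t - k] for k in range(1, p + 1)] for t in range(p, len(x))]
    y = x[p:]
    n = len(y)
    mu, theta = 0.0, [0.0] * p
    for _ in range(iters):
        g_mu, g_th = 0.0, [0.0] * p
        for r, yt in zip(rows, y):
            err = sigmoid(mu + sum(th * v for th, v in zip(theta, r))) - yt
            g_mu += err
            for k in range(p):
                g_th[k] += err * r[k]
        mu -= step * g_mu / n  # the baseline mu is not penalized
        theta = [soft_threshold(th - step * g / n, step * lam)
                 for th, g in zip(theta, g_th)]
    return mu, theta


if __name__ == "__main__":
    x = simulate(mu=-1.5, theta=[1.5, 0.0, 0.0], T=1000, seed=1)
    print(fit_l1(x, p=3, lam=0.01))
```

With a large `lam` every θ_k is shrunk exactly to zero and only the baseline is fit; with a small `lam` the coefficient on x_{t−1} used in the simulation comes out clearly positive. The default `step=0.5` stays below the inverse Lipschitz constant of the gradient for binary covariates and small `p`.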

19 Views

- Inference of Sparse Gene Regulatory Network from RNA-Seq Time Series Data

3 Views
