- Human Spoken Language Acquisition, Development and Learning (SLP-LADL)

- Language Modeling, for Speech and SLP (SLP-LANG)

- Machine Translation of Speech (SLP-SSMT)

- Speech Data Mining (SLP-DM)

- Speech Retrieval (SLP-IR)

- Spoken and Multimodal Dialog Systems and Applications (SLP-SMMD)

- Spoken language resources and annotation (SLP-REAN)

- Spoken Language Understanding (SLP-UNDE)

Vanishing long-term gradients are a major issue in training standard recurrent neural networks (RNNs). The problem can be alleviated by long short-term memory (LSTM) models with memory cells. However, the extra parameters associated with the memory cells mean that an LSTM layer has four times as many parameters as an RNN with the same hidden vector size. This paper addresses the vanishing gradient problem using a high-order RNN (HORNN), which has additional connections from multiple previous time steps.
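The abstract does not give the HORNN equations, so the following is only a sketch under a stated assumption: it uses the straightforward formulation h_t = tanh(W x_t + U_1 h_{t-1} + ... + U_N h_{t-N} + b), with a separate recurrent matrix for each previous step, and compares layer parameter counts against a plain tanh RNN and an LSTM (assumed to have one bias per gate and no peephole or projection weights). All function names and the layer sizes in the usage lines are hypothetical; the paper's HORNN may share or constrain these connections differently.

```python
import math
import random
from typing import List, Sequence

Matrix = List[List[float]]
Vector = List[float]


def rnn_params(input_size: int, hidden_size: int) -> int:
    """Plain tanh RNN layer: input matrix W (H x I), recurrent matrix U (H x H), bias b (H)."""
    return hidden_size * input_size + hidden_size * hidden_size + hidden_size


def lstm_params(input_size: int, hidden_size: int) -> int:
    """LSTM layer, no peepholes/projection: four gate blocks, each shaped like one RNN layer."""
    return 4 * rnn_params(input_size, hidden_size)


def hornn_params(input_size: int, hidden_size: int, order: int) -> int:
    """Assumed HORNN layer: one W, one recurrent U_n per previous step n = 1..order, one bias."""
    return hidden_size * input_size + order * hidden_size * hidden_size + hidden_size


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def hornn_forward(xs: Sequence[Vector], W: Matrix, Us: Sequence[Matrix], b: Vector) -> List[Vector]:
    """Run h_t = tanh(W x_t + sum_n U_n h_{t-n} + b) over a sequence, with zero initial states."""
    hidden, order = len(b), len(Us)
    # history[n - 1] holds h_{t-n}; all past states start at zero
    history = [[0.0] * hidden for _ in range(order)]
    outputs = []
    for x in xs:
        pre = matvec(W, x)
        for U, h_prev in zip(Us, history):  # U_1 pairs with h_{t-1}, U_2 with h_{t-2}, ...
            pre = [p + q for p, q in zip(pre, matvec(U, h_prev))]
        h = [math.tanh(p + bias) for p, bias in zip(pre, b)]
        history = [h] + history[:-1]  # shift: newest state first, oldest one dropped
        outputs.append(h)
    return outputs


def random_matrix(rows: int, cols: int) -> Matrix:
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    random.seed(0)
    I, H, N = 3, 4, 2  # toy sizes, chosen only for illustration
    hs = hornn_forward(random_matrix(5, I), random_matrix(H, I),
                       [random_matrix(H, H) for _ in range(N)], [0.0] * H)
    print(len(hs), len(hs[0]))  # 5 4
    print(rnn_params(40, 512), lstm_params(40, 512), hornn_params(40, 512, 3))
    # 283136 1132544 807424
```

With the hypothetical sizes of 40 inputs and 512 hidden units, these assumptions give 283,136 parameters for the RNN, 1,132,544 for the LSTM, and 807,424 for an order-3 HORNN: each extra order adds one H×H matrix instead of the LSTM's fourfold growth.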

- Mongolian Prosodic Phrase Prediction using Suffix Segmentation

Accurate prosodic phrase prediction can improve the naturalness of speech synthesis. Predicting prosodic phrases can be regarded as a sequence labeling problem, and a conditional random field (CRF) is typically used to solve it. Mongolian is an agglutinative language, in which a large number of words can be formed by concatenating stems and suffixes. This characteristic makes it difficult to build a high-performance CRF-based Mongolian prosodic phrase prediction system. We introduce a new …
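Since the abstract frames prosodic phrase prediction as sequence labeling with a CRF, a minimal sketch of how a trained linear-chain CRF chooses a label sequence may help. The Viterbi decoder below assumes a two-label scheme (N: no boundary after the word, B: prosodic phrase boundary after the word) and per-word emission and transition scores that have already been computed. The label names, the function `viterbi`, and all scores are hypothetical; the excerpt does not describe the paper's own features or its suffix segmentation method.

```python
from typing import Dict, List, Sequence, Tuple


def viterbi(emissions: Sequence[Dict[str, float]],
            transitions: Dict[Tuple[str, str], float],
            labels: Sequence[str]) -> List[str]:
    """Return the highest-scoring label sequence of a linear-chain CRF.

    emissions[t][y]: score of label y at position t (weighted feature sum)
    transitions[(y_prev, y)]: score of moving from label y_prev to label y
    """
    if not emissions:
        return []
    # best[y]: score of the best partial path ending in label y at the current position
    best = {y: emissions[0][y] for y in labels}
    backpointers: List[Dict[str, str]] = []
    for scores in emissions[1:]:
        new_best, pointers = {}, {}
        for y in labels:
            # pick the predecessor label that maximises path score plus transition score
            prev, score = max(((yp, best[yp] + transitions[(yp, y)]) for yp in labels),
                              key=lambda item: item[1])
            new_best[y] = score + scores[y]
            pointers[y] = prev
        best = new_best
        backpointers.append(pointers)
    # trace back from the best final label
    path = [max(labels, key=lambda y: best[y])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    return path[::-1]


if __name__ == "__main__":
    labels = ["N", "B"]
    # invented scores for a four-word phrase; a real CRF would learn them from features
    emissions = [{"N": 2.0, "B": 0.5}, {"N": 1.0, "B": 1.2},
                 {"N": 1.5, "B": 0.2}, {"N": 0.0, "B": 2.0}]
    transitions = {("N", "N"): 0.5, ("N", "B"): 0.0,
                   ("B", "N"): 0.3, ("B", "B"): -1.0}
    print(viterbi(emissions, transitions, labels))  # ['N', 'N', 'N', 'B']
```

In these toy scores the second word locally prefers B, but the N→N transition score makes N N N B the better overall path (total 7.5, versus 7.0 for N B N B). This transition-aware, whole-sequence decision is what separates CRF decoding from labeling each word independently.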

- Investigating Gated Recurrent Neural Networks for Acoustic Modeling

- Evaluation of a multimodal 3-d pronunciation tutor for learning Mandarin as a second language: an eye-tracking study

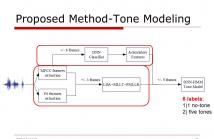

- Improving Mandarin Tone Recognition Based on DNN by Combining Acoustic and Articulatory Features

- Automatic Mandarin Prosody Boundary Detecting Based on Tone Nucleus Features and DNN Model

- A Study on Functional Load of Chinese Prosody Phrase Boundaries under Reduction of Syllable Information

This is an overview poster about the functional load of Chinese prosodic boundaries.

- Recognition of spoken words in L2 speech using L1 probabilistic phonotactics: Evidence from Cantonese-English bilinguals
