- Read more about COLOR REPRESENTATION IN DEEP NEURAL NETWORKS

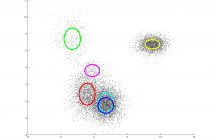

Convolutional neural networks are top-performers on image classification tasks. Understanding how they make use of color information in images may be useful for various tasks. In this paper we analyze the representation learned by a popular CNN to detect and characterize color-related features. We confirm the existence of some object- and color-specific units, as well as the effect of layer-depth on color-sensitivity and class-invariance.

22 Views

- Read more about SENTIMENT ANALYSIS WITH RECURRENT NEURAL NETWORK AND UNSUPERVISED NEURAL LANGUAGE MODEL

This paper describes a simple and efficient Neural Language Model approach for text classification that relies only on unsupervised word representation inputs. Our model employs a Recurrent Neural Network with Long Short-Term Memory (RNN-LSTM) on top of pre-trained word vectors for sentence-level classification tasks. We hypothesize that using word vectors obtained from an unsupervised neural language model as an extra feature with RNN-LSTM can increase the performance of a Natural Language Processing (NLP) system.

434 Views

- Read more about Dynamic Probabilistic Linear Discriminant Analysis for Face Recognition in Videos

Component Analysis (CA) for computer vision and machine learning comprises a set of statistical techniques that decompose visual data into latent components relevant to the task at hand, such as alignment, clustering, segmentation, and classification. The past few years have witnessed an explosion of research in component analysis, introducing both novel deterministic and probabilistic models (e.g., Probabilistic Principal Component Analysis (PPCA), Probabilistic Linear Discriminant Analysis (PLDA), and Probabilistic Canonical Correlation Analysis (PCCA)).

26 Views

- Read more about A Robust FISTA-Like Algorithm

The Fast Iterative Shrinkage-Thresholding Algorithm (FISTA) is regarded as the state-of-the-art among a number of proximal gradient-based methods used for addressing large-scale optimization problems with simple but non-differentiable objective functions. However, the efficiency of FISTA in a wide range of applications is hampered by a simple drawback in the line search scheme. The local estimate of the Lipschitz constant, the inverse of which gives the step size, can only increase while the algorithm is running.
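
The drawback described above can be illustrated with a minimal sketch of standard FISTA with backtracking line search, applied here to a small LASSO problem. This is the classical scheme, not the robust variant the paper proposes. The test problem, the function names (`fista`, `soft_threshold`, and so on), and the defaults `L0` and `eta` are assumptions chosen for illustration.

```python
import math

def soft_threshold(v, t):
    # Proximal operator of t * ||.||_1, applied elementwise.
    return [math.copysign(max(abs(vi) - t, 0.0), vi) for vi in v]

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def smooth_part(A, b, x):
    # f(x) = 0.5 * ||A x - b||^2, the differentiable term of the objective.
    r = [ri - bi for ri, bi in zip(matvec(A, x), b)]
    return 0.5 * sum(ri * ri for ri in r)

def gradient(A, b, x):
    # grad f(x) = A^T (A x - b)
    r = [ri - bi for ri, bi in zip(matvec(A, x), b)]
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(x))]

def fista(A, b, lam, L0=1.0, eta=2.0, iters=200):
    """Minimize 0.5*||Ax-b||^2 + lam*||x||_1 (illustrative problem choice)."""
    n = len(A[0])
    x = [0.0] * n
    y = x[:]
    t = 1.0
    L = L0          # local estimate of the Lipschitz constant; step size is 1/L
    history = []    # value of L after each iteration
    for _ in range(iters):
        g = gradient(A, b, y)
        f_y = smooth_part(A, b, y)
        while True:
            x_new = soft_threshold([yi - gi / L for yi, gi in zip(y, g)], lam / L)
            d = [xn - yi for xn, yi in zip(x_new, y)]
            quad = (f_y + sum(gi * di for gi, di in zip(g, d))
                    + 0.5 * L * sum(di * di for di in d))
            if smooth_part(A, b, x_new) <= quad + 1e-12:
                break
            L *= eta  # L is only ever increased, never reduced again
        t_new = 0.5 * (1.0 + math.sqrt(1.0 + 4.0 * t * t))
        y = [xn + ((t - 1.0) / t_new) * (xn - xo) for xn, xo in zip(x_new, x)]
        x, t = x_new, t_new
        history.append(L)
    return x, history
```

In this sketch `history` is non-decreasing by construction, so one overly conservative estimate of `L` early on shrinks every later step. That is the behaviour the abstract identifies as the weakness of the line search.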

25 Views

- Read more about LEARNING TIME VARYING GRAPHS

We consider the problem of inferring the hidden structure of high-dimensional time-varying data. In particular, we aim at capturing the dynamic relationships by representing data as valued nodes in a sequence of graphs. Our approach is motivated by the observation that imposing a meaningful graph topology can help solve the generally ill-posed and challenging problem of structure inference. To capture the temporal evolution in the sequence of graphs, we introduce a new prior that asserts that the graph edges change smoothly

63 Views

- Read more about LEARNING ROTATION INVARIANCE IN DEEP HIERARCHIES USING CIRCULAR SYMMETRIC FILTERS

Deep hierarchical models for feature learning have emerged as an effective technique for object representation and classification in recent years. Though the features learnt using deep models have shown a lot of promise towards achieving invariance to data transformations, this primarily comes at the expense of using much larger training data and model size. In the proposed work we devise a novel technique to incorporate rotation invariance while training the deep model parameters.

36 Views

- Read more about Active Regression with Compressive Sensing based Outlier Mitigation for both Small and Large Outliers

In this paper, a new active learning scheme is proposed for linear regression problems with the objective of resolving the insufficient training data problem and the unreliable training data labeling problem. A pool-based active regression technique is applied to select the optimal training data to label from the overall data pool. Then, compressive sensing is exploited to remove labeling errors if the errors are sparse and have large enough magnitudes, which are called large

10 Views

Learning parameters from voluminous data can be prohibitive in terms of memory and computational requirements. We propose a "compressive learning" framework where we first sketch the data by computing random generalized moments of the underlying probability distribution, then estimate mixture model parameters from the sketch using an iterative algorithm analogous to greedy sparse signal recovery. We exemplify our framework with the sketched estimation of Gaussian Mixture Models (GMMs).
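
The sketching step can be illustrated as follows. The abstract does not say which generalized moments are used, so this sketch assumes one plausible choice: samples of the empirical characteristic function at random Gaussian frequencies. The helper names (`draw_frequencies`, `compute_sketch`, `merge_sketches`) are hypothetical, and the mixture estimation step is not shown.

```python
import cmath
import random

def draw_frequencies(m, dim, scale=1.0, seed=0):
    # Assumption: frequencies are i.i.d. Gaussian; the paper may use another law.
    rng = random.Random(seed)
    return [[rng.gauss(0.0, scale) for _ in range(dim)] for _ in range(m)]

def compute_sketch(data, freqs):
    # z_j = (1/N) * sum_i exp(i * <w_j, x_i>): an empirical average,
    # so its size depends on len(freqs), not on the number of data points.
    n = len(data)
    sketch = []
    for w in freqs:
        total = sum(
            (cmath.exp(1j * sum(wk * xk for wk, xk in zip(w, x))) for x in data),
            0j,
        )
        sketch.append(total / n)
    return sketch

def merge_sketches(sketch_a, n_a, sketch_b, n_b):
    # Sketches of two data chunks combine by a weighted average, so the
    # full dataset never has to be held in memory at once.
    return [(n_a * a + n_b * b) / (n_a + n_b) for a, b in zip(sketch_a, sketch_b)]
```

Because the sketch is an average, it can be computed in a single pass or in parallel over chunks and then merged, which is where the memory savings would come from under these assumptions.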

10 Views

- Read more about Dictionary Learning from Phaseless Measurements

We propose a new algorithm to learn a dictionary along with sparse representations from signal measurements without phase. Specifically, we consider the task of reconstructing a two-dimensional image from squared-magnitude measurements of a complex-valued linear transformation of the original image. Several recent phase retrieval algorithms exploit underlying sparsity of the unknown signal in order to improve recovery performance.
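
The measurement model described above, squared magnitudes of a complex-valued linear transform, can be written out as a small sketch. It shows only the forward model and the global phase ambiguity it creates, not the proposed dictionary learning algorithm. The function name `phaseless_measure` and the tiny matrix are illustrative assumptions.

```python
import cmath

def phaseless_measure(A, x):
    # y_k = |<a_k, x>|^2 for each row a_k of the complex matrix A.
    out = []
    for row in A:
        v = sum((a * xi for a, xi in zip(row, x)), 0j)
        out.append(v.real ** 2 + v.imag ** 2)
    return out

# Illustrative 2x2 complex transform and a real-valued signal.
A = [[1, 1j], [1, -1]]
x = [1, 2]
y = phaseless_measure(A, x)

# Multiplying x by any unit-modulus factor leaves the measurements unchanged,
# so the signal can be recovered at best up to a global phase.
x_rotated = [cmath.exp(0.7j) * xi for xi in x]
y_rotated = phaseless_measure(A, x_rotated)
```

Here `y` and `y_rotated` agree up to floating-point error, which is why phase retrieval methods typically rely on additional structure such as sparsity to make recovery well-posed.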

10 Views
