- A Characterization of Stochastic Mirror Descent Algorithms and Their Convergence Properties

Stochastic mirror descent (SMD) algorithms have recently garnered a great deal of attention in optimization, signal processing, and machine learning. They are similar to stochastic gradient descent (SGD) in that they perform updates along the negative gradient of an instantaneous (or stochastically chosen) loss function. However, rather than updating the parameter (or weight) vector directly, they update it in a "mirrored" domain whose transformation is given by the gradient of a strictly convex, differentiable potential function.
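The mirrored update can be written as $\nabla\psi(\mathbf{w}_{t+1}) = \nabla\psi(\mathbf{w}_t) - \eta\,\nabla L_i(\mathbf{w}_t)$, where $\psi$ is the potential and $L_i$ the instantaneous loss. The sketch below is a minimal illustration, not the paper's algorithm: it assumes the negative-entropy potential $\psi(\mathbf{w}) = \sum_j (w_j \log w_j - w_j)$ on positive weights, for which $\nabla\psi(\mathbf{w}) = \log \mathbf{w}$ and the step becomes multiplicative (exponentiated gradient). The squared loss, synthetic data, step size, and helper names (`smd_step`, `run_smd`) are hypothetical.

```python
import math
import random

def smd_step(w, grad, eta, mirror, inverse_mirror):
    """One stochastic mirror descent step: map w to the mirror domain,
    take a gradient step there, then map back."""
    z = [mirror(wj) for wj in w]                     # z = grad psi(w)
    z = [zj - eta * gj for zj, gj in zip(z, grad)]   # gradient step in the mirror domain
    return [inverse_mirror(zj) for zj in z]          # w = (grad psi)^{-1}(z)

# Negative-entropy potential psi(w) = sum(w log w - w): grad psi(w) = log w,
# so the inverse mirror map is exp and the iterates stay strictly positive.
mirror, inverse_mirror = math.log, math.exp

def run_smd(w_true, steps=20000, eta=0.05, seed=0):
    """Fit a noise-free linear model y = w_true . x with SMD on the squared loss."""
    rng = random.Random(seed)
    w = [1.0] * len(w_true)                          # strictly positive starting point
    for _ in range(steps):
        x = [rng.random() for _ in w_true]           # stochastically chosen sample
        y = sum(a * b for a, b in zip(w_true, x))    # noise-free target
        residual = sum(a * b for a, b in zip(w, x)) - y
        grad = [residual * xj for xj in x]           # gradient of 0.5 * residual**2
        w = smd_step(w, grad, eta, mirror, inverse_mirror)
    return w

w_hat = run_smd([0.5, 1.0, 2.0])
print([round(v, 3) for v in w_hat])
```

Passing the identity for both maps corresponds to $\psi(\mathbf{w}) = \tfrac{1}{2}\|\mathbf{w}\|^2$ and recovers plain SGD, which is the sense in which SMD generalizes it.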

48 Views

- A Fast Method of Computing Persistent Homology of Time Series Data

18 Views

- Pairwise Approximate K-SVD

Pairwise, or separable, dictionaries are suited to the sparse representation of 2D signals in their original form, without vectorization. They are equivalent to enforcing a Kronecker structure on a standard dictionary for 1D signals. We present a dictionary learning algorithm for such dictionaries, in the coordinate descent style of Approximate K-SVD. The algorithm has extremely low complexity, clearly lower than that of existing algorithms.
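The equivalence between a pairwise dictionary pair $(\mathbf{D}_1, \mathbf{D}_2)$ and a Kronecker-structured 1D dictionary follows from the identity $\mathrm{vec}(\mathbf{D}_1\mathbf{X}\mathbf{D}_2^\top) = (\mathbf{D}_2 \otimes \mathbf{D}_1)\,\mathrm{vec}(\mathbf{X})$ with column-major vectorization. The NumPy sketch below checks this numerically; the sizes and random dictionaries are hypothetical, and it illustrates only the structure, not the learning algorithm.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 2D signals are 8x6, dictionaries have 12 and 10 atoms.
D1 = rng.standard_normal((8, 12))   # acts along the first dimension (columns)
D2 = rng.standard_normal((6, 10))   # acts along the second dimension (rows)
X = rng.standard_normal((12, 10))   # 2D coefficient matrix

def vec(M):
    """Column-major vectorization, the convention under which the identity holds."""
    return M.reshape(-1, order="F")

Y_pairwise = D1 @ X @ D2.T          # separable synthesis, no vectorization needed
D_kron = np.kron(D2, D1)            # equivalent 1D dictionary, shape (48, 120)
y_vectorized = D_kron @ vec(X)

print(D_kron.shape, np.allclose(vec(Y_pairwise), y_vectorized))
```

The separable form is also cheaper to apply: here it needs 8·12·10 + 8·10·6 = 1,440 multiplications, against 48·120 = 5,760 for the explicit Kronecker matrix.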

17 Views

- Read more about Exact Recovery by Semidefinite Programming in the Binary Stochastic Block Model with Partially Revealed Side Information

- Log in to post comments

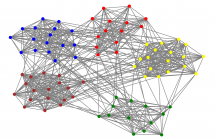

Semidefinite programming has been shown to be both efficient and asymptotically optimal in solving community detection problems, as long as observations are purely graphical in nature. In this paper, we extend this result to observations that have both a graphical and a non-graphical component. We consider the binary censored block model with $n$ nodes and study the effect of partially revealed labels on the performance of semidefinite programming.
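For orientation, the block below writes out the standard SDP relaxation for the binary censored block model; it is a textbook formulation, not taken from the paper. Here $\mathbf{A}$ is assumed to hold the censored observations ($A_{ij}\in\{+1,-1\}$ for an observed pair, $0$ otherwise, $A_{ii}=0$), so with a symmetric flip probability below $1/2$ maximum-likelihood estimation maximizes $\mathbf{x}^\top\mathbf{A}\mathbf{x}$ over labels, and dropping the rank-one constraint $\mathbf{X}=\mathbf{x}\mathbf{x}^\top$ gives the SDP. The last constraint, which fixes entries of $\mathbf{X}$ for the set $\mathcal{S}$ of nodes with revealed labels $y_i$, is one hypothetical way to inject side information; the paper's formulation may differ.

```latex
\max_{\mathbf{x}\in\{\pm 1\}^n} \; \mathbf{x}^\top \mathbf{A}\,\mathbf{x}
\;=\; \max_{\mathbf{x}\in\{\pm 1\}^n} \; \langle \mathbf{A}, \mathbf{x}\mathbf{x}^\top \rangle
\qquad\longrightarrow\qquad
\begin{aligned}
\max_{\mathbf{X}\in\mathbb{R}^{n\times n}} \quad & \langle \mathbf{A}, \mathbf{X} \rangle \\
\text{s.t.} \quad & \mathbf{X} \succeq 0, \quad X_{ii} = 1,\; i = 1,\dots,n, \\
& X_{ij} = y_i y_j, \quad i, j \in \mathcal{S} \quad \text{(assumed side-information constraint)}
\end{aligned}
```

Without side information the labels are identifiable only up to a global sign flip; fixing even one revealed label removes that ambiguity.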

59 Views

- Feature Selection for Multi-labeled Variables via Dependency Maximization

Feature selection and dimensionality reduction are essential steps in data analysis. In this work, we propose a new criterion for feature selection, formulated as the conditional information between features given the label variable. Instead of the standard mutual information measure based on the Kullback-Leibler divergence, we use the proposed criterion to filter out redundant features for multiclass classification.
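The abstract does not spell out how the criterion is estimated. As a building block, the sketch below computes a plug-in estimate (in nats) of the conditional mutual information $I(X;Z\mid Y)$ for discrete samples, which scores how redundant two features are once the label is known. The function name and toy data are hypothetical, and using this score to drop one feature of a highly redundant pair is an illustrative assumption, not the paper's procedure.

```python
import math
from collections import Counter

def conditional_mutual_information(x, z, y):
    """Plug-in estimate (nats) of I(X; Z | Y) from paired discrete samples."""
    n = len(y)
    if n == 0 or not (len(x) == len(z) == n):
        raise ValueError("x, z, y must be non-empty and of equal length")
    c_xzy = Counter(zip(x, z, y))
    c_xy = Counter(zip(x, y))
    c_zy = Counter(zip(z, y))
    c_y = Counter(y)
    cmi = 0.0
    for (xi, zi, yi), count in c_xzy.items():
        # p(x,z,y) * log[p(y) p(x,z,y) / (p(x,y) p(z,y))]; the 1/n factors cancel in the log
        cmi += (count / n) * math.log(c_y[yi] * count / (c_xy[(xi, yi)] * c_zy[(zi, yi)]))
    return cmi

labels = [0, 0, 0, 0, 1, 1, 1, 1]
f1 = [0, 1, 0, 1, 0, 1, 0, 1]
f2 = list(f1)                   # exact copy of f1: fully redundant given the label
f3 = [0, 0, 1, 1, 0, 0, 1, 1]   # independent of f1 within each class
print(conditional_mutual_information(f1, f2, labels))  # about 0.693 (ln 2)
print(conditional_mutual_information(f1, f3, labels))  # 0.0: no redundancy given the label
```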

12 Views

- Pruning SIFT & SURF for Efficient Clustering of Near-Duplicate Images

Clustering and categorizing similar images using SIFT and SURF incurs a high computational cost. In this paper, we propose a simple approach that reduces the cardinality of the keypoint set and prunes the dimension of the SIFT and SURF feature descriptors for efficient image clustering. To this end, sparsely spaced (uniformly distributed) important keypoints are selected. In addition, multiple reduced-dimensional variants of the SIFT and SURF descriptors are presented.
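The abstract does not give the exact rule for choosing sparsely spaced important keypoints. One simple way to get a uniform spread, assumed here for illustration, is to lay a grid over the image and keep only the keypoint with the strongest detector response in each cell. Keypoints are plain `(x, y, response)` tuples; detectors such as OpenCV's return `cv2.KeyPoint` objects exposing `pt` and `response`, which can be converted to this form first. The function name and cell size are hypothetical.

```python
def prune_keypoints_grid(keypoints, cell_size):
    """Keep the highest-response keypoint in each cell_size x cell_size grid cell.

    keypoints: iterable of (x, y, response) tuples in pixel coordinates.
    Returns the retained keypoints sorted by descending response.
    """
    best = {}
    for kp in keypoints:
        x, y, response = kp
        cell = (int(x // cell_size), int(y // cell_size))
        if cell not in best or response > best[cell][2]:
            best[cell] = kp
    return sorted(best.values(), key=lambda kp: kp[2], reverse=True)

keypoints = [
    (5.0, 5.0, 0.9), (8.0, 3.0, 0.4),      # same 10x10 cell: keep the 0.9 response
    (15.0, 4.0, 0.7),                      # alone in its cell
    (12.0, 18.0, 0.2), (19.0, 11.0, 0.6),  # same cell: keep the 0.6 response
]
kept = prune_keypoints_grid(keypoints, cell_size=10)
print(kept)
```

Larger cells give fewer, more evenly spread keypoints, which directly shrinks the number of descriptors that the clustering stage must compare.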

26 Views

- Solving Complex Quadratic Equations with Full-rank Random Gaussian Matrices

We tackle the problem of recovering a complex signal $\mathbf{x}\in\mathbb{C}^n$ from quadratic measurements of the form $y_i=\mathbf{x}^*\mathbf{A}_i\mathbf{x}$, where $\{\mathbf{A}_i\}_{i=1}^m$ is a set of complex i.i.d. standard Gaussian matrices. This non-convex problem is related to the well-understood phase retrieval problem, in which each $\mathbf{A}_i$ is a rank-1 positive semidefinite matrix.
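To make the measurement model concrete, the NumPy sketch below draws i.i.d. standard complex Gaussian $\mathbf{A}_i$, computes $y_i=\mathbf{x}^*\mathbf{A}_i\mathbf{x}$, and checks two facts that follow directly from the definition: a global phase $e^{i\theta}$ cancels, so $\mathbf{x}$ can at best be recovered up to that phase, and with rank-1 PSD $\mathbf{A}_i=\mathbf{a}_i\mathbf{a}_i^*$ the measurements reduce to the phase-retrieval form $|\mathbf{a}_i^*\mathbf{x}|^2$. The sizes `n`, `m` and the helper names are hypothetical, and no recovery algorithm is implemented.

```python
import numpy as np

rng = np.random.default_rng(1)
n, m = 4, 10  # hypothetical signal length and number of measurements

def complex_gaussian(*shape):
    """i.i.d. standard complex Gaussian entries, CN(0, 1)."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

def measure(x, A):
    """y_k = x^* A_k x for each matrix A_k stacked along axis 0.
    Full-rank Gaussian A_k are not Hermitian, so y_k is complex in general."""
    return np.einsum("i,kij,j->k", x.conj(), A, x)

x = complex_gaussian(n)
A = complex_gaussian(m, n, n)
y = measure(x, A)

# A global phase factor e^{i theta} cancels in x^* A_k x.
theta = 0.7
print(np.allclose(measure(np.exp(1j * theta) * x, A), y))

# Rank-1 PSD special case A_k = a_k a_k^*: y_k = |a_k^* x|^2 (phase retrieval).
a = complex_gaussian(m, n)
A_rank1 = np.einsum("ki,kj->kij", a, a.conj())
print(np.allclose(measure(x, A_rank1), np.abs(a.conj() @ x) ** 2))
```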

17 Views

- Time Series Prediction for Kernel-Based Adaptive Filters Using Variable Bandwidth, Adaptive Learning-Rate, and Dimensionality Reduction

Kernel-based adaptive filters are sequential learning algorithms that operate in reproducing kernel Hilbert spaces. Their learning performance is sensitive to the choice of the kernel bandwidth and learning-rate parameters. Additionally, because these algorithms train the model on a sequence of input vectors, their computational cost grows with the number of samples. We propose a framework that addresses these open challenges of kernel-based adaptive filters.
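The issues named above are visible in the plain kernel least-mean-squares (KLMS) filter, sketched below as a baseline rather than the proposed framework: the Gaussian bandwidth and the learning rate are fixed by hand, and every update stores its input as a new center, so memory and per-prediction cost grow linearly with the number of samples. The class name, the sine-wave task, the embedding order, and all parameter values are hypothetical.

```python
import math

class KLMS:
    """Kernel least-mean-squares filter with a fixed Gaussian kernel.

    Each update appends the input as a new center, so the model grows
    linearly with the number of training samples.
    """

    def __init__(self, bandwidth=1.0, learning_rate=0.5):
        self.bandwidth = bandwidth
        self.learning_rate = learning_rate
        self.centers = []
        self.coefficients = []

    def _kernel(self, u, v):
        sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
        return math.exp(-sq_dist / (2.0 * self.bandwidth ** 2))

    def predict(self, u):
        return sum(c * self._kernel(center, u)
                   for center, c in zip(self.centers, self.coefficients))

    def update(self, u, desired):
        error = desired - self.predict(u)  # a priori prediction error
        self.centers.append(list(u))
        self.coefficients.append(self.learning_rate * error)
        return error

# One-step-ahead prediction of a sine wave from its 5 previous samples.
signal = [math.sin(2 * math.pi * t / 20) for t in range(405)]
order = 5
model = KLMS(bandwidth=1.0, learning_rate=0.5)
errors = [model.update(signal[t - order:t], signal[t]) for t in range(order, len(signal))]

mse_early = sum(e * e for e in errors[:40]) / 40
mse_late = sum(e * e for e in errors[-40:]) / 40
print(len(model.centers), mse_early, mse_late)
```

After 400 updates the model holds 400 centers even though the sine wave produces only 20 distinct inputs, which is the kind of redundancy that dimensionality reduction aims to remove.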

12 Views

- Scalable Mutual Information Estimation using Dependence Graphs

Mutual information (MI) is a widely used measure of dependence between two random variables in information theory, statistics, and machine learning. Several MI estimators that achieve the parametric mean squared error (MSE) convergence rate have recently been proposed. However, most of them have a high computational complexity of at least $O(N^2)$. We propose a unified method for empirical non-parametric estimation of a general MI function between random vectors in $\mathbb{R}^d$ based on $N$ i.i.d. samples.
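The abstract says only that the method is based on dependence graphs. The simplified sketch below illustrates how hashing can bring the cost down to $O(N)$: each sample of $X$ and $Y$ is hashed to a randomly shifted grid cell, the counts of co-occurring cell pairs play the role of edge weights in a bipartite graph between $X$-buckets and $Y$-buckets, and a plug-in MI is computed from those counts. It omits any bias correction or ensembling and is not claimed to be the paper's estimator or to reach the parametric rate; the function name and the cell width `eps` are hypothetical.

```python
import math
import random
from collections import Counter

def hashed_mi(xs, ys, eps, seed=0):
    """Plug-in MI estimate (nats) from hashed grid cells, in O(N) time.

    xs, ys: equal-length lists of tuples of floats (points in R^d).
    eps: cell width; a random offset per dimension randomizes the grid.
    """
    rng = random.Random(seed)
    off_x = [rng.uniform(0, eps) for _ in range(len(xs[0]))]
    off_y = [rng.uniform(0, eps) for _ in range(len(ys[0]))]

    def cell(p, off):
        # Integer cell index per dimension; the tuple is hashed by the Counter (dict).
        return tuple(math.floor((v + o) / eps) for v, o in zip(p, off))

    cx = [cell(p, off_x) for p in xs]
    cy = [cell(p, off_y) for p in ys]
    n = len(xs)
    count_x, count_y = Counter(cx), Counter(cy)
    count_xy = Counter(zip(cx, cy))  # edge weights between X-buckets and Y-buckets
    return sum(
        (c / n) * math.log(c * n / (count_x[a] * count_y[b]))
        for (a, b), c in count_xy.items()
    )

rng = random.Random(1)
x = [(rng.random(),) for _ in range(2000)]
y_dep = [(v[0],) for v in x]                     # Y = X: strongly dependent
y_ind = [(rng.random(),) for _ in range(2000)]   # independent of X
mi_dep = hashed_mi(x, y_dep, eps=0.1)
mi_ind = hashed_mi(x, y_ind, eps=0.1)
print(mi_dep, mi_ind)
```

Each sample is touched a constant number of times, so the running time is linear in $N$; the price is a discretization bias controlled by `eps`.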

41 Views

- An Enhanced Hierarchical Extreme Learning Machine with Random Sparse Matrix Based Autoencoder

Recently, multilayer ELM (ML-ELM) and hierarchical ELM (H-ELM) have been developed by stacking extreme learning machine (ELM) based autoencoders (ELM-AE) and sparse AEs (SAE). Compared with conventional stacked AEs, ML-ELM and H-ELM usually achieve better generalization performance with significantly reduced training time. However, the ℓ1-norm based SAE may suffer from overfitting, and it has no analytical solution, which leads to long training times on big data.
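The contrast with the ℓ1-based SAE is that an ELM-AE layer keeps its input weights random and fits only the output weights, which have a closed-form ridge solution $\boldsymbol{\beta} = (\mathbf{H}^\top\mathbf{H} + \mathbf{I}/C)^{-1}\mathbf{H}^\top\mathbf{X}$. The NumPy sketch below shows one such layer with a sparse random input matrix. The Achlioptas-style distribution (entries $\sqrt{3}\cdot\{+1, 0, -1\}$ with probabilities $1/6, 2/3, 1/6$), the `tanh` activation, the regularization `C`, and the function names are assumptions for illustration; the paper's sparse matrix may be constructed differently.

```python
import numpy as np

def sparse_random_matrix(rows, cols, rng):
    """Achlioptas-style sparse random matrix (assumed distribution):
    entries sqrt(3) * {+1, 0, -1} with probabilities {1/6, 2/3, 1/6}."""
    return np.sqrt(3.0) * rng.choice([1.0, 0.0, -1.0], size=(rows, cols), p=[1/6, 2/3, 1/6])

def elm_autoencoder(X, n_hidden, C=1e3, seed=0):
    """One ELM-AE layer: random, untrained sparse input weights; analytic output weights.

    Returns (beta, H) with beta of shape (n_hidden, n_features). In ML-ELM-style
    stacking, X @ beta.T is typically used as the next layer's input.
    """
    rng = np.random.default_rng(seed)
    W = sparse_random_matrix(X.shape[1], n_hidden, rng)
    b = rng.standard_normal(n_hidden)
    H = np.tanh(X @ W + b)  # hidden activations, shape (N, n_hidden)
    # Ridge-regularized least squares for H @ beta ~ X, solved in closed form.
    beta = np.linalg.solve(H.T @ H + np.eye(n_hidden) / C, H.T @ X)
    return beta, H

rng = np.random.default_rng(42)
X = rng.standard_normal((200, 20))  # hypothetical data: 200 samples, 20 features
C = 1e3
beta, H = elm_autoencoder(X, n_hidden=50, C=C)
lhs = (H.T @ H + np.eye(50) / C) @ beta  # normal equations of the ridge problem
print(beta.shape, np.allclose(lhs, H.T @ X, atol=1e-6))
```

Training the layer is a single linear solve of size `n_hidden`, independent of any iterative ℓ1 optimization, which is where the speed advantage comes from.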

95 Views