We present an electrocardiogram (ECG)-based emotion recognition system using self-supervised learning. The proposed architecture consists of two main networks: a signal transformation recognition network and an emotion recognition network. First, in the self-supervised learning step, unlabelled data are used to train the former network to detect specific, pre-determined signal transformations.
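The self-supervised step can be illustrated with a minimal pretext-task sketch. The abstract does not name the transformations, so the four used here (additive Gaussian noise, amplitude scaling, negation, time reversal) and all function names are hypothetical. Each unlabelled signal yields its original copy plus one copy per transformation, and the transformation index serves as an automatically generated label for training a signal transformation recognition network (not shown).

```python
import random

# Hypothetical pre-determined transformations (assumed; the source does not list them).
# All share the signature (signal, rng) so they can be applied uniformly.
def add_noise(x, rng, sigma=0.05):
    return [v + rng.gauss(0.0, sigma) for v in x]

def scale(x, rng, factor=1.5):
    return [v * factor for v in x]

def negate(x, rng):
    return [-v for v in x]

def time_reverse(x, rng):
    return list(reversed(x))

TRANSFORMS = [add_noise, scale, negate, time_reverse]

def make_pretext_dataset(signals, seed=0):
    """Build (signal, label) pairs for self-supervised pre-training.

    Label 0 marks the untouched signal; label k >= 1 marks TRANSFORMS[k - 1].
    No emotion labels are used: the labels come from the transformations themselves.
    """
    rng = random.Random(seed)
    pairs = []
    for x in signals:
        pairs.append((list(x), 0))
        for k, transform in enumerate(TRANSFORMS, start=1):
            pairs.append((transform(x, rng), k))
    return pairs
```

For example, `make_pretext_dataset([[0.0, 0.5, 1.0, 0.5]])` returns five pairs labelled 0 to 4. Because the labels are generated automatically, the amount of pre-training data grows with the number of unlabelled recordings available.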

ICASSP 2020 presentation slide of 'EXTRAPOLATED ALTERNATING ALGORITHMS FOR APPROXIMATE CANONICAL POLYADIC DECOMPOSITION'

Tensor decompositions have become a central tool in machine learning for extracting interpretable patterns from multiway arrays of data. However, computing the approximate Canonical Polyadic Decomposition (aCPD), one of the most important tensor decomposition models, remains a challenge. In this work, we propose several extrapolation-based algorithms that improve on existing alternating methods for aCPD.
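The general idea of extrapolating an alternating method can be sketched on the simplest case, a rank-1 CPD of a 3-way tensor stored as nested lists. This is a generic, safeguarded extrapolation with a fixed weight `beta`, chosen here for illustration; it is not the specific extrapolation scheme proposed in the work, and all function names are hypothetical. Each sweep performs exact alternating least-squares updates, then tries the extrapolated point `new + beta * (new - old)` and keeps it only if it lowers the fit error.

```python
def _update(T, mode, a, b, c):
    """Exact least-squares update of one rank-1 factor with the other two fixed."""
    I, J, K = len(a), len(b), len(c)
    if mode == 0:
        den = sum(v * v for v in b) * sum(v * v for v in c)
        return [sum(T[i][j][k] * b[j] * c[k] for j in range(J) for k in range(K)) / den
                for i in range(I)]
    if mode == 1:
        den = sum(v * v for v in a) * sum(v * v for v in c)
        return [sum(T[i][j][k] * a[i] * c[k] for i in range(I) for k in range(K)) / den
                for j in range(J)]
    den = sum(v * v for v in a) * sum(v * v for v in b)
    return [sum(T[i][j][k] * a[i] * b[j] for i in range(I) for j in range(J)) / den
            for k in range(K)]

def fit_error(T, a, b, c):
    """Squared Frobenius error between T and the rank-1 tensor a (x) b (x) c."""
    return sum((T[i][j][k] - a[i] * b[j] * c[k]) ** 2
               for i in range(len(a)) for j in range(len(b)) for k in range(len(c)))

def extrapolate(new, old, beta):
    return [n + beta * (n - o) for n, o in zip(new, old)]

def rank1_cpd_extrapolated(T, a, b, c, iters=30, beta=0.5):
    """Alternating least squares with a safeguarded extrapolation step (sketch)."""
    history = [fit_error(T, a, b, c)]
    for _ in range(iters):
        # One plain ALS sweep: each block update cannot increase the error.
        a_new = _update(T, 0, a, b, c)
        b_new = _update(T, 1, a_new, b, c)
        c_new = _update(T, 2, a_new, b_new, c)
        err_new = fit_error(T, a_new, b_new, c_new)
        # Extrapolated candidate; accepted only if it improves on the ALS point.
        a_x = extrapolate(a_new, a, beta)
        b_x = extrapolate(b_new, b, beta)
        c_x = extrapolate(c_new, c, beta)
        err_x = fit_error(T, a_x, b_x, c_x)
        if err_x < err_new:
            a, b, c, err = a_x, b_x, c_x, err_x
        else:
            a, b, c, err = a_new, b_new, c_new, err_new
        history.append(err)
    return a, b, c, history
```

The safeguard keeps the error history non-increasing, since every accepted point is at least as good as the plain ALS point. Practical schemes typically adapt `beta` over the iterations instead of fixing it.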

Generalized Kernel-Based Dynamic Mode Decomposition

Serious Games and ML for Detecting MCI

Our work has focused on detecting Mild Cognitive Impairment (MCI) by developing Serious Games (SG) for mobile devices, as distinct from games marketed as 'brain training', which claim to maintain mental acuity. One game, WarCAT, captures players' moves during play to infer processes of strategy recognition, learning, and memory. Our goal is to combine the generated game-play data with machine learning (ML) to help detect MCI. MCI is difficult to detect for several reasons.

Generic Bounds on the Maximum Deviations in Sequential/Sequence Prediction (and the Implications in Recursive Algorithms and Learning/Generalization)

- Log in to post comments

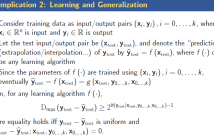

In this paper, we derive generic bounds on the maximum deviations in prediction errors for sequential prediction via an information-theoretic approach. The fundamental bounds are shown to depend only on the conditional entropy of the data point to be predicted given the previous data points. In the asymptotic case, the bounds are achieved if and only if the prediction error is white and uniformly distributed.
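One standard information-theoretic route to a bound of this type is sketched below. The notation is ours ($x_0^{k-1} = (x_0, \dots, x_{k-1})$, a predictor $f_k$, prediction error $e_k$, differential entropy $h$ in bits), and we assume, without reproducing the paper's proof, that its bound takes this form:

$$
\begin{aligned}
e_k &= x_k - f_k\!\left(x_0^{k-1}\right), \\
h(e_k) &\ge h\!\left(e_k \mid x_0^{k-1}\right) = h\!\left(x_k \mid x_0^{k-1}\right), \\
h(e_k) &\le \log_2\!\left(2 \operatorname{ess\,sup} |e_k|\right), \\
\Longrightarrow \quad \operatorname{ess\,sup} |e_k| &\ge \tfrac{1}{2}\, 2^{\,h\left(x_k \mid x_0^{k-1}\right)}.
\end{aligned}
$$

The equality in the second line holds because, given the past, $f_k(x_0^{k-1})$ is a constant shift and differential entropy is translation invariant; the third line holds because the uniform density maximizes differential entropy among densities supported on an interval of length $2 \operatorname{ess\,sup} |e_k|$. Both inequalities are tight exactly when $e_k$ is independent of the past (so the error sequence is white) and uniform on $[-\operatorname{ess\,sup}|e_k|, \operatorname{ess\,sup}|e_k|]$, which matches the equality condition stated above.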

Minimax Active Learning via Minimal Model Capacity

Active learning is a form of machine learning that combines supervised learning with feedback to minimize the size of the training set while keeping the generalization error low. Since the generalization error is difficult to optimize directly, many heuristics have been developed that lack a firm theoretical foundation. In this paper, a new information-theoretic criterion is proposed, based on a minimax log-loss regret formulation of the active learning problem. In the first part of the paper, a Redundancy-Capacity theorem for active learning is derived, along with an optimal learner.
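The classical redundancy-capacity theorem behind this criterion states that the minimax expected log-loss regret over a model class equals the capacity of the "channel" from model to observed label, with the minimax predictor given by the capacity-achieving mixture. The sketch below computes that capacity with the standard Blahut-Arimoto iteration for a finite class, where row `W[t]` is the label distribution under hypothesis `t` at a single candidate query. How the paper turns such capacities into a query-selection rule is not described in this abstract, so no selection rule is implemented; the function name and tolerance are our own.

```python
import math

def blahut_arimoto(W, tol=1e-9, max_iter=10_000):
    """Capacity (bits) of a discrete channel W[t][y] and the minimax mixture q.

    By the redundancy-capacity theorem, min_q max_t D(W[t] || q) equals the
    capacity, i.e. the minimax expected log-loss regret over the hypotheses t.
    """
    n, m = len(W), len(W[0])
    p = [1.0 / n] * n  # prior over hypotheses, kept strictly positive
    for _ in range(max_iter):
        q = [sum(p[t] * W[t][y] for t in range(n)) for y in range(m)]
        # d[t] = KL divergence D(W[t] || q) in bits (terms with W = 0 contribute 0).
        d = [sum(w * math.log2(w / q[y]) for y, w in enumerate(W[t]) if w > 0)
             for t in range(n)]
        z = sum(p[t] * 2 ** d[t] for t in range(n))
        lower, upper = math.log2(z), max(d)  # standard bounds on the capacity
        if upper - lower < tol:
            break
        p = [p[t] * 2 ** d[t] / z for t in range(n)]
    return lower, q
```

For two hypotheses that always disagree on the label (`[[1.0, 0.0], [0.0, 1.0]]`) the capacity is 1 bit, while hypotheses that induce identical label distributions give 0 bits: observing that label cannot distinguish them.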

In this paper, we present a novel incremental and decremental learning method for the least-squares support vector machine (LS-SVM). The goal is to adapt a pre-trained model to changes in the training dataset, such as the addition or deletion of data samples, without retraining the model on all the data. The proposed method is provably exact: the updated model is identical to a model trained from scratch on the entire updated training dataset.
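Exactness of this kind can be illustrated with one standard technique, which is not necessarily the paper's method: keep the inverse of the LS-SVM dual system matrix `[[0, 1^T], [1, K + I/reg]]` and update it with block-inverse (Schur complement) formulas when a sample is added or removed. The RBF kernel, its width `gamma_k`, the regularization value `reg`, and all function names are assumptions made for this sketch.

```python
import math

def rbf(x, z, gamma_k=0.5):
    """Hypothetical RBF kernel choice; any positive-definite kernel works the same way."""
    return math.exp(-gamma_k * sum((a - b) ** 2 for a, b in zip(x, z)))

def system_matrix(X, reg):
    """LS-SVM dual system [[0, 1^T], [1, K + I/reg]] for inputs X."""
    n = len(X)
    A = [[0.0] + [1.0] * n]
    for i in range(n):
        A.append([1.0] + [rbf(X[i], X[j]) + (1.0 / reg if i == j else 0.0) for j in range(n)])
    return A

def invert(A):
    """Gauss-Jordan inversion with partial pivoting (needed: A[0][0] is 0)."""
    n = len(A)
    M = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        pv = M[col][col]
        M[col] = [v / pv for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [u - f * w for u, w in zip(M[r], M[col])]
    return [row[n:] for row in M]

def add_sample(Ainv, X, x_new, reg):
    """Incremental step: grow the inverse by one row/column via the Schur complement."""
    u = [1.0] + [rbf(x, x_new) for x in X]          # new column of the system matrix
    d = rbf(x_new, x_new) + 1.0 / reg               # new diagonal entry
    w = [sum(r[j] * u[j] for j in range(len(u))) for r in Ainv]  # A^{-1} u
    s = d - sum(p * q for p, q in zip(u, w))        # Schur complement (nonzero here)
    m = len(w)
    new = [[Ainv[i][j] + w[i] * w[j] / s for j in range(m)] + [-w[i] / s] for i in range(m)]
    new.append([-w[j] / s for j in range(m)] + [1.0 / s])
    return new, X + [x_new]

def remove_sample(Ainv, X, idx):
    """Decremental step: drop sample idx (row/column idx + 1; row 0 is the bias)."""
    m = idx + 1
    keep = [i for i in range(len(Ainv)) if i != m]
    new = [[Ainv[i][j] - Ainv[i][m] * Ainv[m][j] / Ainv[m][m] for j in keep] for i in keep]
    return new, X[:idx] + X[idx + 1:]

def solve(Ainv, y):
    """Return bias b and dual coefficients alpha from [b; alpha] = A^{-1} [0; y]."""
    rhs = [0.0] + list(y)
    sol = [sum(r[j] * rhs[j] for j in range(len(rhs))) for r in Ainv]
    return sol[0], sol[1:]

def decision(X, alpha, b, x):
    """LS-SVM decision value for a new input x."""
    return sum(a * rbf(xi, x) for a, xi in zip(alpha, X)) + b
```

Each update costs O(n^2) instead of the O(n^3) of re-inverting, and because the block-inverse identities are exact, the resulting `b` and `alpha` agree with a from-scratch solve up to floating-point rounding.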
